- Released At: 10-04-2025

- Page Views:

- Downloads:

- Table of Contents

- Related Documents

-

Maintenance Guide

Document version: 5W102-20250409

Copyright © 2025 New H3C Technologies Co., Ltd. All rights reserved.

No part of this manual may be reproduced or transmitted in any form or by any means without prior written consent of New H3C Technologies Co., Ltd.

Except for the trademarks of New H3C Technologies Co., Ltd., any trademarks that may be mentioned in this document are the property of their respective owners.

The information in this document is subject to change without notice.

System maintenance check on CAS

Checking the running status of CAS

Viewing the high-availability functions of the cluster

Viewing dynamic resource scheduling of a cluster

Viewing shared storage in a cluster

Viewing host performance monitoring

Verifying if CAS Tools are running correctly

Viewing the type of disks and NICs

Viewing performance monitoring of VMs

Log analysis and fault location

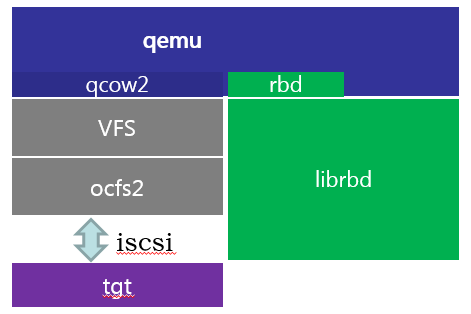

Introduction to the CAS module

Risks, restrictions, and guidelines

About disk backup disaster recovery

Commonly used commands and configuration

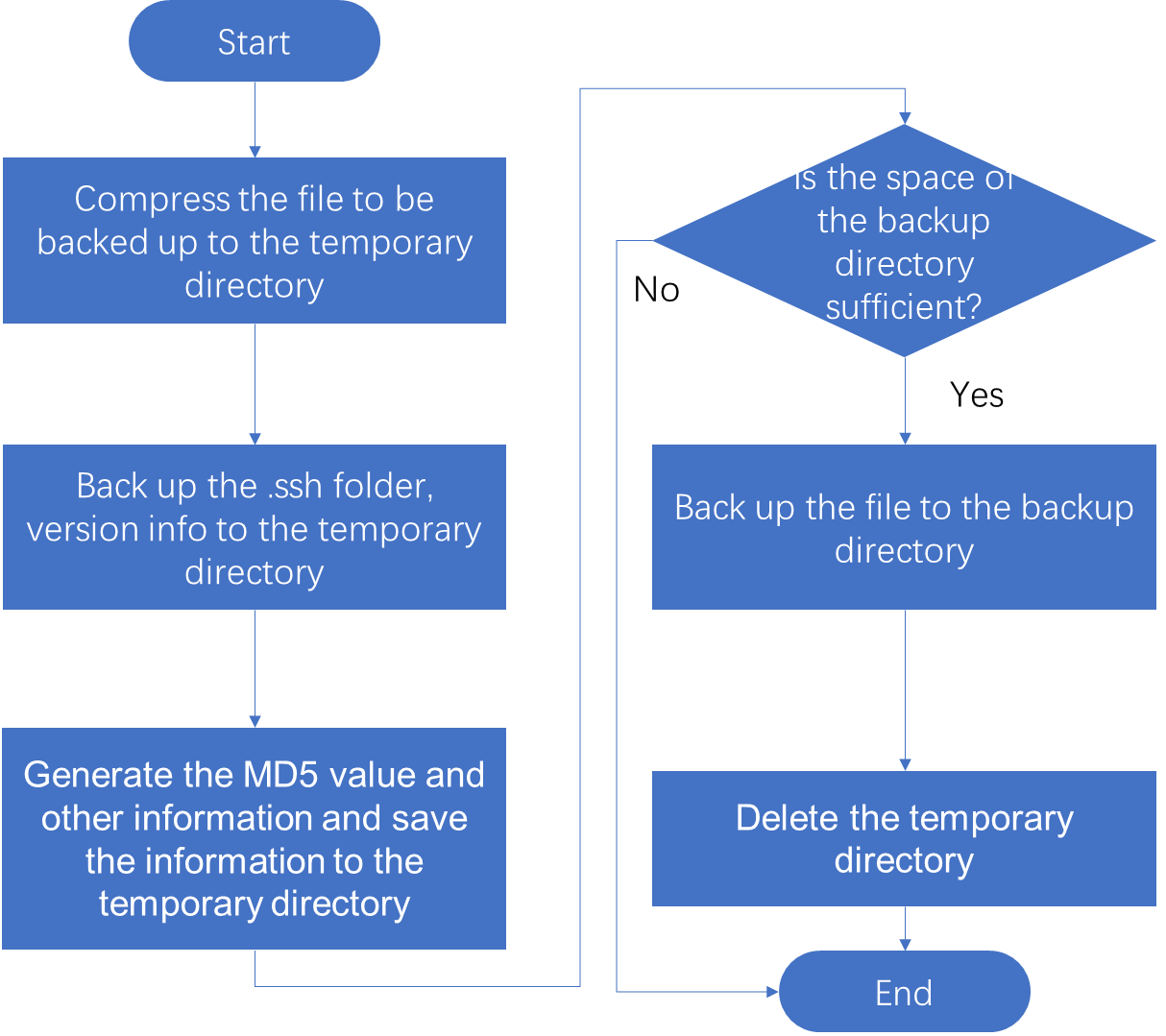

CVM backup process and service management information

CVM backup process and service management information

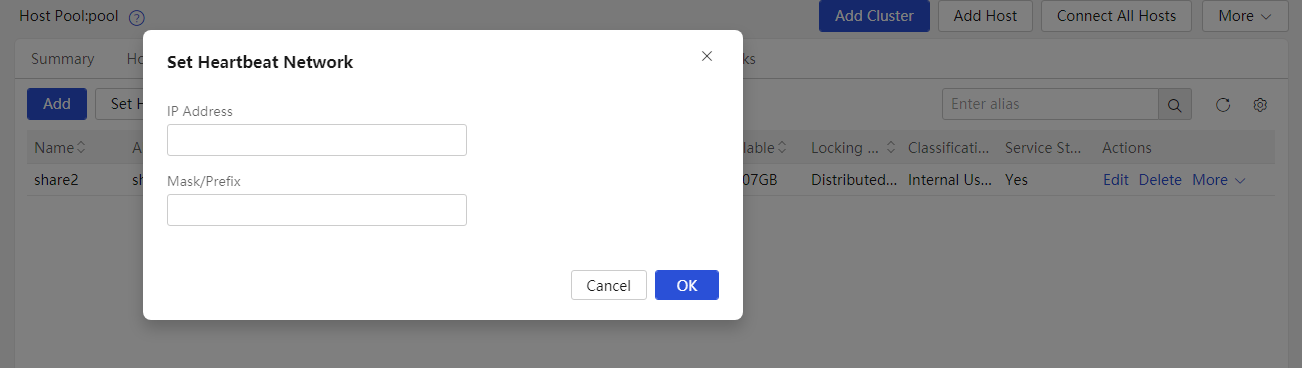

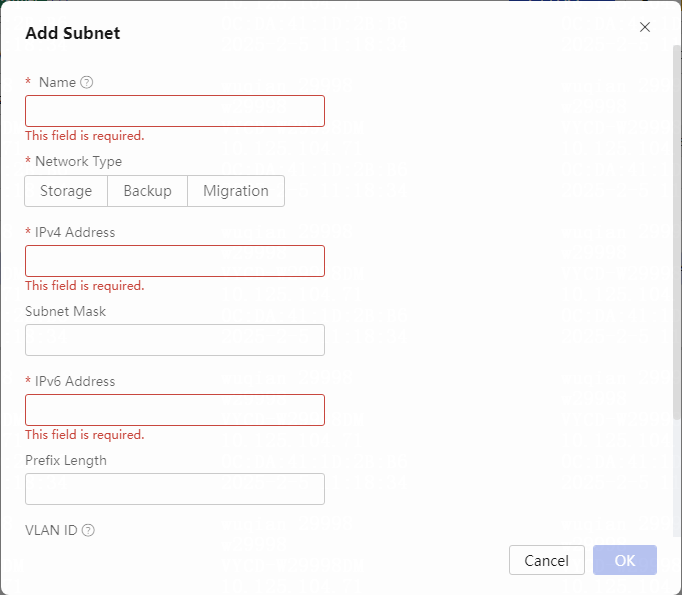

Configuring the heartbeat network for the shared file system

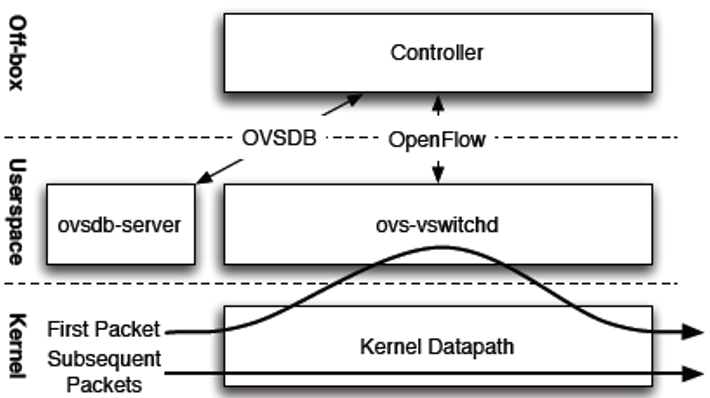

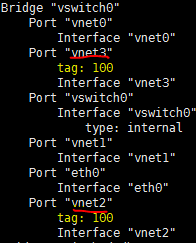

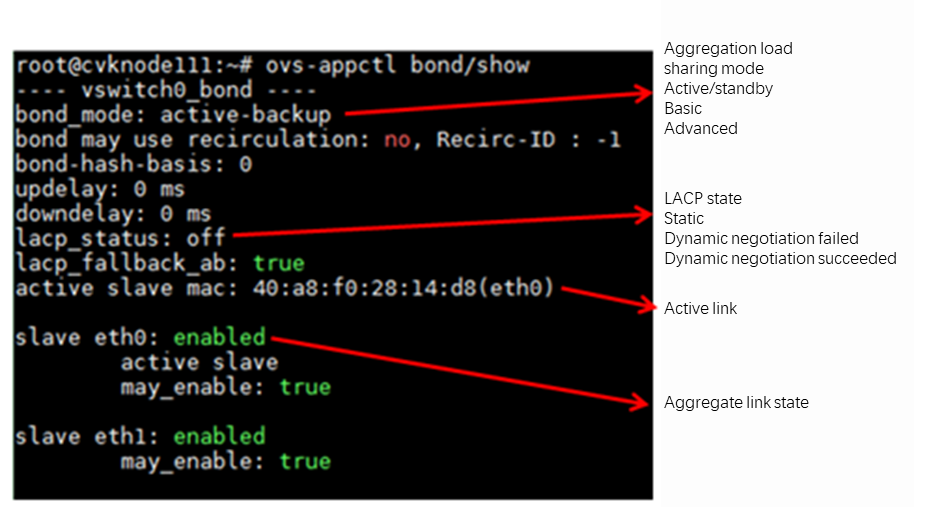

About the OVS command line tool

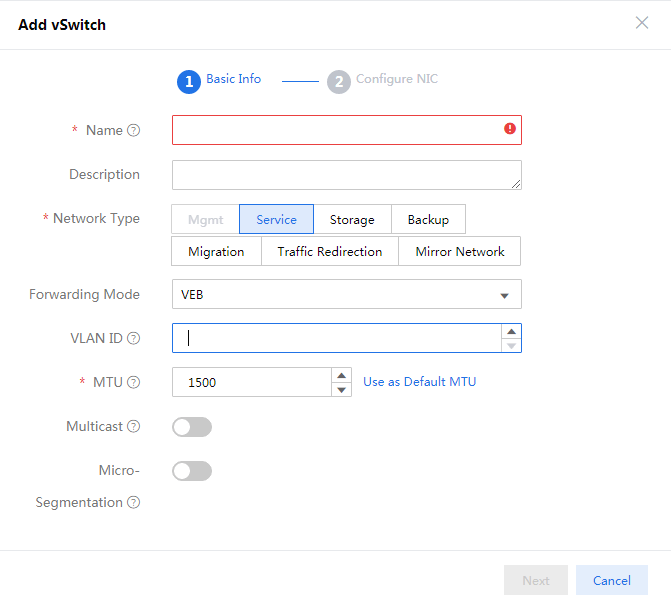

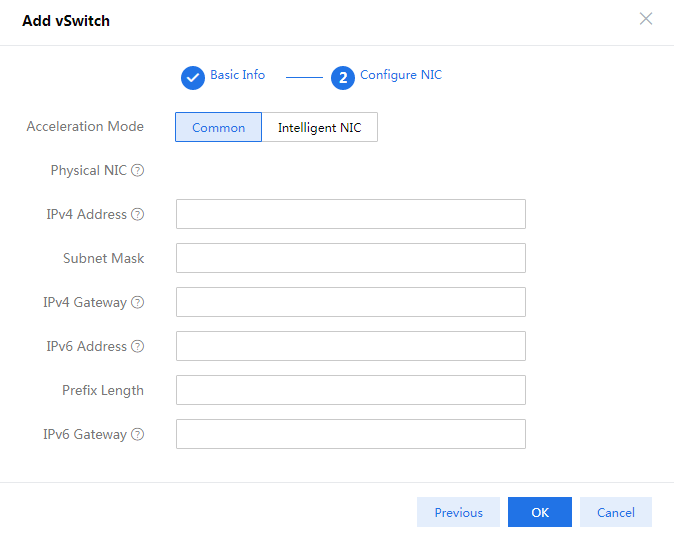

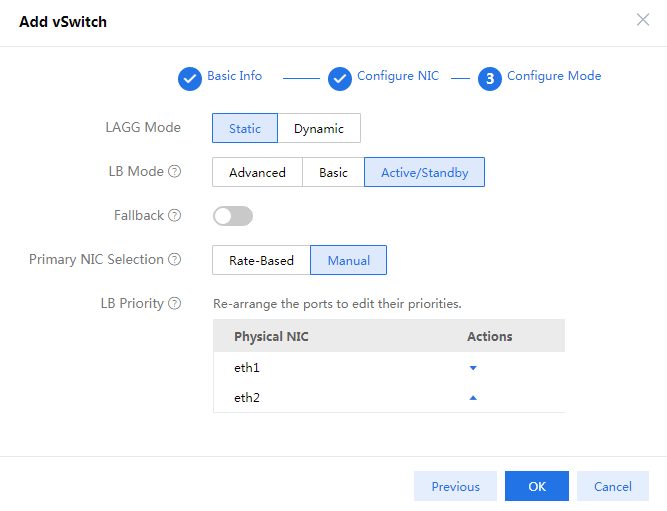

vNetwork configuration procedures

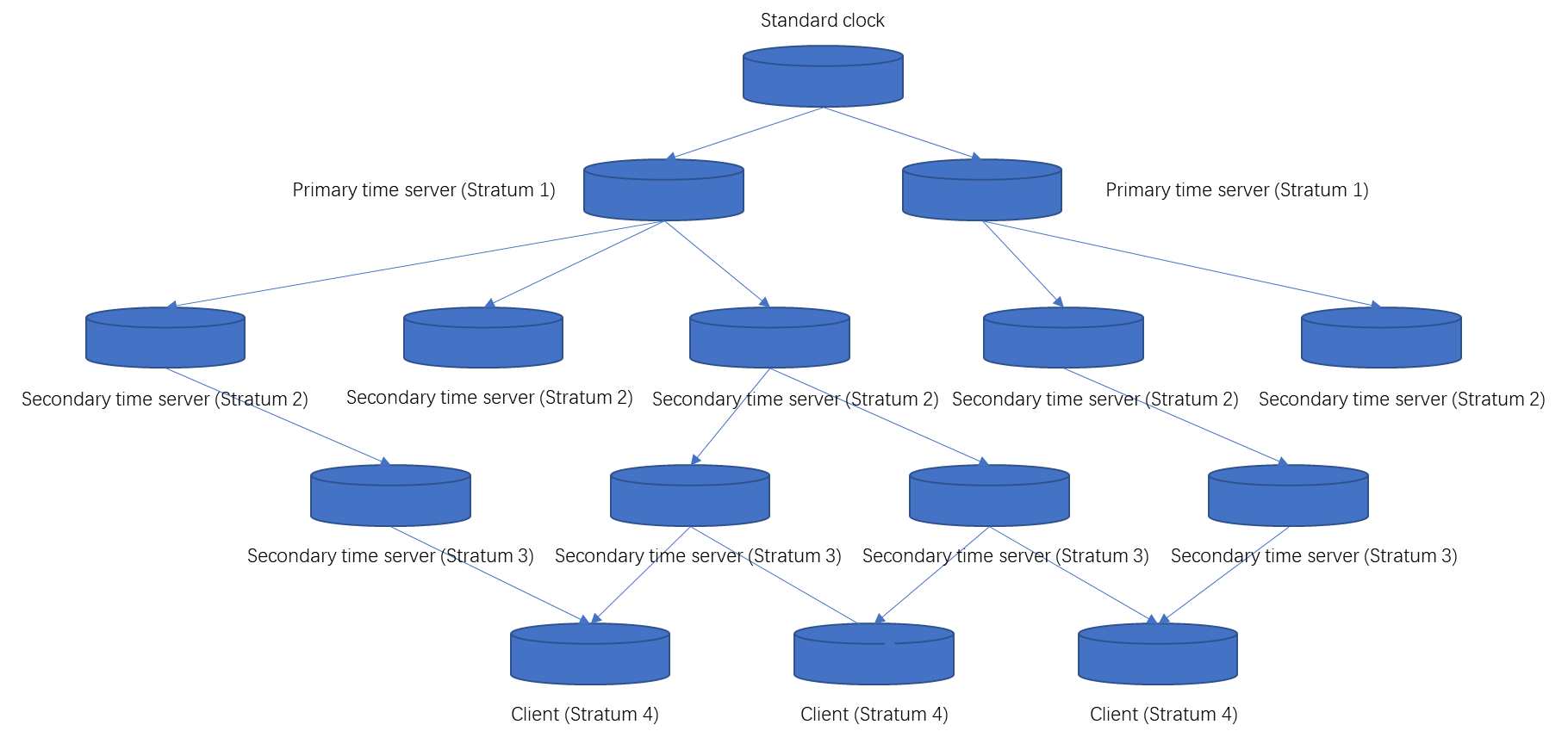

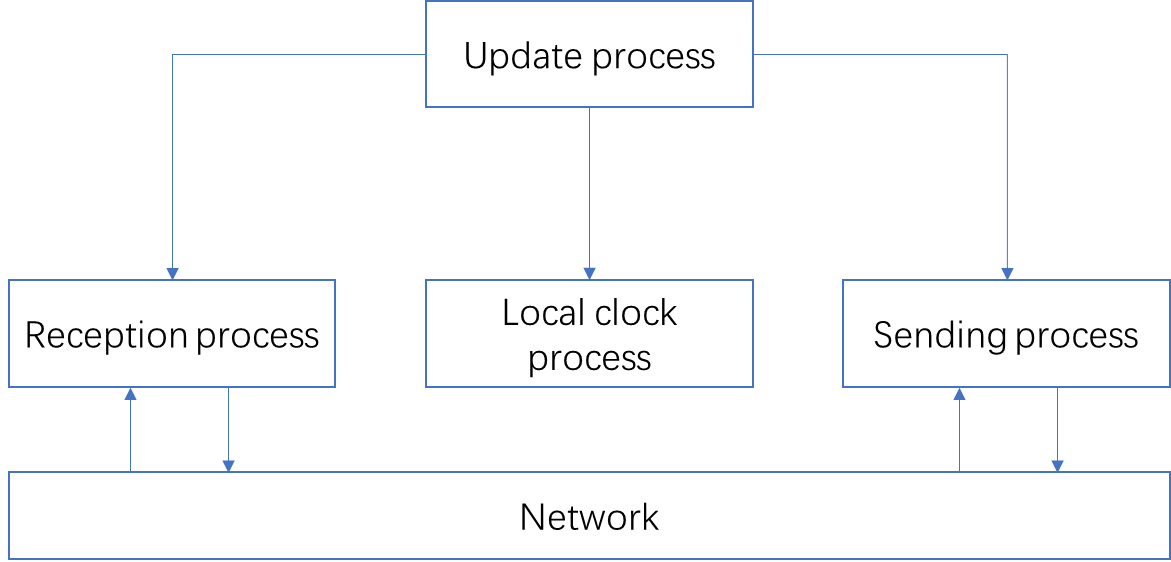

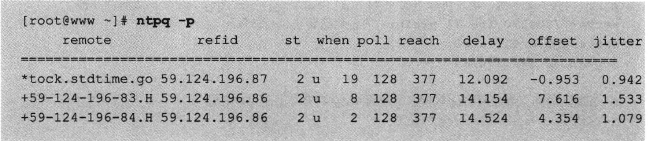

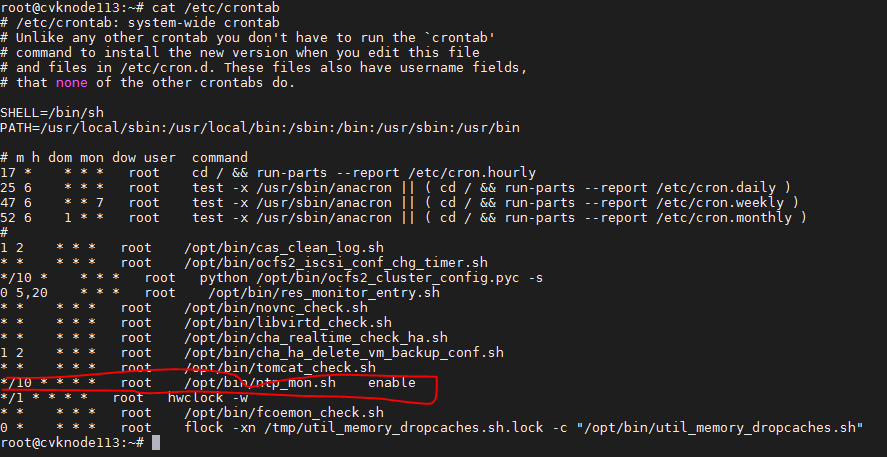

Software and software architecture for NTP

Software and configuration files

Main NTP configuration file ntp.conf

Using the restrict command for access control management

Using the server command to specify upper-stratum NTP servers

Introduction to CAS log collection

Typical scripts for issue location and analysis

Configuration cautions and guidelines

CAS configuration cautions and guidelines

Formatting a shared file system

Basic life cycle management for VMs

CAS installation and deployment

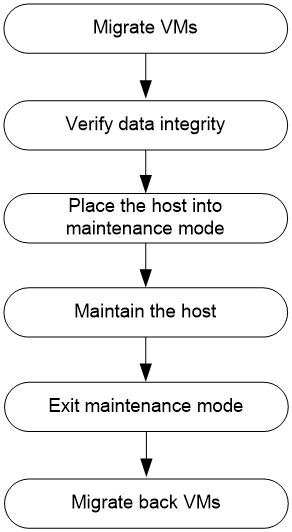

Hardware replacement for a CVK host

Replacing a CPU, memory, or disk

Changing, extending, updating, and unbinding licenses

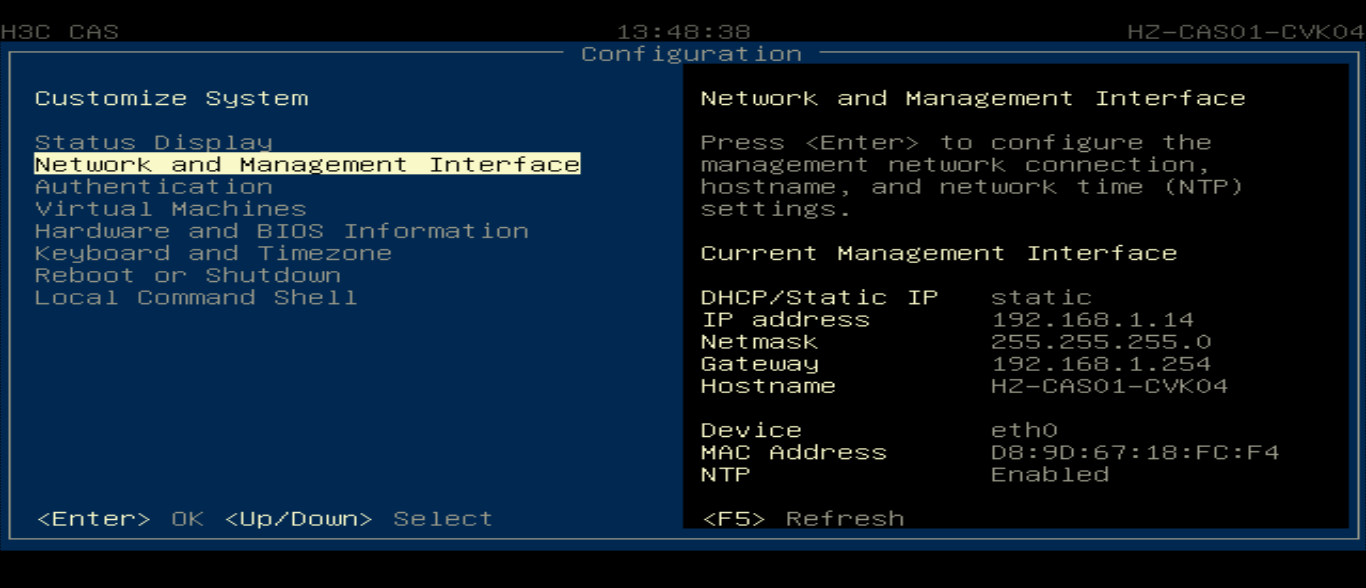

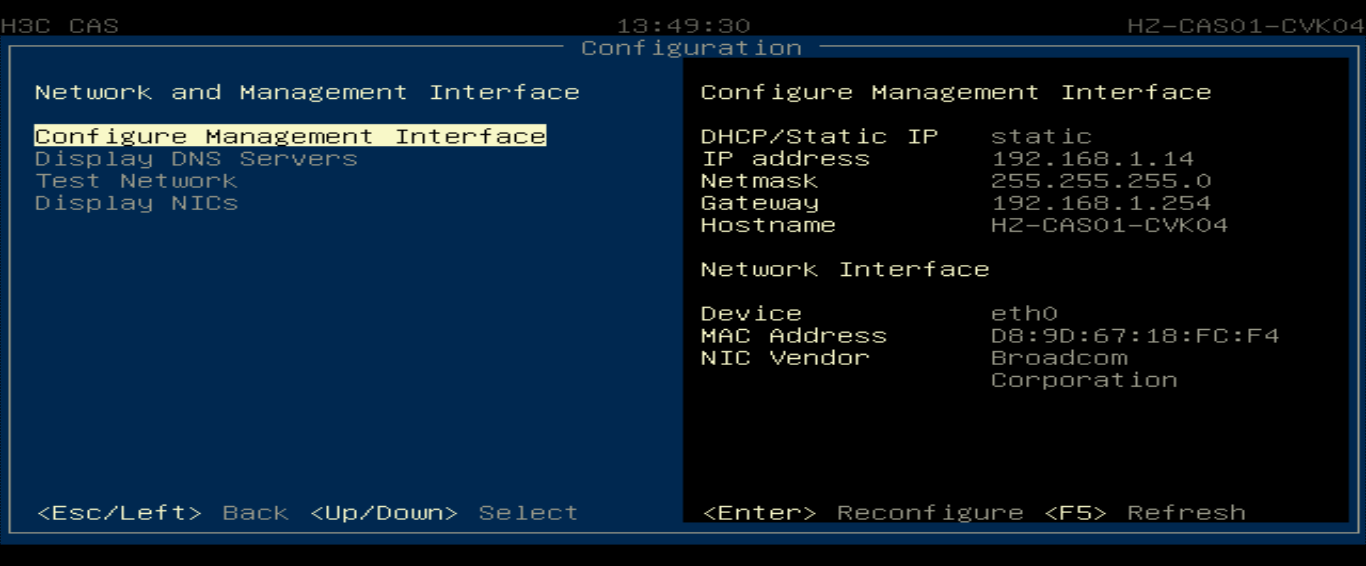

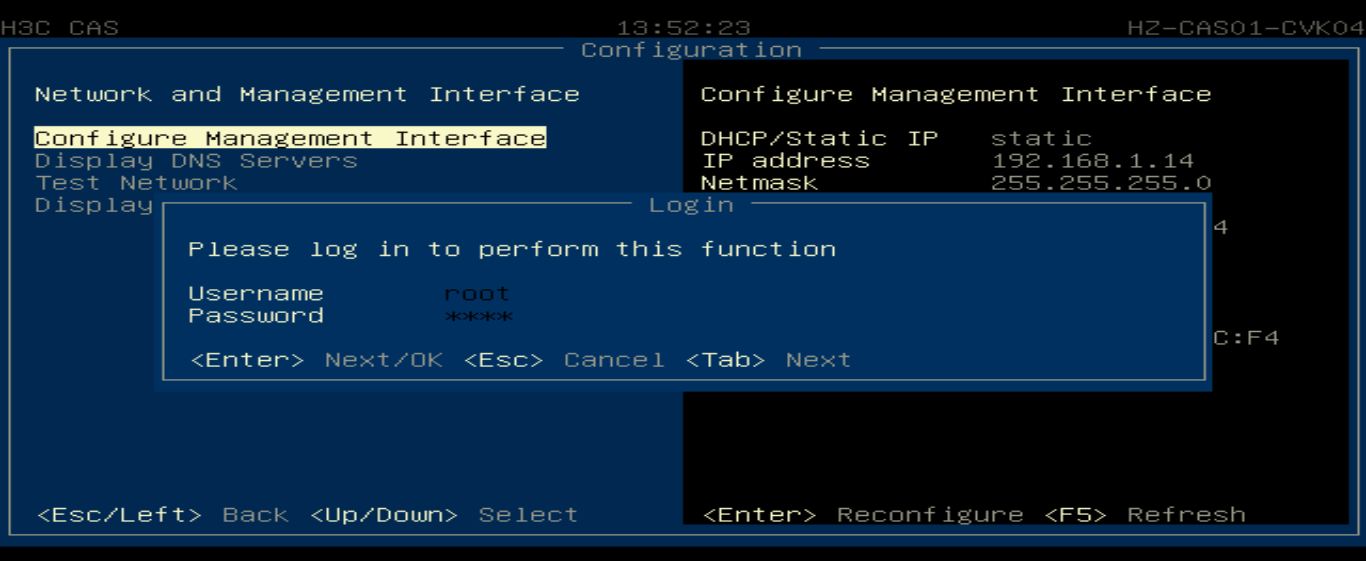

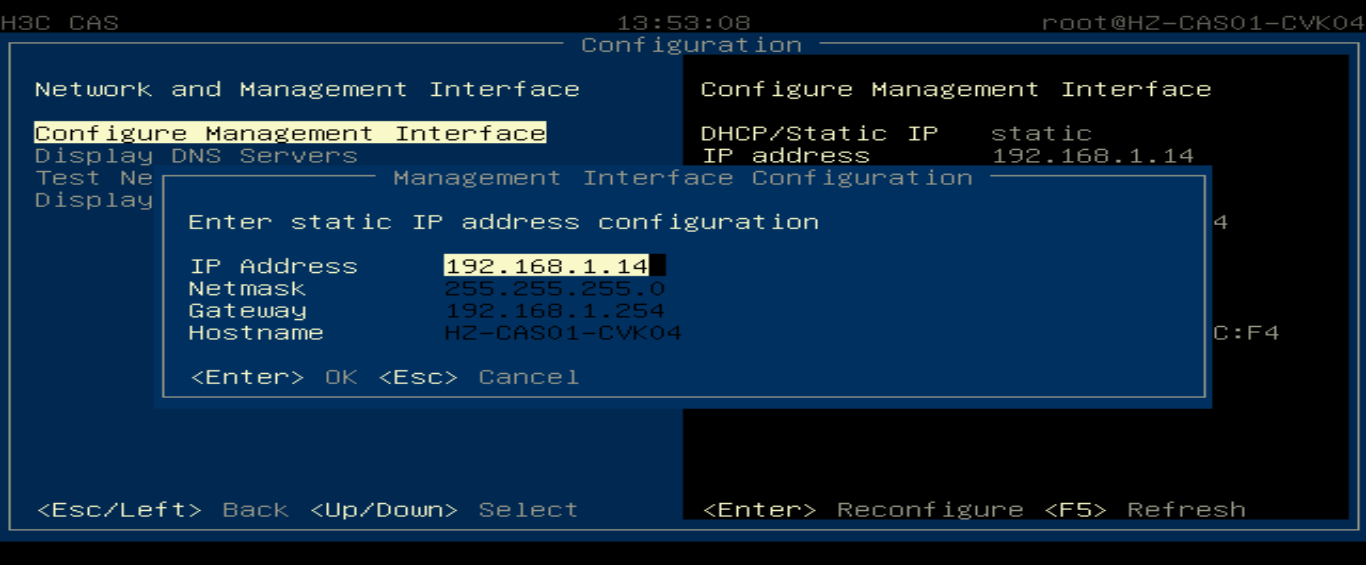

Modifying the management IP address and host name

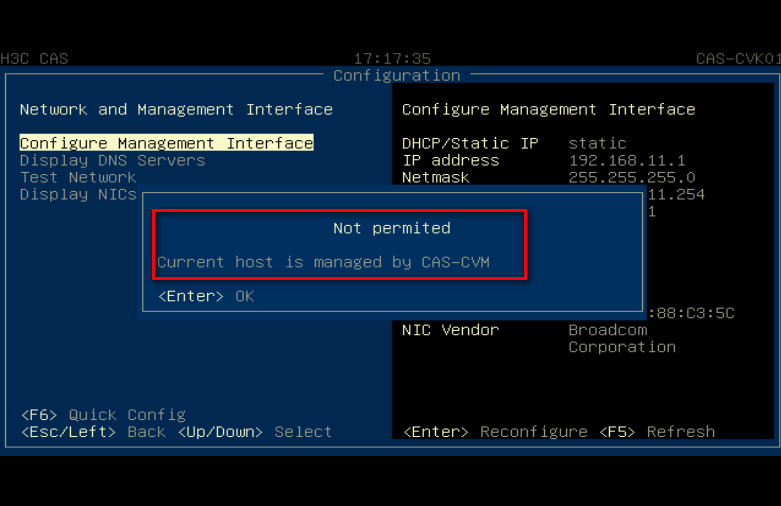

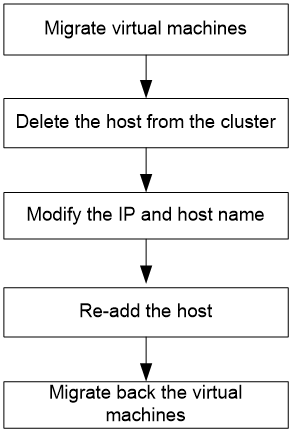

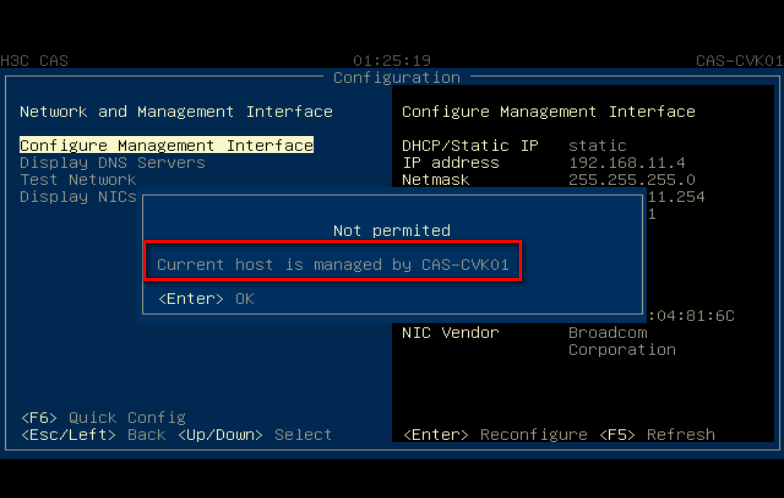

Flowchart for modifying the management IP and host name

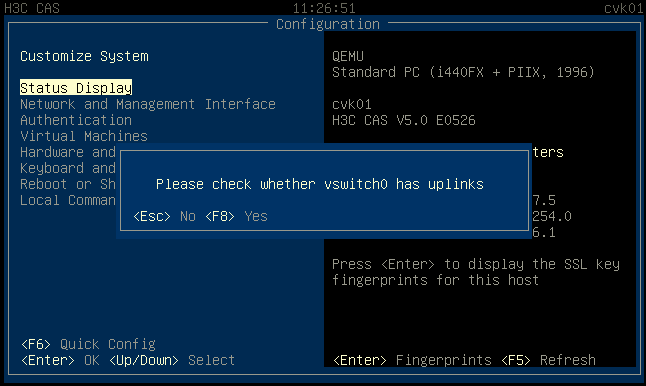

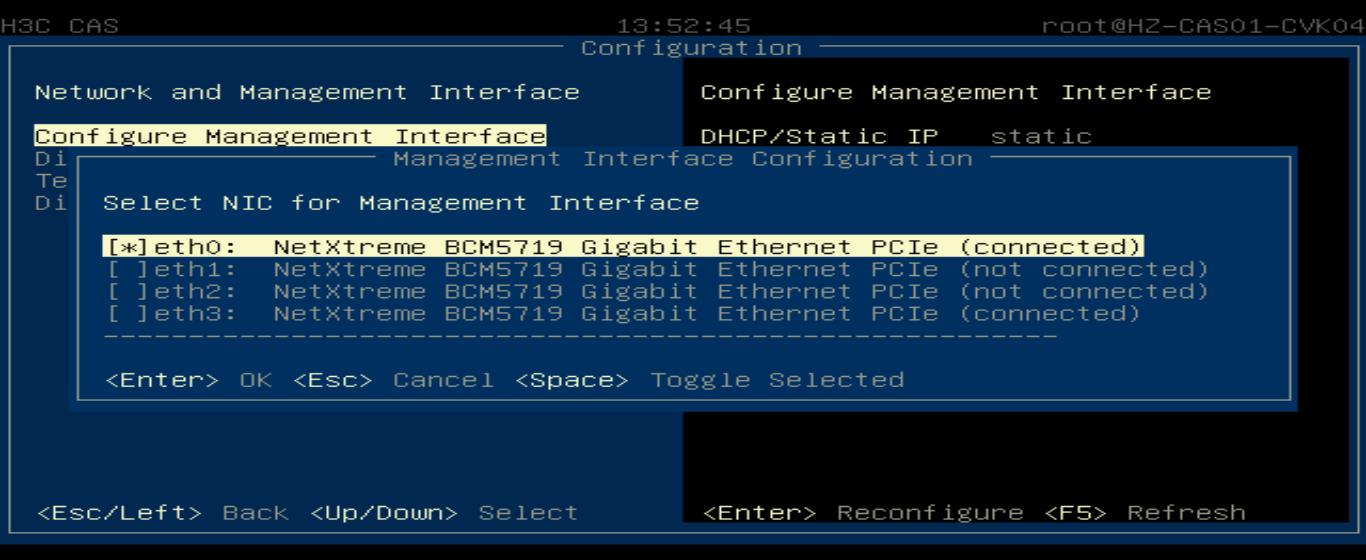

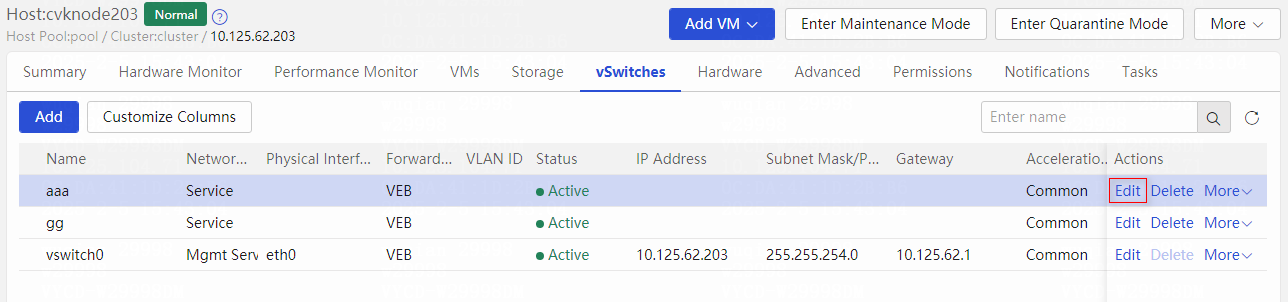

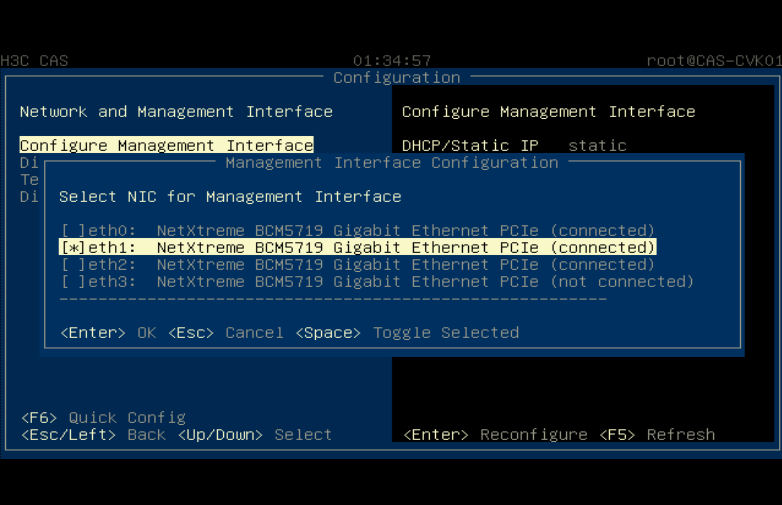

Changing the physical interface bound to the management vSwitch

Changing the physical interface bound to the management vSwitch from the CAS Web interface

Changing the physical interface bound to the management vSwitch from the Xconsole interface

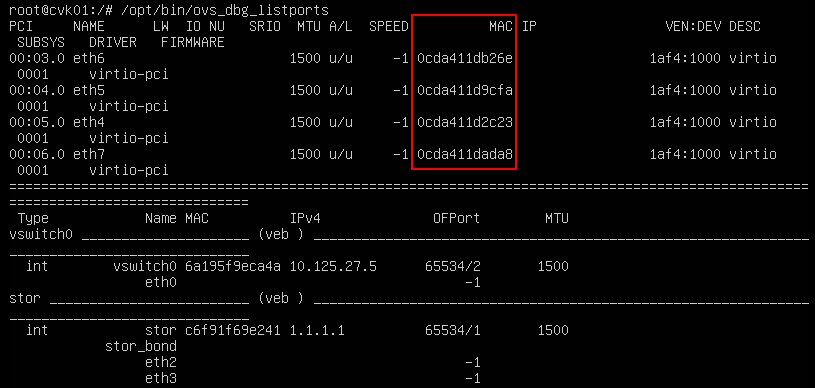

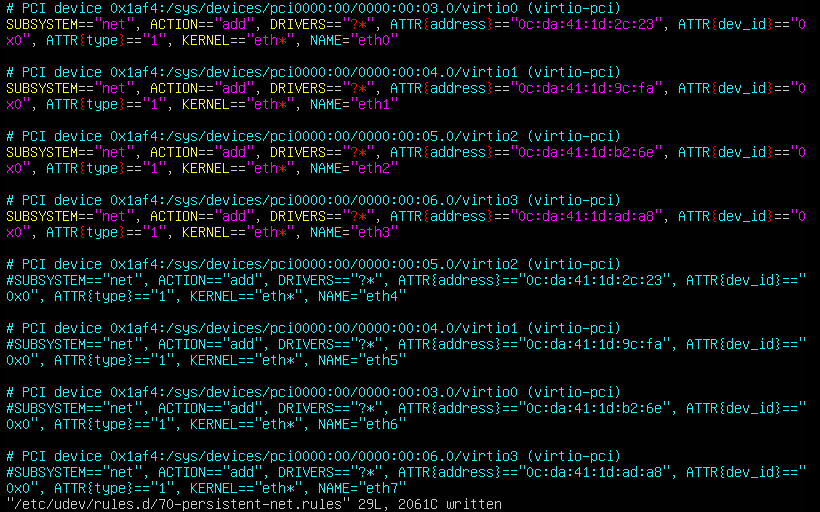

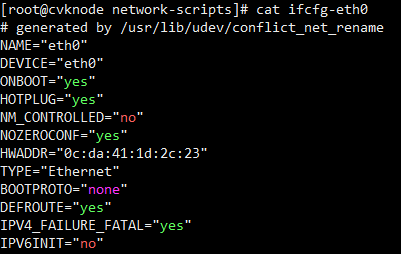

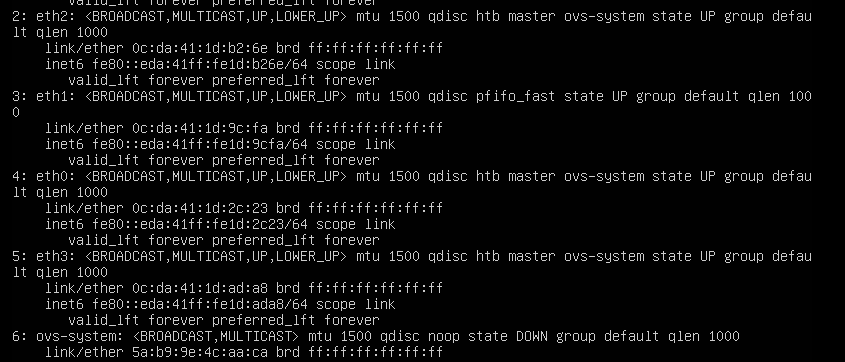

Adding a physical NIC to a CVK host

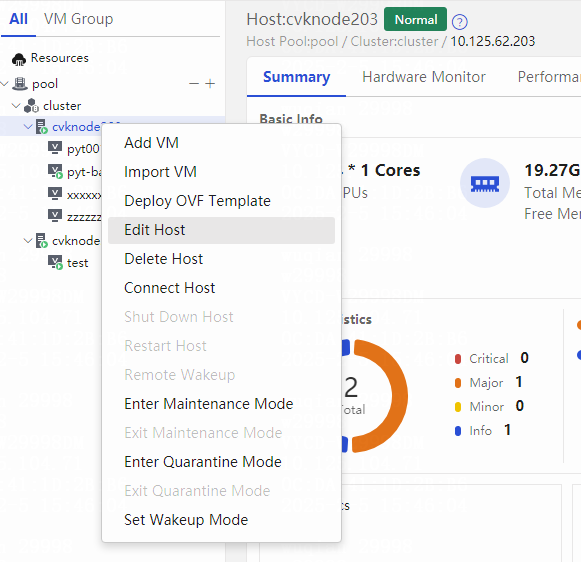

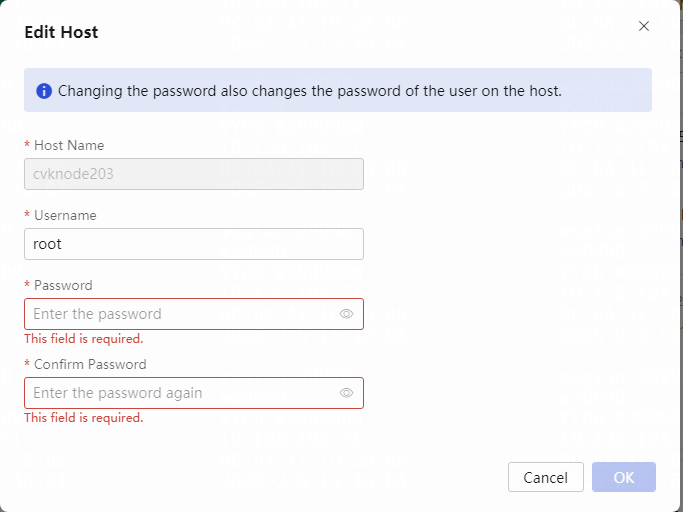

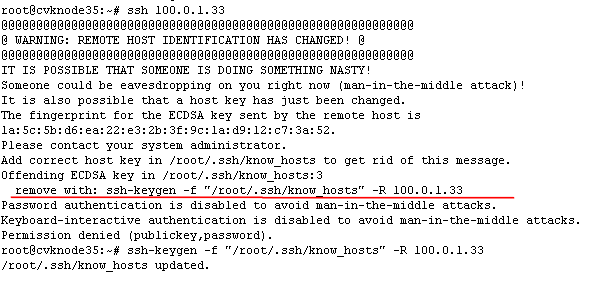

Modifying the CVK root password

Common issue analysis and troubleshooting method with logs

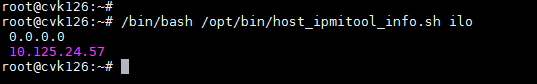

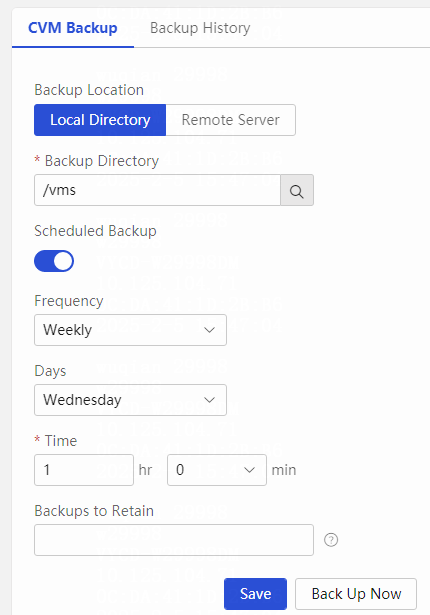

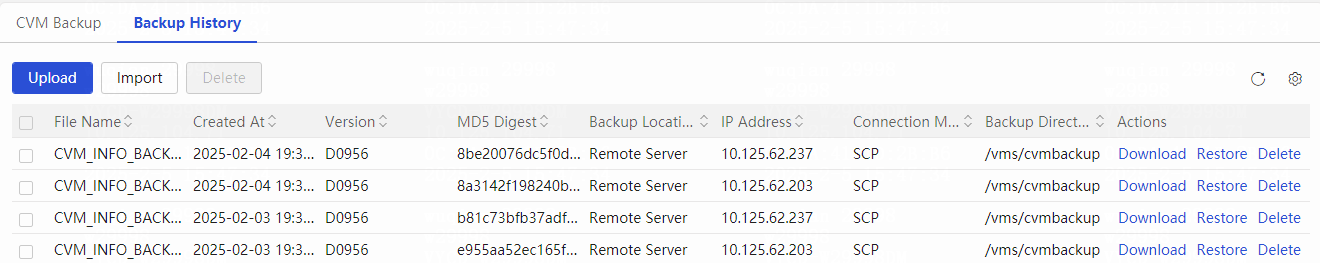

Backing up and restoring CVM configuration

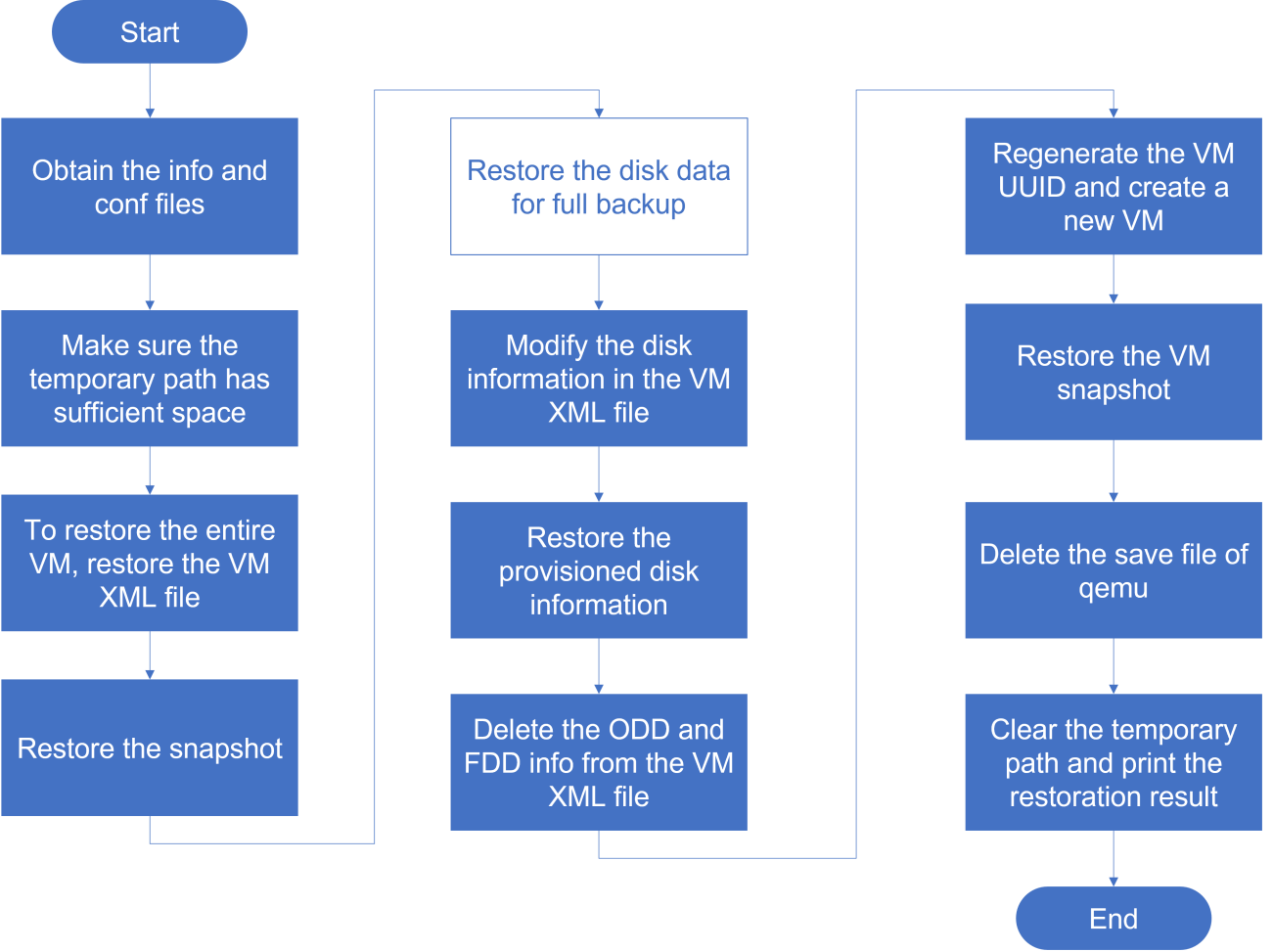

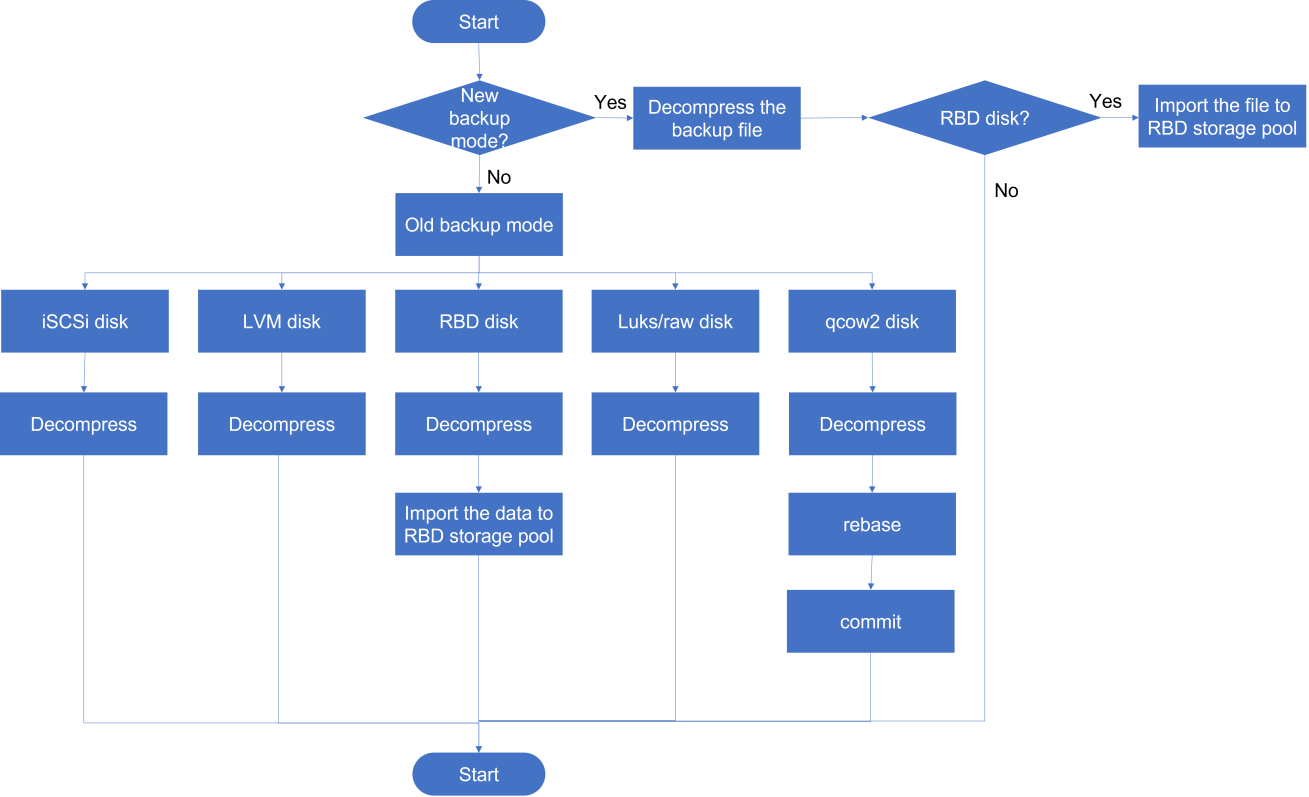

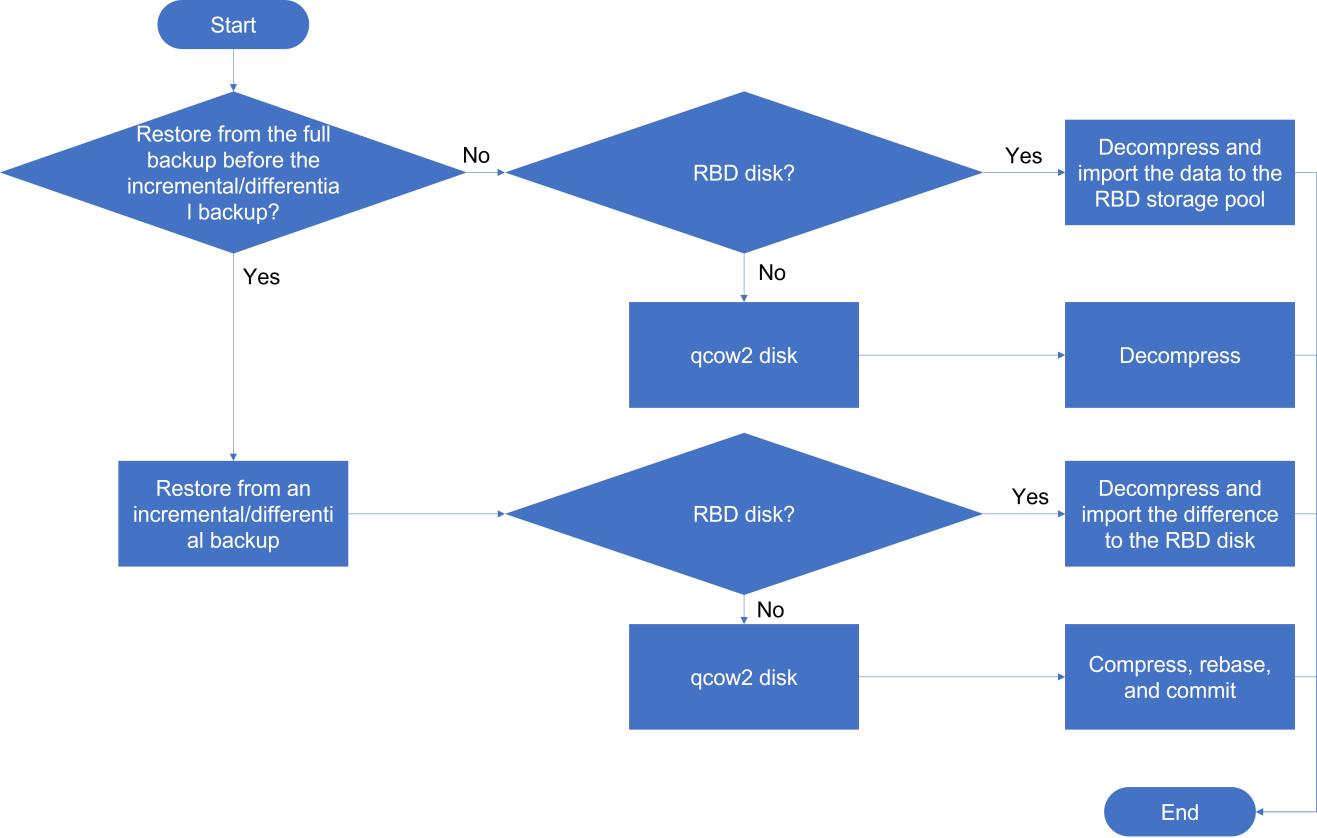

Restoring the CVM configuration

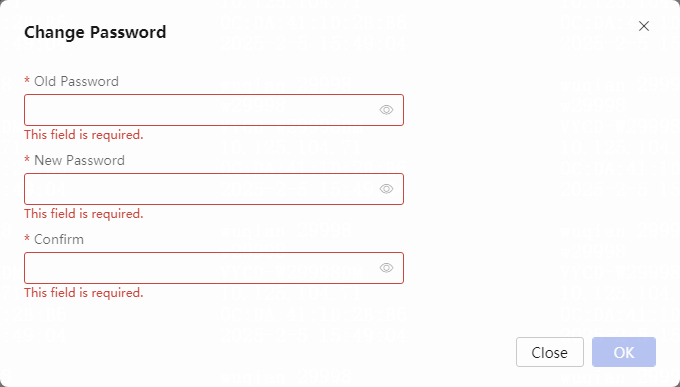

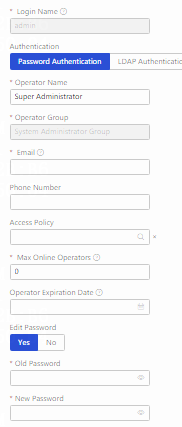

Changing the default admin password

Common issue analysis and troubleshooting method with logs

Managing logs of a Windows operating system

Collecting logs of a Windows operating system

Viewing logs of a Windows operating system

Troubleshooting tools and utilities for CVK host issues

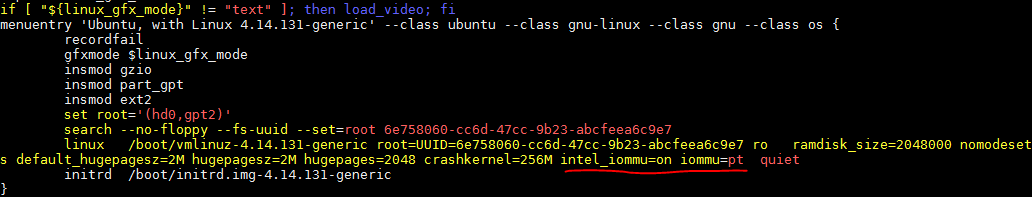

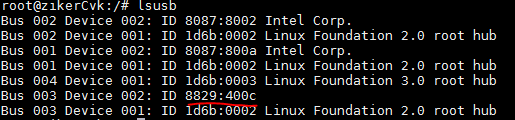

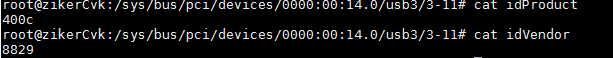

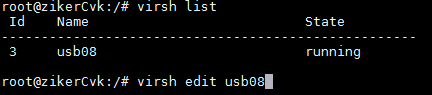

Installation being stuck at 21% for a long time

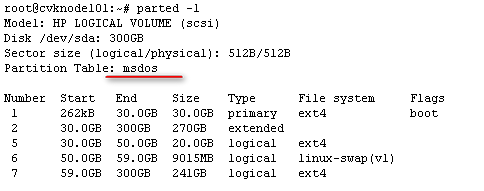

Cannot discover disks during system installation

Black screen or being stuck during system installation

System functions missing after installation

Analysis and detection of blocking issues in OCFS2

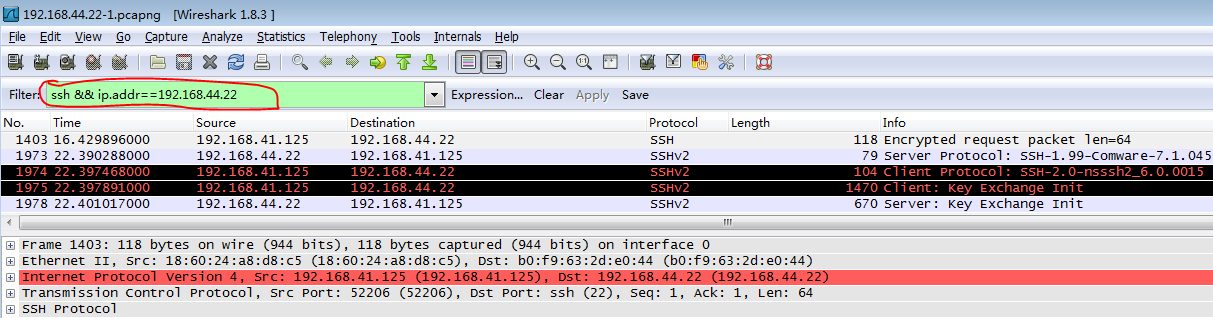

Troubleshooting network issues and capturing packets

A packet capture tool in Windows environment

A packet capture tool in Linux environment

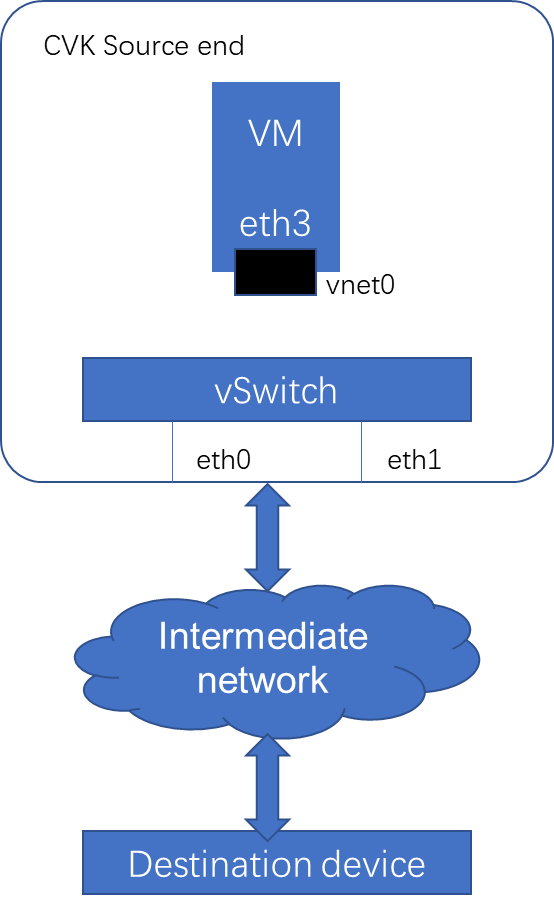

Introduction to packet processing in CAS environment

Troubleshooting common stateful failover issues

Common inspection items for stateful failover

Common stateful failover issues

CVM login failure because of prolonged external storage error

A VM migration task gets stuck at 95% when you migrate a VM by changing the host and storage

AsiaInfo/360 compatibility issues

Checking the running status of AsiaInfo on the CAS system

Checking the running status of 360 on the CAS system (CAS 7.0 is incompatible with 360)

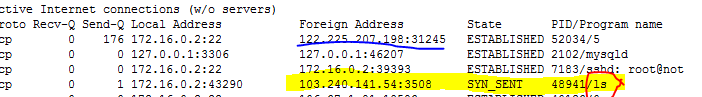

Common host and VM infection treatment

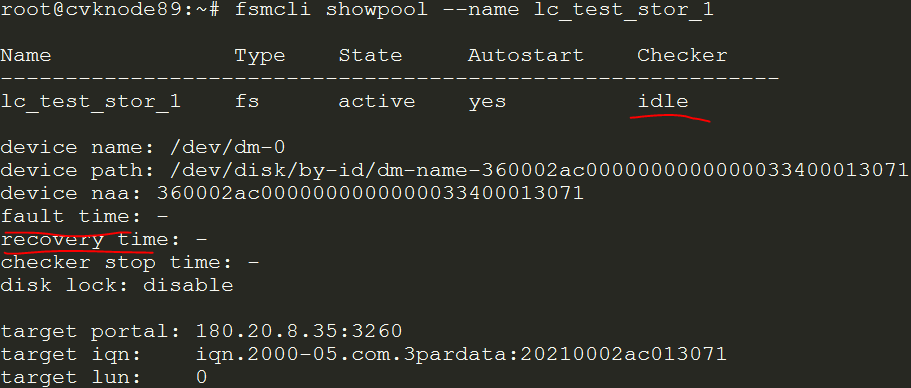

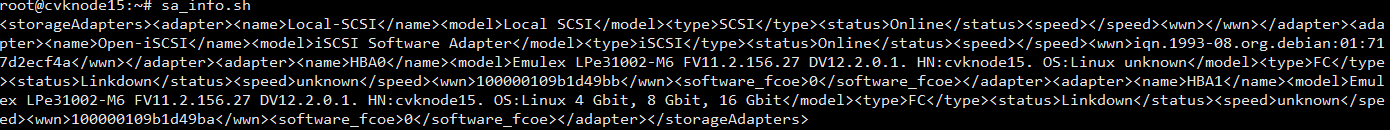

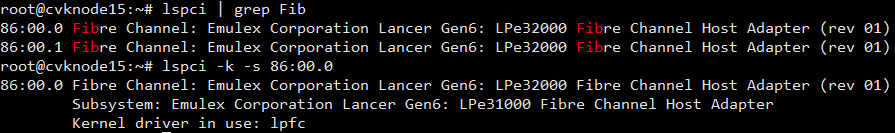

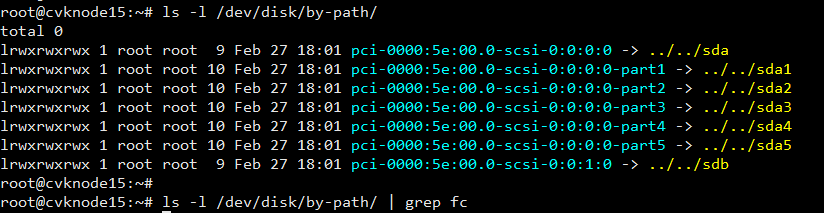

Storage link and multipath issues

Common VNC configuration issues

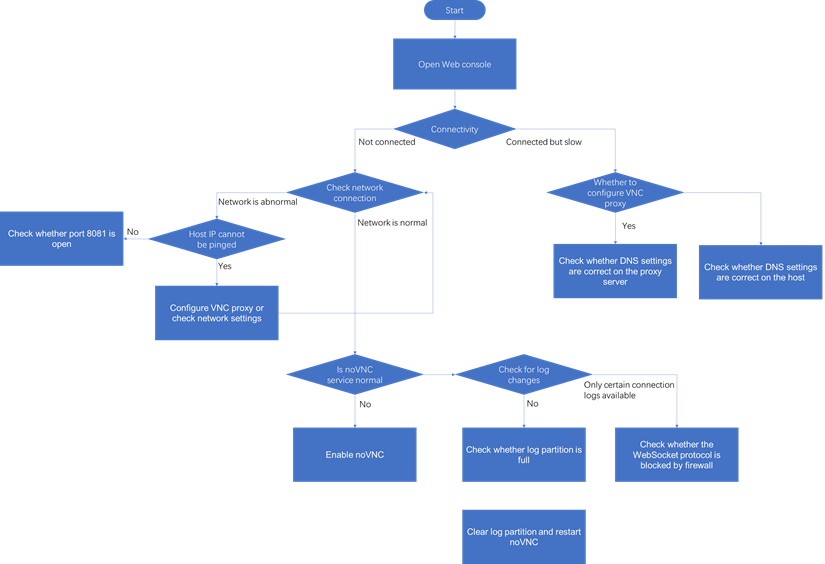

Common troubleshooting workflow

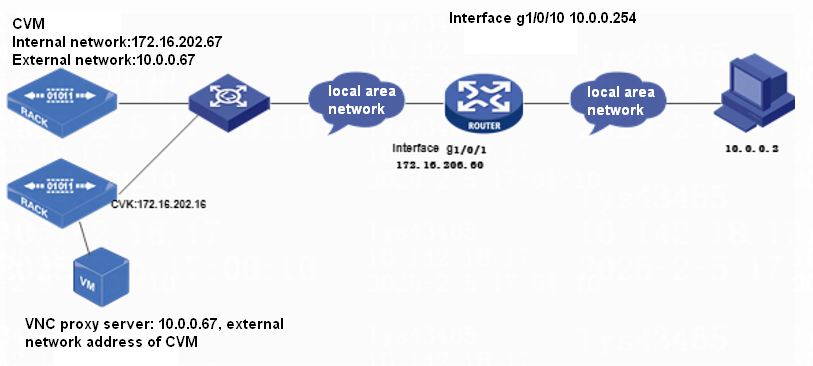

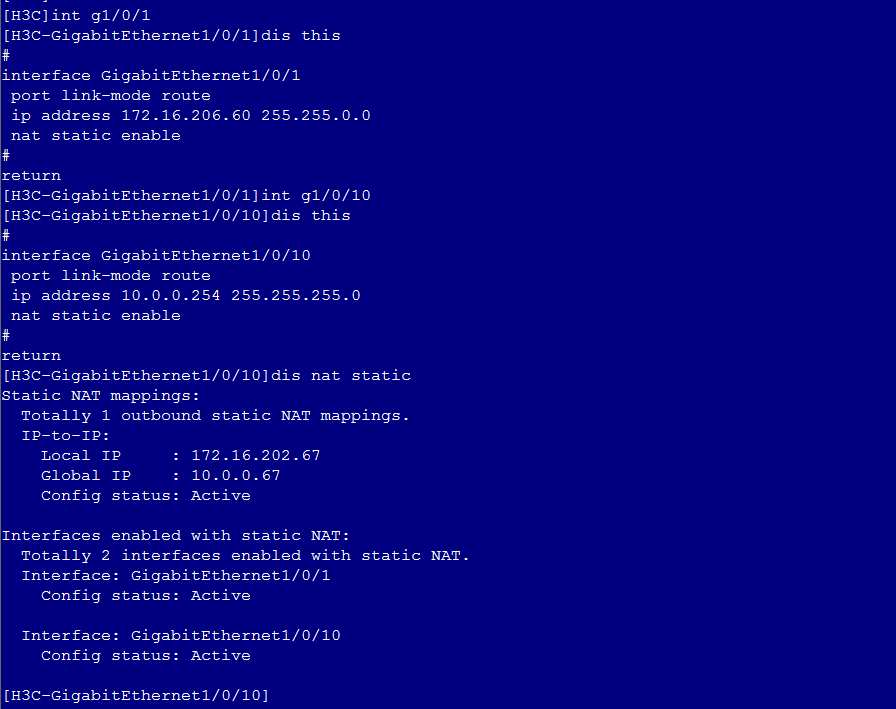

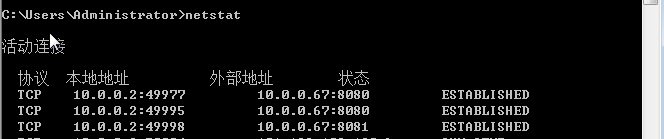

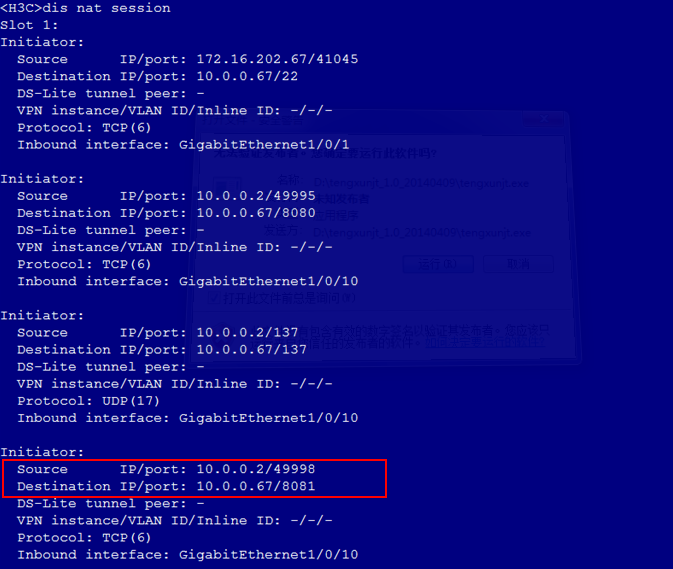

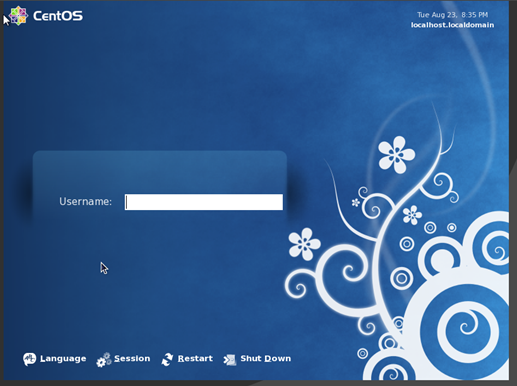

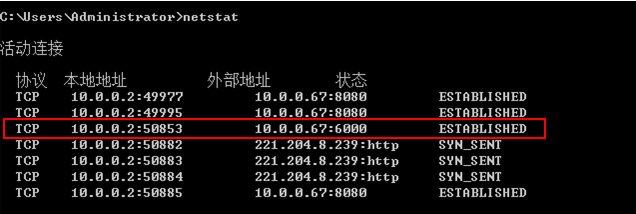

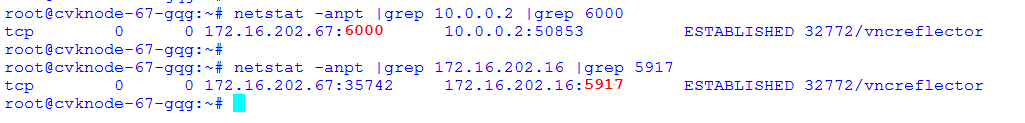

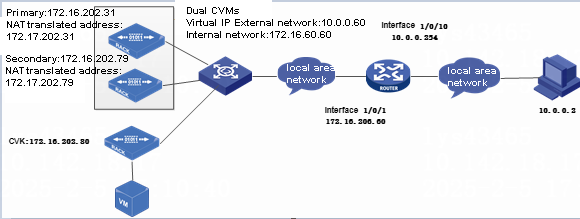

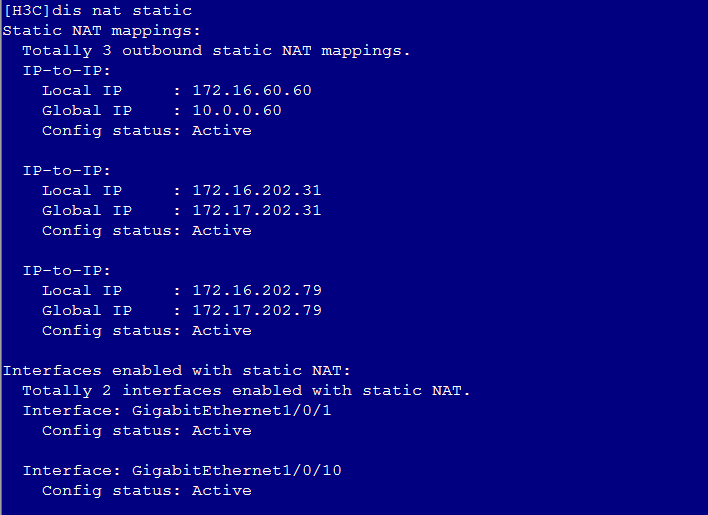

Common VNC configuration issues in the NAT environment

Configuration procedure in the single CVM environment

Handling common issues with plug-ins

Enabling CAStools and qemu-ga debug logging

Full initialization and quick initialization

Partially successful VM deployment (error code: 7501)

CAStools (qemu-ga) installation and upgrade failure

VM and CAS communication failure

Performance monitoring data anomaly

Windows CAStools (qemu-ga) domain joining issues

Anomaly on a Windows VM triggers a dump

VM initialization fails after template deployment

Failure to obtain MAC address information after login to the CAS management interface

Other common issues and operations related with CASTools

Troubleshooting common SRM issues

Overview

H3C Cloud Automation System (CAS) is a resource management platform developed by H3C for building cloud computing infrastructure. H3C CAS provides industry-leading virtualization management solutions for cloud computing infrastructure in data centers. It allows centralized management and control of the cloud computing environment through a unified management interface. Administrators can easily manage all hosts and VMs in the data center, improving control efficiency and simplifying daily work. Additionally, it reduces complexity and management costs in the IT environment.

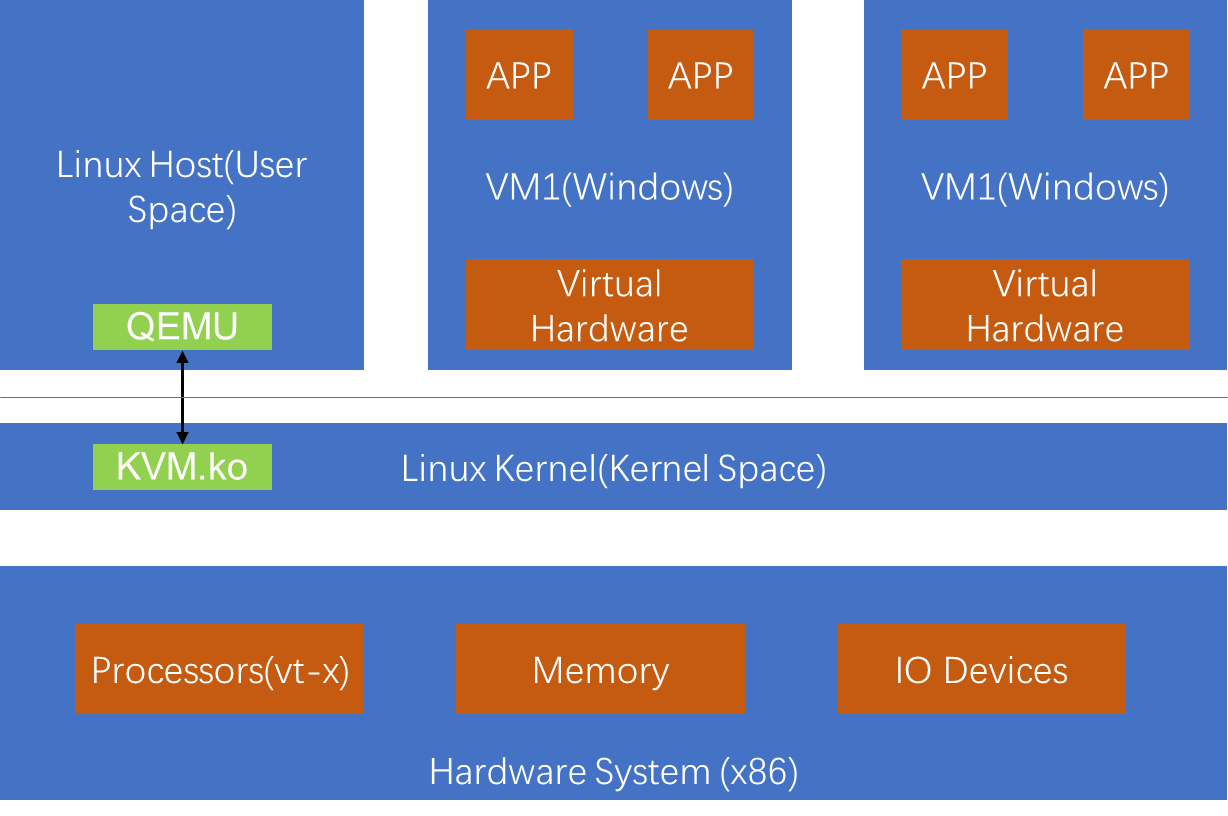

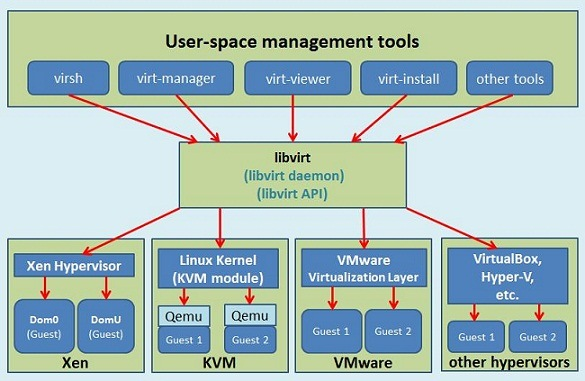

H3C CAS server virtualization products are developed based on the open-source KVM kernel virtualization technology. The kernel performance and stability are optimized for telecom-grade high-performance forwarding, high-availability, and virtualization kernel security and management. With H3C's network expertise, the products offer overlay network solutions for virtual converged framework, innovative solutions such as cloud rainbow, Dynamic Resource eXtension (DRX), and GPU pool, and SRM for disaster recovery. The products aim to provide customers with stable, secure, easy-to-use, open, and high-performance virtualization management products and solutions.

H3C CAS products have been used across various industries in a large scale due to convenient operation, continuous stability of virtualization technology, and sustained improvement of performance. The CAS virtualization scenario utilizes storage, servers, and networks to provide customers with comprehensive virtualization solutions. As the number of application sites increases, the demand is increasing for a common maintenance guide to facilitate daily operations, common configurations, and anomaly processing.

This document explains principles, common issues and solutions, command tools, and configuration risks to better support maintenance. Continuous optimization and supplementation are required to provide accurate guidance for prompt fault resolution and smooth business operation.

CAS application scenarios

The CAS cloud computing management platform is a software suite launched by H3C for building cloud computing solutions. For customers who want to achieve centralized unified management of data centers, integrating existing IT facilities with virtualization and cloud operation management solutions is their best choice. It can perfectly cover the basic application areas of virtualization and cloud computing.

· Facility integration for servers

· Centralized and unified management of computing, network, and storage resources

· High availability and dynamic resource scheduling ensure continuous operation of data center services

· Fast migration and backup of VMs

· Multi-tenant security isolation

· User self-service portal

· Cloud service workflow

· Ensured cloud interoperability through open APIs

Product system structure

CAS contains the following components:

· Cloud Virtualization Kernel (CVK)

The virtualization kernel software that runs between the underlying facility layer and the upper customer operating system layer. CVK is used to shield the differences between underlying heterogeneous hardware and eliminate the OS's dependency on hardware and drivers. It enhances hardware compatibility, high availability, scalability, performance, and reliability in virtualization environments.

· Cloud Virtualization Manager (CVM)

Aims to implement software virtualization management for hardware resources such as computing, networking, and storage within the data center and provide automated services to upper-layer applications. The business scope includes virtual computing, virtual networking, virtual storage, high availability (HA), dynamic resource scheduling (DRS), VM disaster recovery and backup, VM template management, cluster file system, and virtual switch policy.

· Cloud Intelligence Center (CIC)

Contains a series of cloud-based modules. It integrates infrastructure resources, including computing, storage, and networking, and related policies into a resource pool, and allows users to consume resources on-demand to construct a secure, multi-tenant hybrid cloud. The business scope includes: organizations (virtual data centers), multi-tenant data and service security, cloud service workflows, self-service portal, and OpenStack-compatible REST APIs.

System maintenance check on CAS

To ensure stable operation of the CAS system, maintenance work is required. The main functions include viewing alarms, operation logs, clusters, hosts, VMs, packages, licenses, and logs.

Checking the running status of CAS

Log in to the management interface of CAS and check the overall system's health and running state. You must clear alarms and resolve system issues in time to maintain the correct operation of the system.

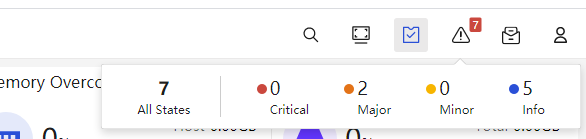

Checking alarms

After logging into CAS management homepage, the top right corner of the page displays the CAS system's alarm indicators, which include Critical, Major, Minor, and Info indications.

If the critical and major indication lights display an alarm message, it indicates that a system error is present. You must quickly identify and solve the problem.

To view real-time alarms, click the corresponding alarm indicator, or access Alarm Management > Alarms.

Troubleshoot problems based on the alarm source, type, alarm message, and alarm time on the real-time alarm page.

Log in to the CAS management console, select System, and view the administrator's operation logs on the Operation Logs page.

The operation log section allows viewing, downloading, and clearing operations. The operations have no risk to running services.

Viewing clusters

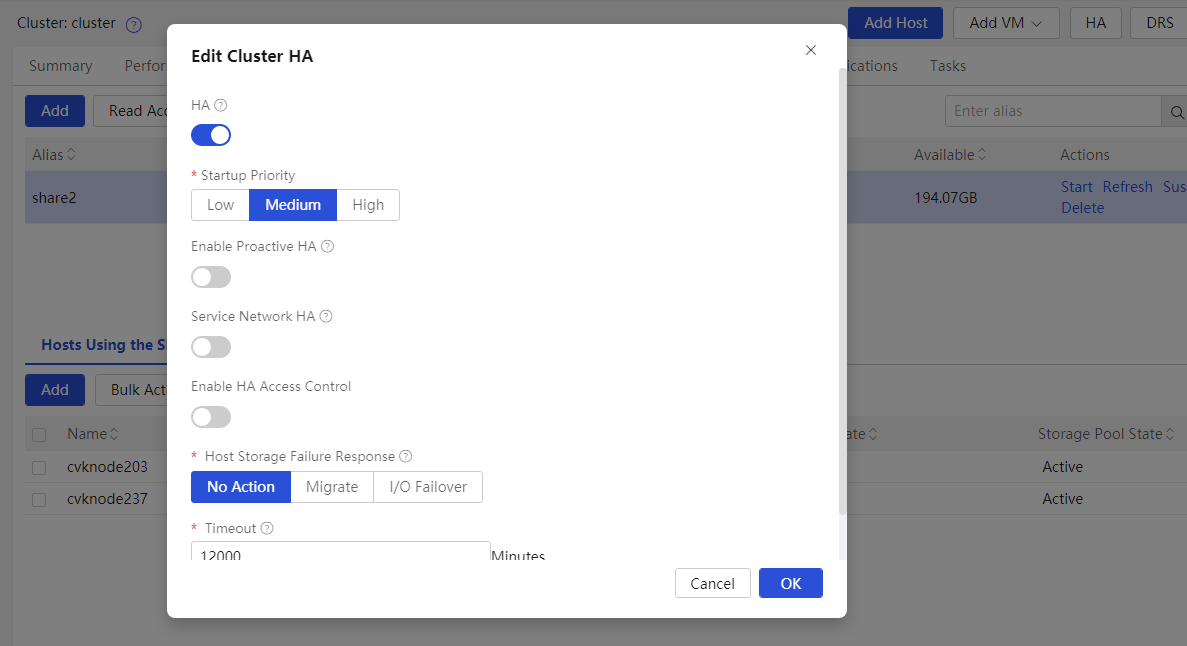

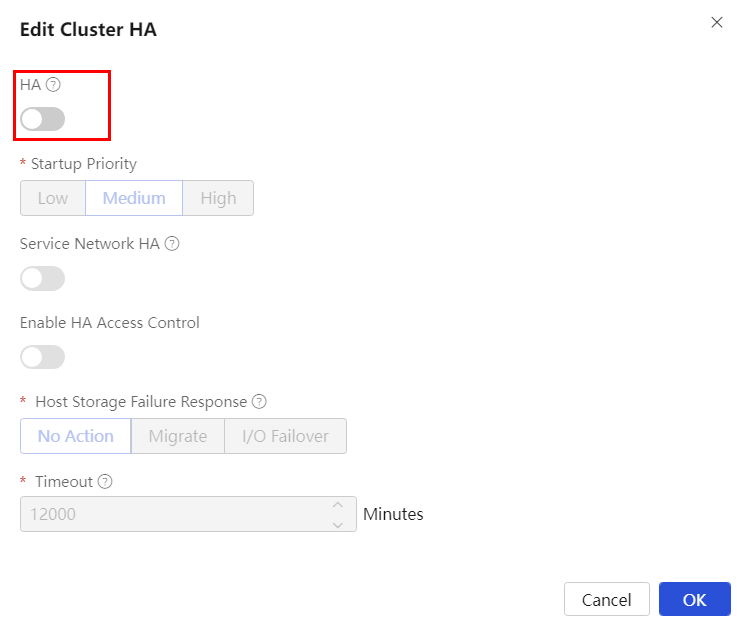

Viewing the high-availability functions of the cluster

High availability (HA) allows the system to automatically migrate VMs from a faulty CVK to another in the cluster, minimizing downtime and out-of-service time.

Before configuring the service, validate if the cluster is enabled with HA. Without HA, VMs on a faulty CVK host cannot be migrated to another CVK host in the same cluster.

Select the clusters to be managed, click HA, and confirm whether to enable HA.

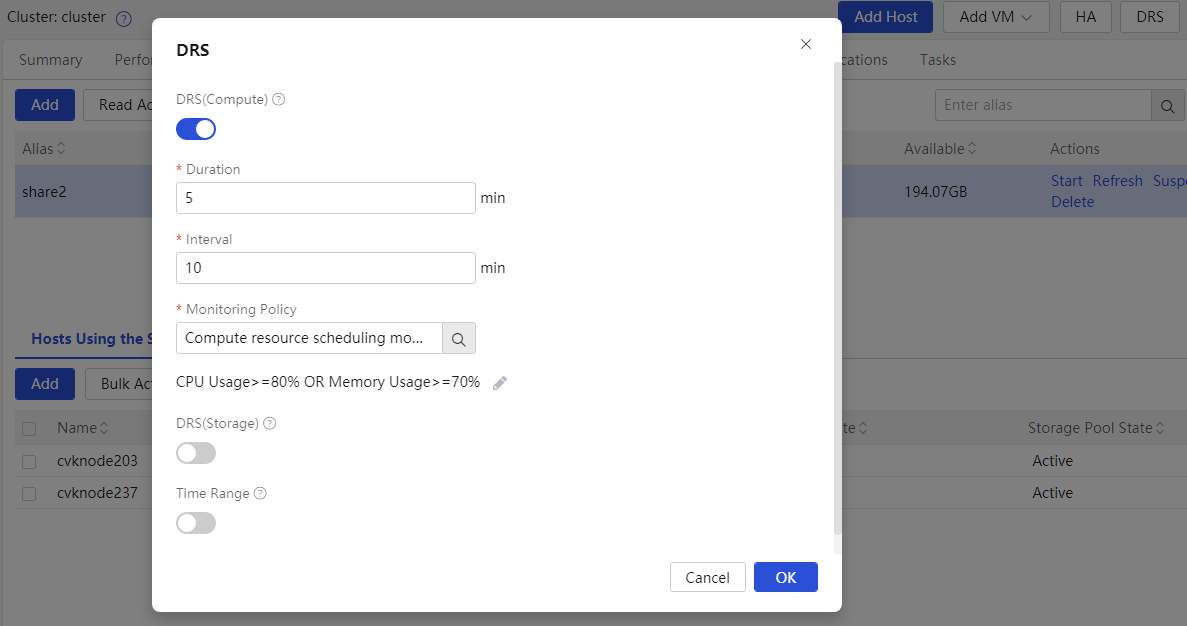

Viewing dynamic resource scheduling of a cluster

Validate if the cluster is enabled with computing resource DRS. If the feature is disabled, when a CVK host is overloaded in the cluster, VMs on the host cannot be migrated to other idle CVK hosts in the same cluster.

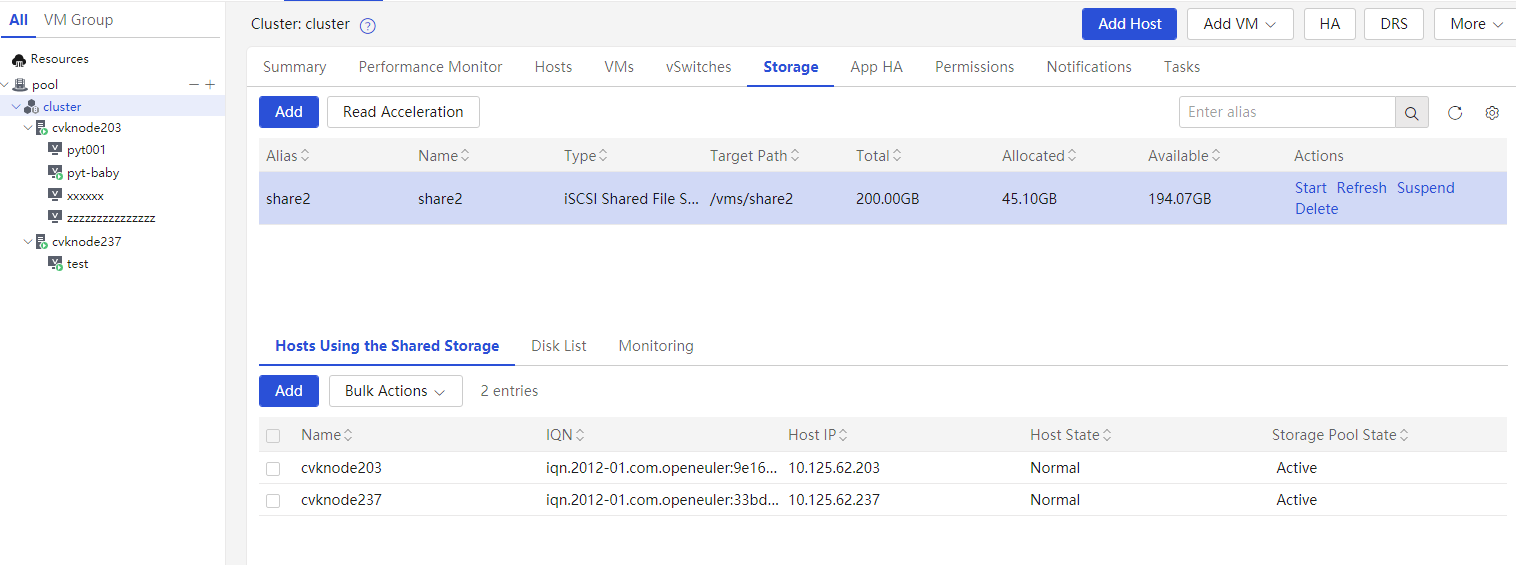

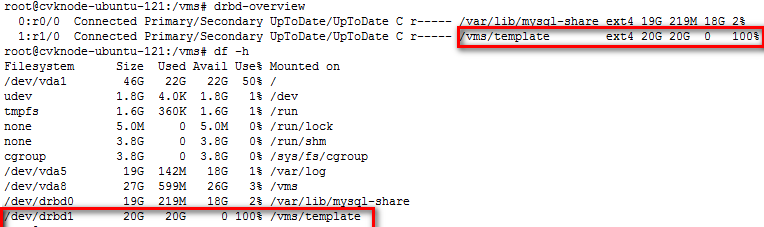

Viewing shared storage in a cluster

Verify if the shared storage on the Storage page of the cluster is being used by all hosts in the cluster. In a cluster, isolate mounted storage as a best practice. Use different LUNs for different clusters to improve security and accessibility.

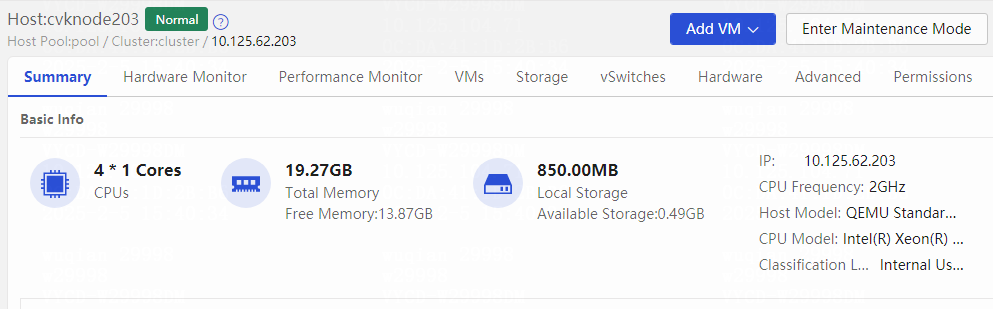

Viewing hosts

Viewing host status

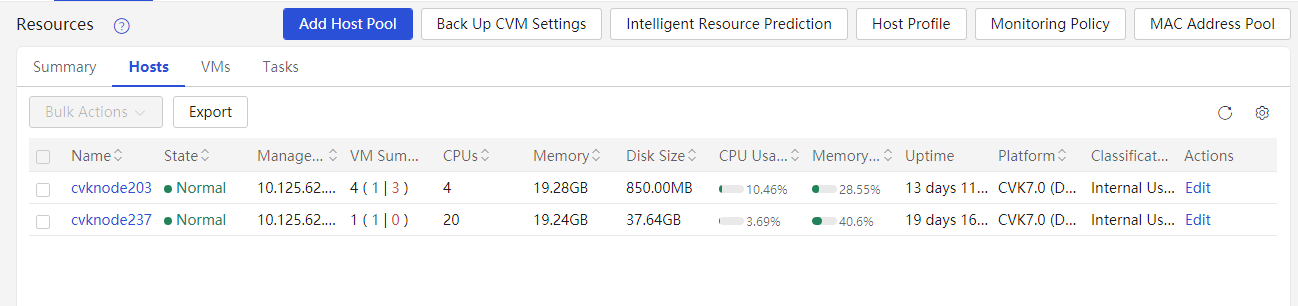

From the left navigation pane, select Resources. Click the Hosts tab, view all the managed hosts, and verify if abnormal hosts exist.

Check the CPU and memory usage of each host and make sure they are normal. Extra attention is required if the usage exceeds 80%.

Viewing the host run time

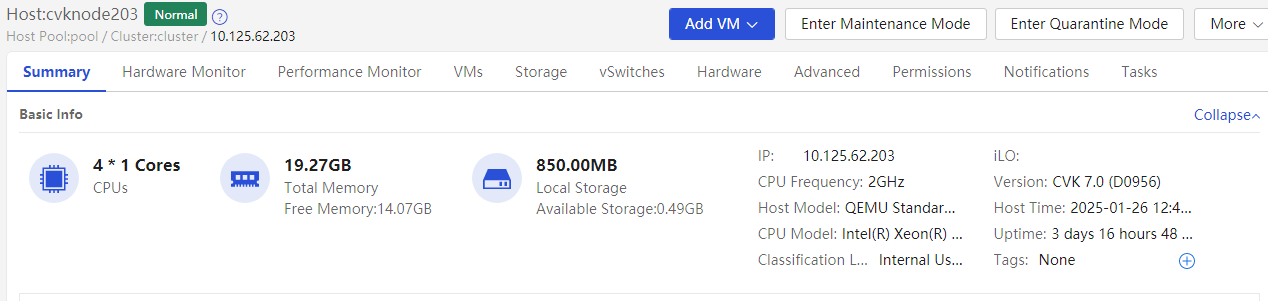

You can view the detailed profile of the host on the Summary page of the CVK host. By checking the run time, you can confirm whether the host has been restarted recently. You can also use the Uptime command at the CVK backend to view the run time.

Viewing host performance monitoring

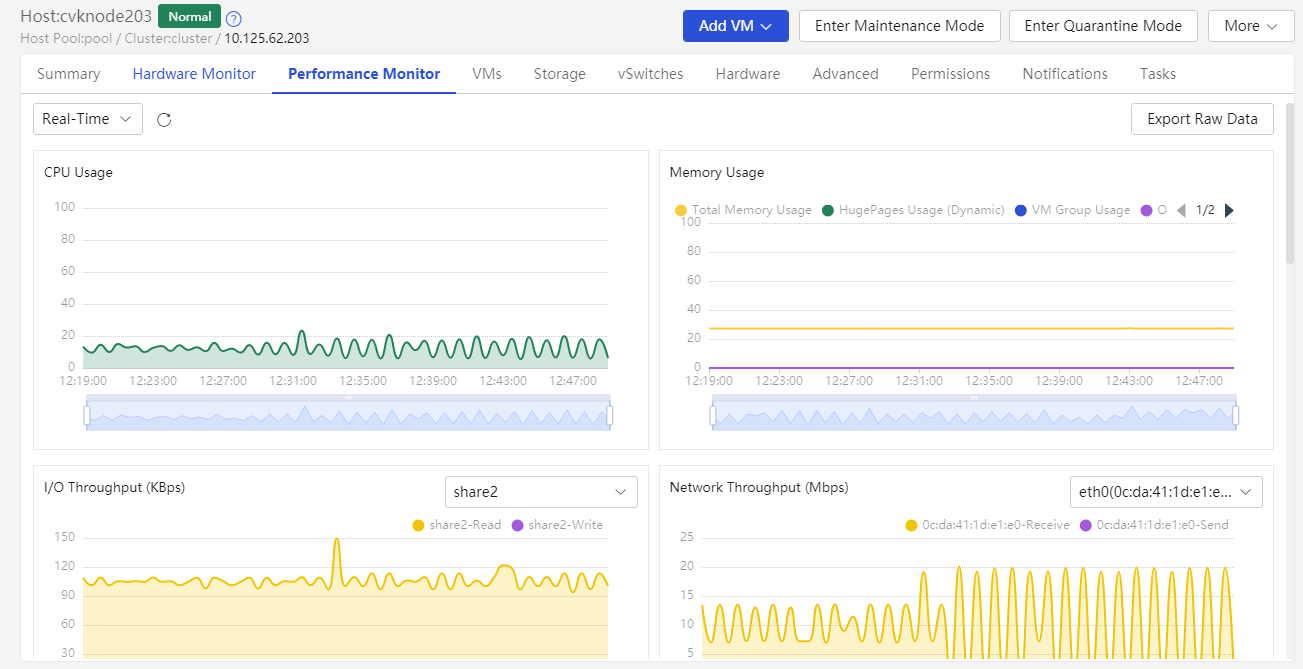

You can view the CPU usage, memory usage, I/O throughput, network throughput, disk usage, and partition usage information of a host on the Performance Monitor page of a CVK host.

1. View the CPU usage of a host.

To view CPU usage information for a longer time-range, access the Performance Monitor page, click the ... button in the CPU Usage section.

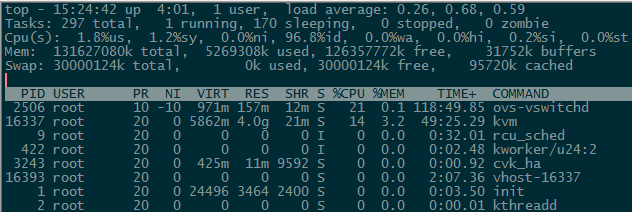

You can also use the top command at the CVK backend to view the CPU usage in real time.

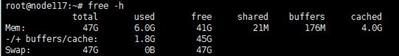

2. View the memory usage of a host.

To view memory usage information for a longer time-range, access the Performance Monitor page, click the ... button in the Memory Usage section.

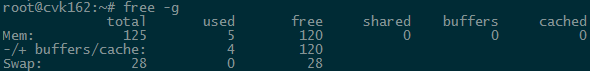

You can also use the free command at the CVK backend to monitor the real-time memory usage. High usage of the Swap partition severely impacts the host performance and can result in automatic shutdown of VMs.

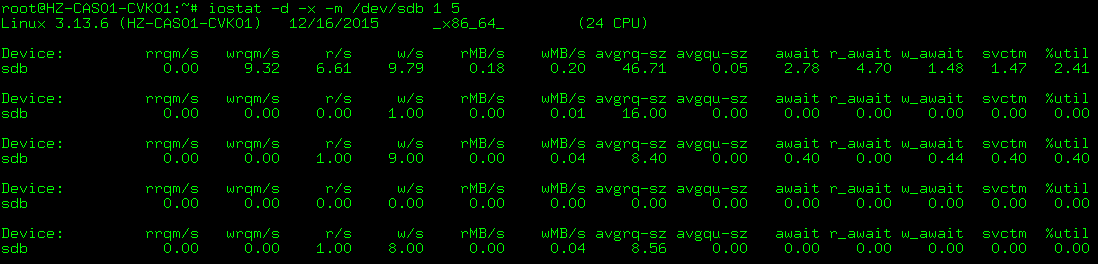

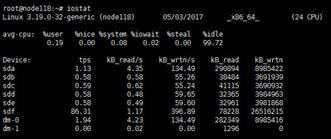

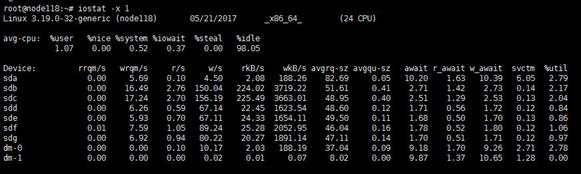

3. Check the I/O throughput of a host.

On the Performance Monitor page, click I/O Throughput to view host disk I/O information. Click the ... button to view I/O throughput information over a longer time range.

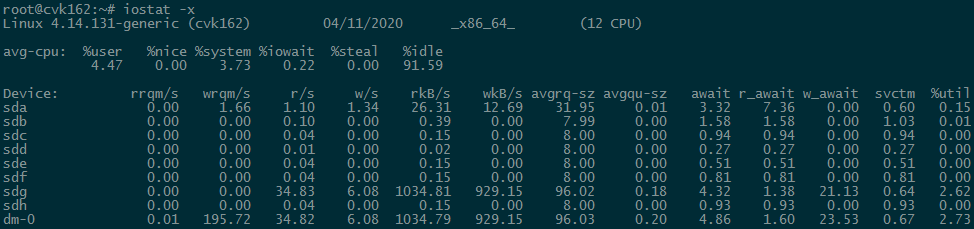

You can also use the iostat -x command at the CVK backend to view disk I/O information.

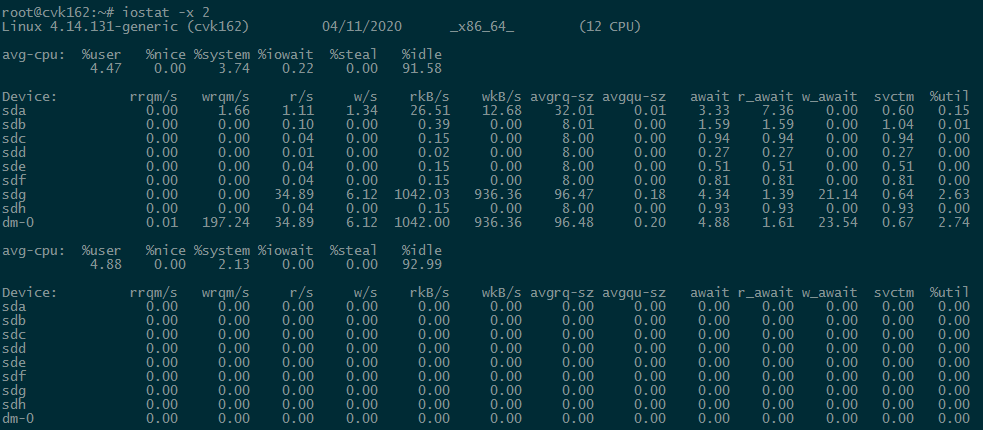

You can use the iostat -x 2 command at the backend to automatically refresh and calculate the results every 2 seconds.

4. View the network throughput of the host.

Click the ... button in the Network Throughput section on the Performance Monitor page to view network throughput information of each physical NIC over a longer time range.

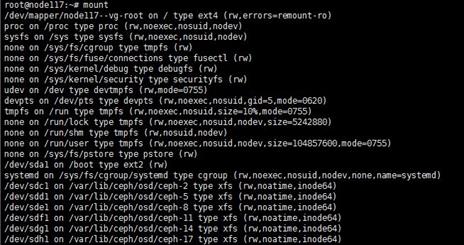

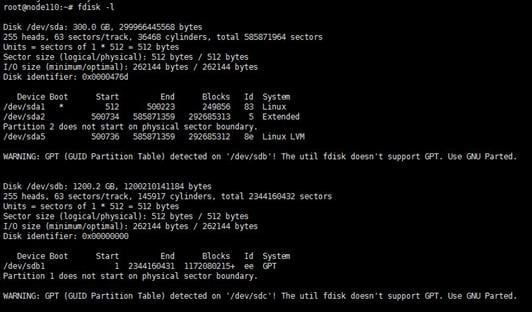

View the disk usage of a host.

The Disk Request section on the Performance Monitor page presents information on disk usage of a host.

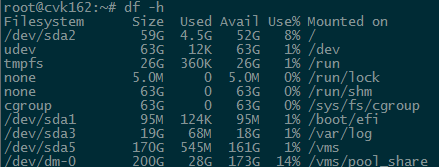

View the occupancy rate of host partitions.

The Partitions section on the Performance Monitor page displays the host's partition occupancy information.

You can use the df -h command at the CVK backend to check the partition occupancy information in real time.

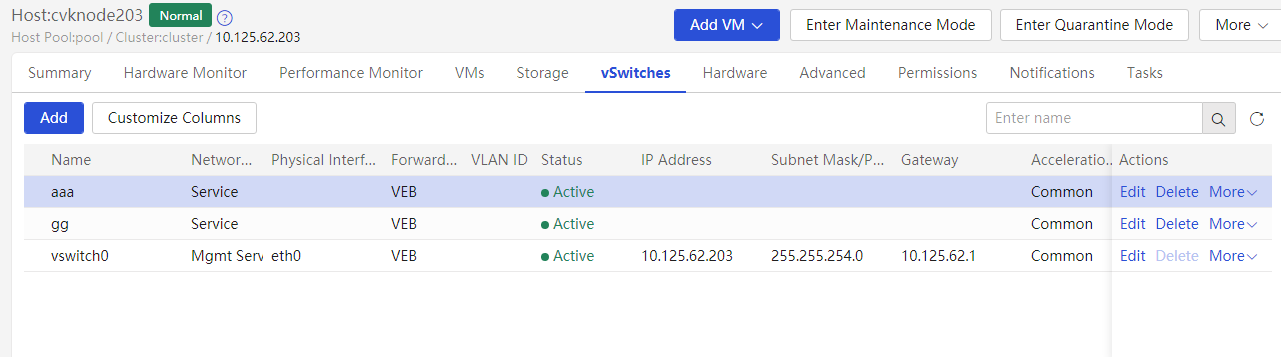

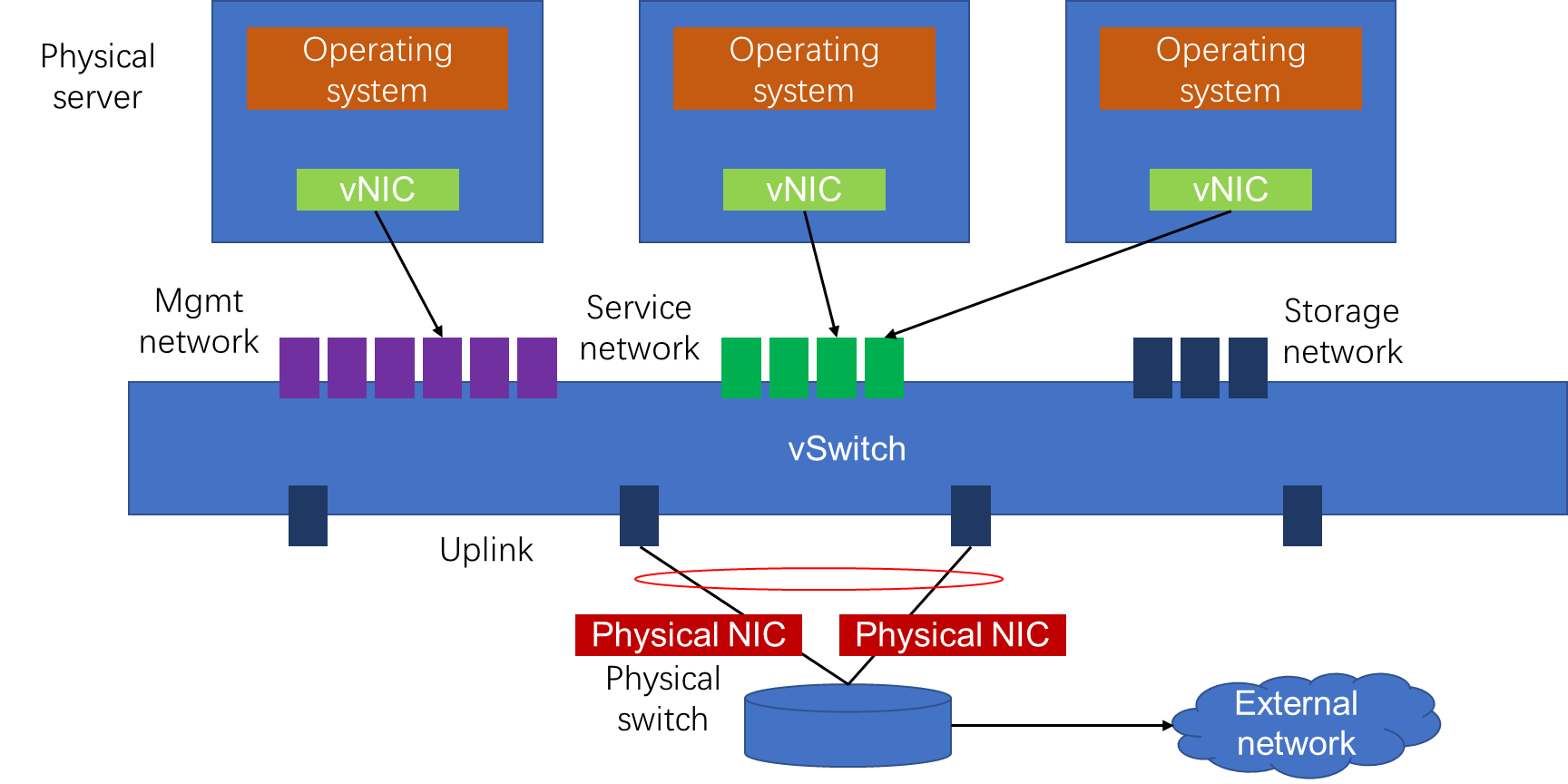

Viewing virtual switches

Check if the virtual switch names are consistent among hosts in the cluster.

Check the status of each virtual switch on the vSwitches page of the host to make sure they are active. Verify that only one gateway is configured among all virtual switches on the host.

Viewing physical NICs

Check the physical NICs of the host on the Physical NICs page. Available information includes rate, working mode, and activity status.

An anomaly can affect the performance of a virtual switch.

|

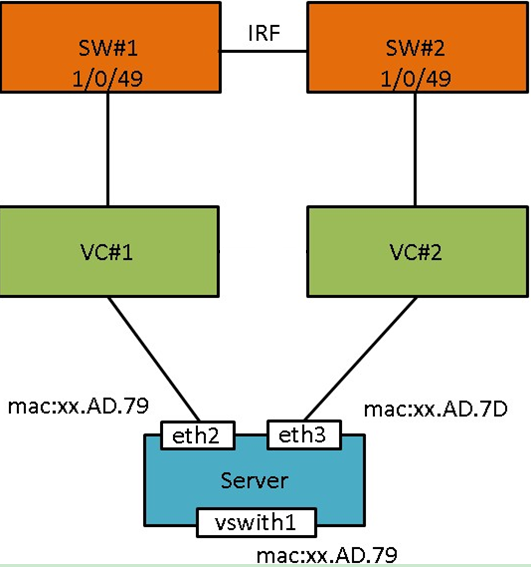

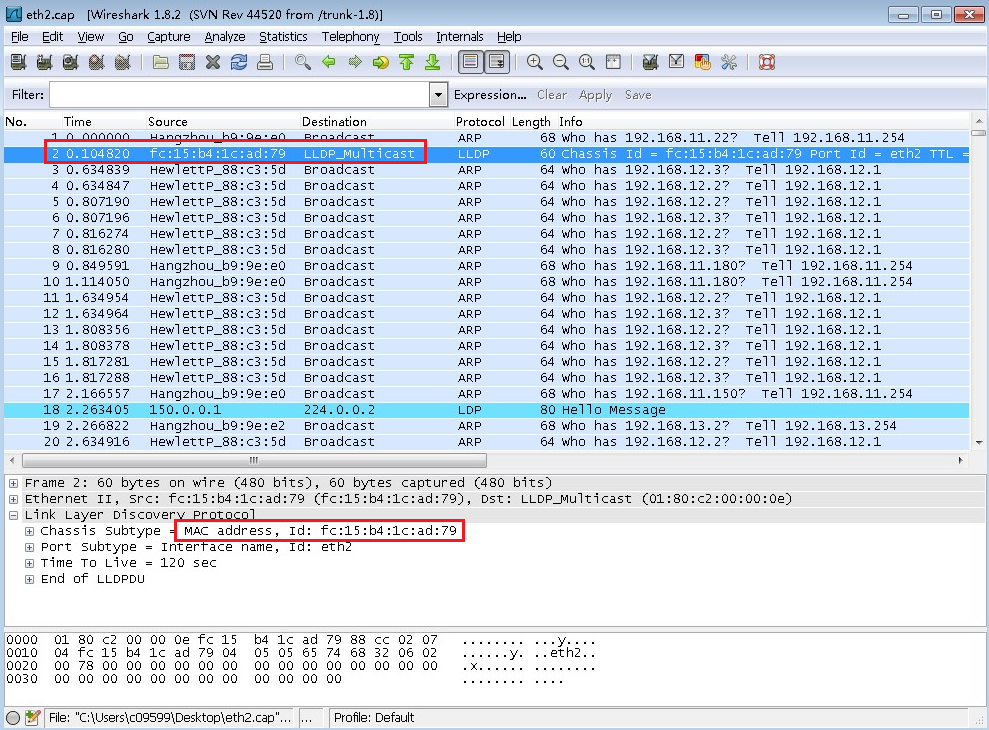

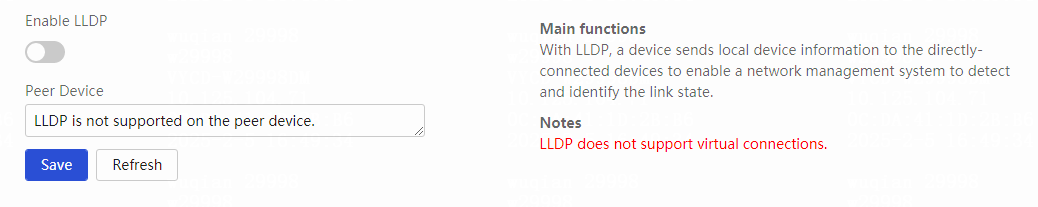

CAUTION: When a blade server uses VC, you must disable LLDP to avoid communication issues on the blade server and VMs on the server. |

Viewing VMs

Verifying if CAS Tools are running correctly

Typically, you can check the running state of the CAS Tools through the VM summary page of the CVM interface. Available states include running and abnormal. Abnormal state may be caused by not running or pending upgrade.

You can also determine the CAS Tools' manual running state through VMs.

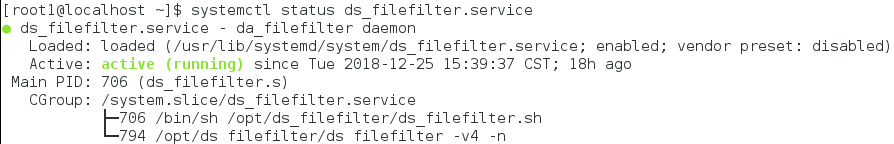

· Linux VM:

For a Linux VM, you can check the state of CAS Tools at the backend by viewing information about the qemu-ga service.

For example, in an Ubuntu system:

root@cvknode-wj:~# service qemu-ga status

* qemu-ga is running

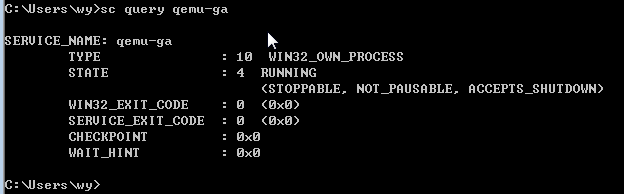

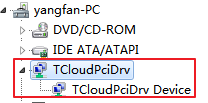

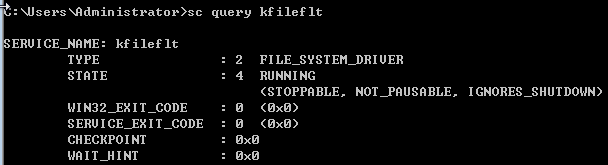

· Windows VM:

For a Windows VM, you can check the corresponding process in Device Manager to identify the state of CAS Tools. The process name is qemu-ga.

For example, in the Windows 7 system:

You can also use cmd to check the state:

Viewing the type of disks and NICs

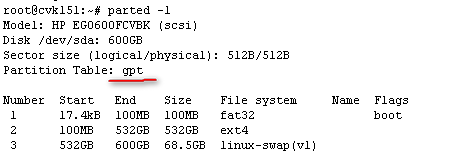

Disk types

CAS supports the following types of disks:

· High-speed disk: virtio disk

IDE disk

High-speed SCSI disk: virtio SCSI disk

You can identify the disk type on the Modify VM > Disk page of the CVM interface.

The XML tags for the three types of disks in the background are also different:

· High-speed disk

<devices>

<emulator>/usr/bin/kvm</emulator>

<disk type='file' device='disk'>

<driver name='qemu' type='qcow2' cache='directsync' io='native'/>

<source file='/vms/images/win7-wj'/>

<backingStore/>

<target dev='vda' bus='virtio'/>

<alias name='virtio-disk0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x08' function='0x0'/>

</disk>

· IDE disk

<disk type='file' device='disk'>

<driver name='qemu' type='qcow2' cache='directsync' io='native'/>

<source file='/vms/images/jj'/>

<backingStore/>

<target dev='hdb' bus='ide'/>

<alias name='ide0-0-1'/>

<address type='drive' controller='0' bus='0' target='0' unit='1'/>

</disk>

· High-speed SCSI disk

<disk type='file' device='disk'>

<driver name='qemu' type='qcow2' cache='directsync' io='native' discard='unmap'/>

<source file='/vms/images/gg'/>

<backingStore/>

<target dev='sda' bus='scsi'/>

<alias name='scsi2-0-0-0'/>

<address type='drive' controller='2' bus='0' target='0' unit='0'/>

</disk>

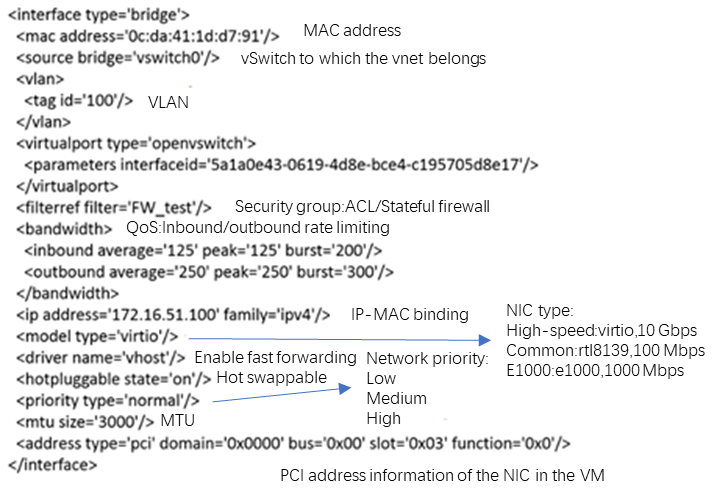

NIC types

CAS provides the following types of NICs:

· Common NIC

High-speed NIC

Intel e1000 NIC

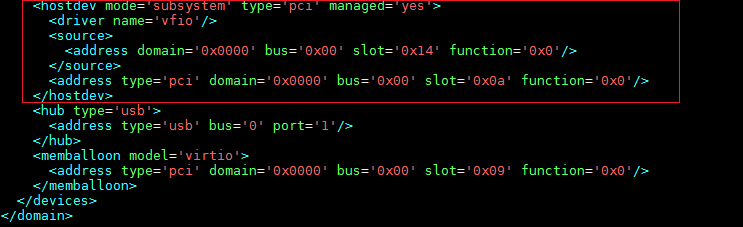

SR-IOV passthrough NIC

For SR-IOV NICs, VMs display them as PCIe devices.

You can also use the VM XML to identify NIC types:

· Common NIC

<interface type='bridge'>

<mac address='0c:da:41:1d:41:6c'/>

<source bridge='vswitch0'/>

<vlan>

<tag id='1'/>

</vlan>

<virtualport type='openvswitch'>

<parameters interfaceid='2738d0d4-4133-4f4d-a187-5bbf7cddf9fd'/>

</virtualport>

<target dev='vnet6'/>

<model type='rtl8139'/>

<priority type='low'/>

<mtu size='1500'/>

<alias name='net1'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x0c' function='0x0'/>

</interface>

· High-speed NIC

<interface type='bridge'>

<mac address='0c:da:41:1d:33:b7'/>

<source bridge='vswitch0'/>

<vlan>

<tag id='1'/>

</vlan>

<virtualport type='openvswitch'>

<parameters interfaceid='0f40ef32-3e05-41ee-b68d-1e0ce1f5ca18'/>

</virtualport>

<target dev='vnet5'/>

<model type='virtio'/>

<driver name='vhost'/>

<priority type='low'/>

<alias name='net0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>

</interface>

· E1000 NIC

</interface>

<interface type='bridge'>

<mac address='0c:da:41:1d:03:6e'/>

<source bridge='vswitch0'/>

<vlan>

<tag id='1'/>

</vlan>

<virtualport type='openvswitch'>

<parameters interfaceid='3739afd5-d624-476f-a653-f1d1e0ee3f2c'/>

</virtualport>

<target dev='vnet7'/>

<model type='e1000'/>

<priority type='low'/>

<mtu size='1500'/>

<alias name='net2'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x0d' function='0x0'/>

</interface>

Viewing performance monitoring of VMs

You can easily view the performance monitoring of a VM on the VM_name > Performance Monitor page of the CVM interface.

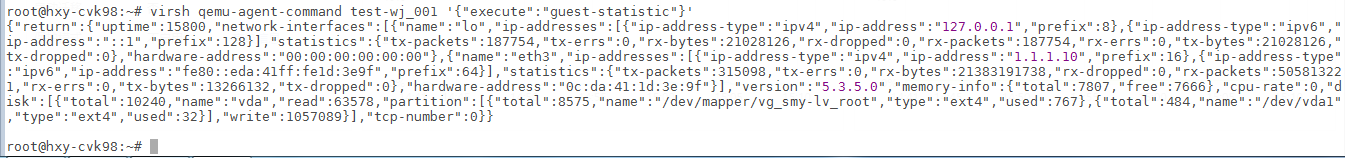

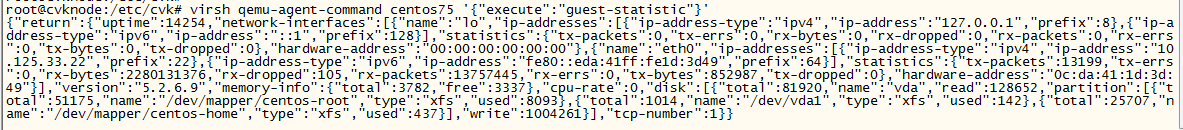

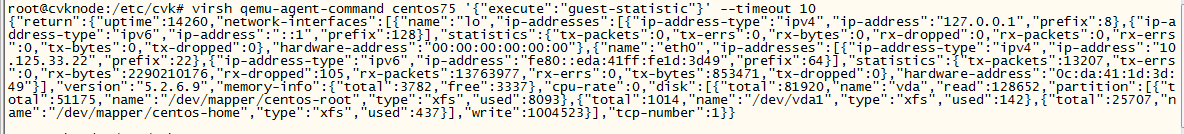

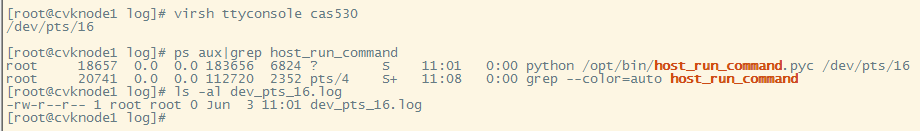

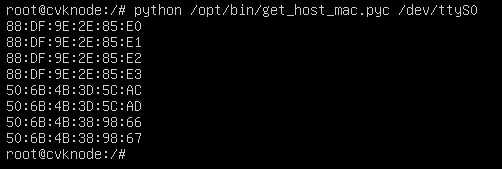

To obtain information about a single VM, use the qemu-agent-command command at the backend.

Command format:

virsh qemu-agent-command vm_name '{"execute":"guest-statistic"}'

Example:

The command can obtain performance data reported by CAS Tools. To use this command, make sure CAS Tools is in running state on the VM.

Log analysis and fault location

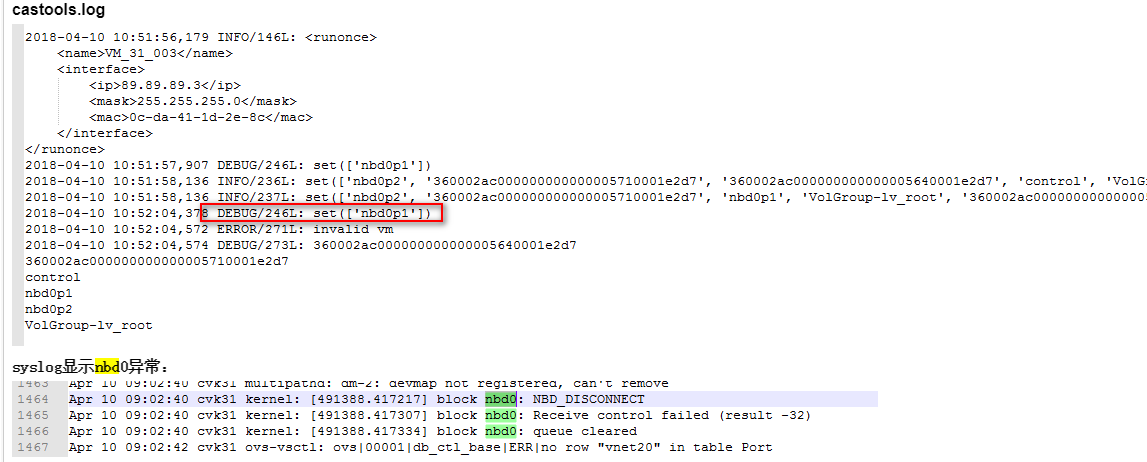

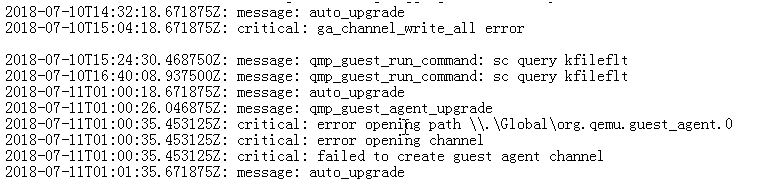

Log troubleshooting for CAS Tools

For the running status of CAS Tools, the following parts must be focused on:

1. Check if the VM system is in normal state. For example, if the system can be pinged or accessed through SSH, if the Windows VM can be accessed remotely through mstsc, and if the console can be opened.

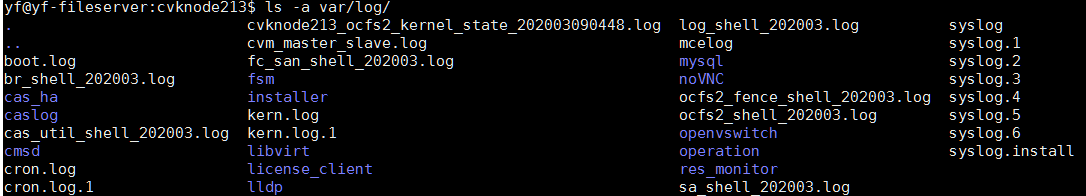

2. To troubleshoot the CAS Tools log when the system is operating correctly, access the /var/log directory to view the qemu-ga.log file in a Linux system, and access the installation directory of CAS Tools in a Windows system.

Typical problems:

· CAS Tools is not running

Two main causes result in this issue:

¡ An anomaly occurred in the qemu-ga within the VM.

¡ An anomaly occurred in the communication channel between the VM and CAS.

· View the version of qemu-ga.

In Linux: qemu-ga --version

In Windows, right-click qemu-ga.exe and click Properties. The file version displayed on the details tab represents the version number.

· Methods to enable debug logging for CAS Tools and qemu-ga:

¡ CAS Tools:

Modify /opt/bin/castools.conf

[handler_LogHandler]

class=FileHandler

level=DEBUG

formatter=LogFormatter

args=('/var/log/castools.log',)

¡ qemu-ga:

Linux: Modify /etc/qemu-ga/qemu-ga.conf to remove # in #verbose=true.

Windows: Change the access of the qemu-ga.conf file in the installation directory in the same way as in Linux

· Open-source qemu-ga issue:

Some Linux distributions have a pre-installed open-source qemu-ga program that can cause issues when running together with CAS qemu-ga. You must remove the pre-installed qemu-ga. To verify if the current qemu-ga is the open-source version or CAS version, use the qemu-ga --version command.

Performance data

You can refer to the relevant content of casserver. If anomalies exist in the performance data of specific VMs, you can use the qemu-agent-command command to verify if the collected data is normal.

Restrictions and guidelines

The function operation does not have risks.

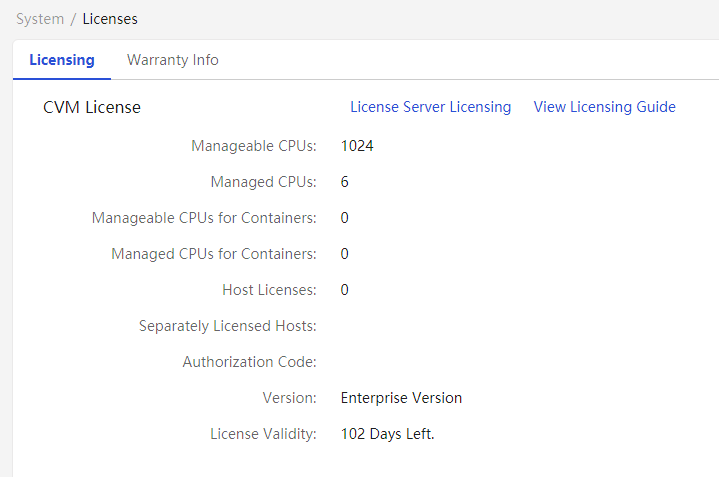

Viewing licenses

You can manage license configurations on the System > Licenses page.

The system mainly contains the following parts: local authorization, disk backup and disaster recovery, and heterogeneous platform migration.

You must use formal licenses for formal deployment. Temporary licenses can be used for testing or temporary deployment. Temporary licenses have an expiration date and may affect normal usage if expired. Make sure to update temporary licenses in advance to avoid license expiration.

The following figure shows the license interface.

Introduction to the CAS module

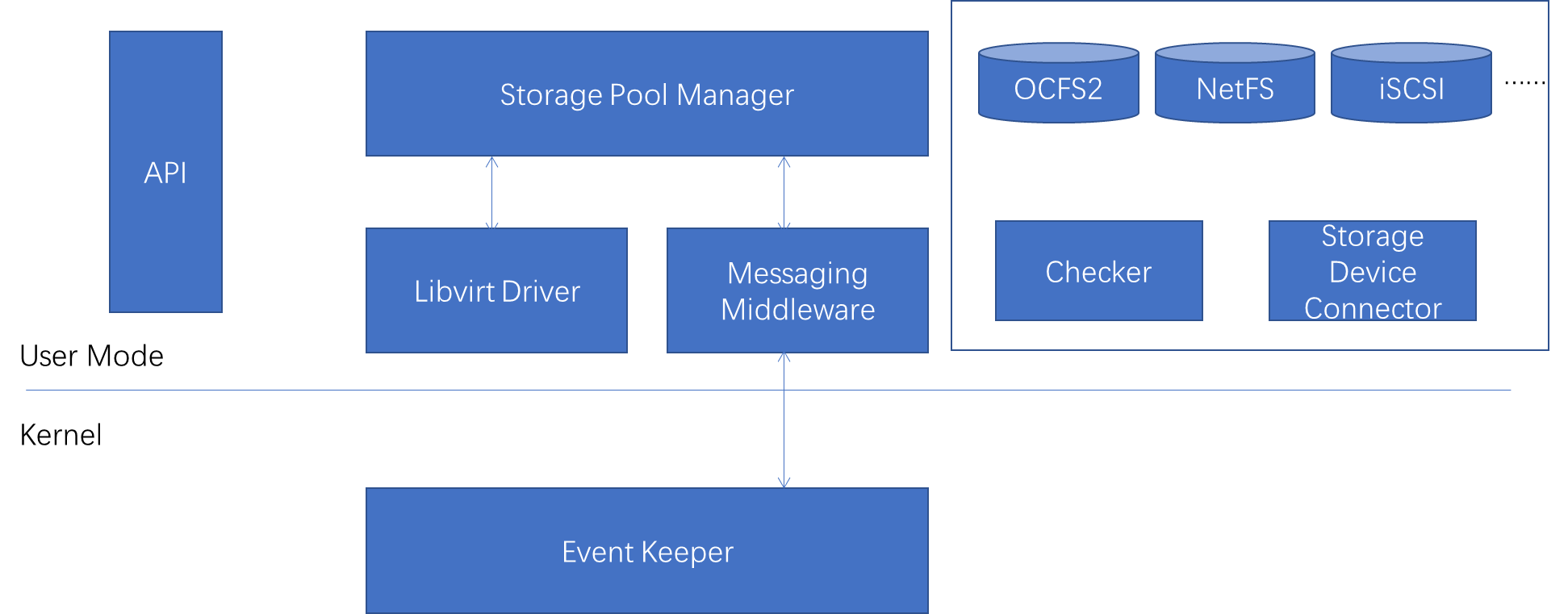

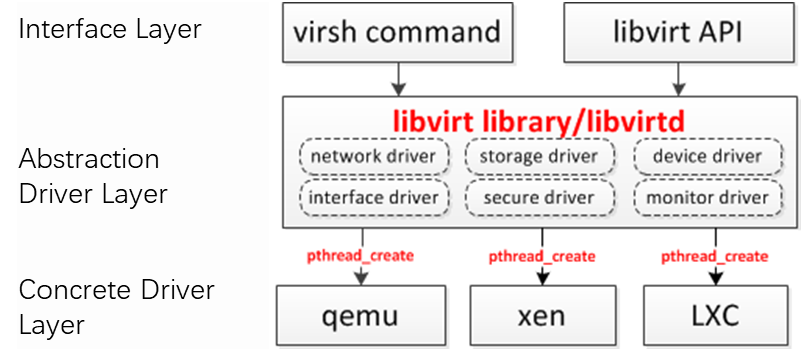

CAS is installed with a Linux operating system that contains various modules for implementing VMs. Each module is a self-contained system that is interrelated to form a unified virtualization management and implementation platform.

CAS mainly includes frontend configuration, calculation, storage, and network modules, as well as related scripts.

This section introduces the basic principles, common commands, and positioning methods of each module, which can be analyzed and referenced during problem processing.

Frontend configuration

The frontend includes the configuration interface of CVM/CIC, administrator operations, and management functions to implement virtualization. It mainly includes Tomcat and casserver.

Tomcat

Tomcat process description and service management

service tomcat8 restart | start | status | stop

Commands commonly used for troubleshooting

The following commands are applicable to both CVM and casserver components. In case of any issues, you can use the commands to obtain necessary information for analysis and feedback in a timely manner.

· Jstack: Jstack can be used to obtain information about Java stack and native stack for running Java programs.

Command format: jstack -l <pid> > <dstFileName>

· Jmap: Jmap can be used to obtain detailed information about memory allocation for running Java programs. For example, the number and size of instances.

Command format: jmap -dump:format=b,file=<destFileName, e.g. heap.bin> <pid>

· Jstat: You can observe information related to classloader, compiler, and gc. You can monitor resources and performance in real time.

Command format: jstat –gcutil <pid> <interval, unit: ms>

Log analysis and fault location

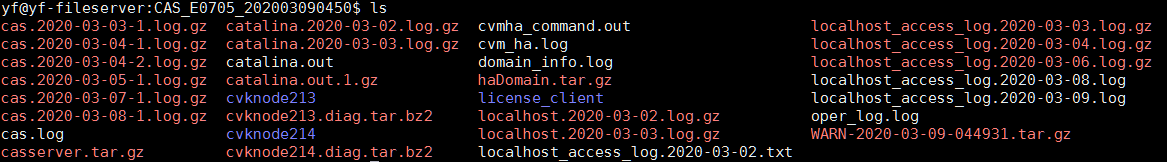

Log directory: /var/log/tomcat8/

· Catalina.out: Records CVM boot logs. If CVM cannot start up, you can view the logs.

· Cas.log: Records the internal operation logs of CVM. If the Web interface prompts errors, you can view the logs.

· hs_err_pid.log: Records Tomcat service anomalies, such as system crash.

· localhost_access_log: Records external requests to CVM, including interface requests and external interface requests, such as CVM interface calling by a plugin.

· jstack generation logs: Used to view specific stacks that may be stuck or blocked when interface tasks are not completed for a long time.

· jmap generation logs: The eclipse MemoryAnalyzer tool is required to resolve the logs. Typically, the logs are collected and then directly provided to the R&D team.

To view cas.log and jstack logs, fuzzy search based on interface services is supported. For example, to filter VM startup logs, you can use startDomain.

Restrictions and guidelines

As a best practice, do not execute Jmap when the environment management service is busy or the environmental pressure is high. You can use other commands if necessary to collect information.

Casserver backend processes

Casserver process description and service management

service casserver restart | start | status | stop

Commands commonly used for troubleshooting

Same as Tomcat.

Log analysis and fault location

Log directory: /var/lib/casserver/logs

· standardServer_bootstrap.log: Records casserver boot logs. If CVM cannot start up, you can view the logs.

· casserver.log: Records casserver internal running logs.

· jstack and jmap: Same as the Tomcat module.

Mysql

Mysql process description and service management

service mysql restart | start | status | stop

Access mysql, and use mysql –uroot –p <database, vservice by default for CAS>. As a best practice, do not include passwords in the command lines to avoid password recording in system logs.

Commands commonly used for troubleshooting

Refer to the Mysql repair document for repairing the Mysql environment.

Log analysis and fault location

Log directory: /var/log/mysql

Error.log: Record mysql-related errors. If the system cannot start up by using the start command, you can view the logs.

Performance monitoring

Performance monitoring description

The performance monitoring tab under the homepage of clusters, hosts, and VMs displays performance monitoring information for the corresponding clusters, hosts, or VMs. Performance monitoring of clusters displays CPU usage, memory usage, I/O throughput statistics, disk requests, and shared file system usage.

Performance monitoring of hosts displays CPU usage, memory usage, I/O throughput, network throughput, total number of network packets, number of lost network packets, disk requests, disk I/O latency, disk usage, and partition occupancy.

Performance monitoring of VMs includes CPU usage, memory usage, I/O throughput, network throughput, disk I/O latency, disk requests, number of connections, CPU usage, disk usage, and partition occupancy.

The chart type collects data in the past half hour. You can query historical performance data for host and VM performance data.

Commands commonly used for troubleshooting

· View if the casserver service is running: service casserver status

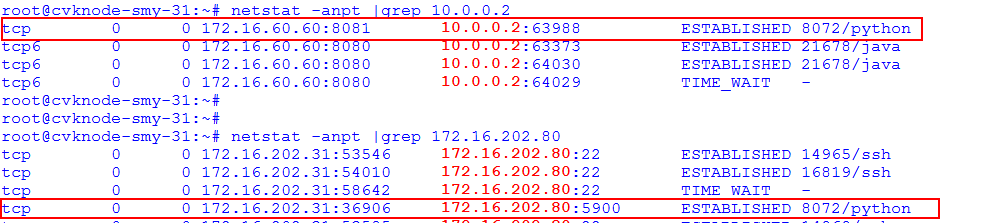

· Verify if a HA module is connected to casserver: netstat –anp|grep 9120

· View the running condition of the performance data report service: service cas_mon status

· Dump memory data: jmap -dump:format=b,file=e.bin <pid>

Log analysis and fault location

First, determine if the hosts or VMs are in running state. VMs and hosts that are not running have no performance data. Performance data issues can be divided into the follow types:

· Issues caused by MySQL database errors.

· Issues caused by casserver errors.

· Issues caused by errors of the performance data report service cas_mon.

MySQL database issues can be disregarded because when MySQL encounters a problem, it will first affect other functions.

Casserver-specific issues mainly manifest in two ways: The entire service process has crashed or the service process is still running but cannot provide functionality as expected. For the issue of a stopped Cassserver process, in most cases it is caused by OutOfMemory exceptions due to excessive memory usage or another reason that leads to the shutdown of the Cassserver service. If the process is still running but cannot provide functionality, it may be caused by insufficient system resources because too many pipes are triggered and consumes a large number of FDs.

Location process:

1. Verify if the mysql service is operating correctly. If the service is not operating correctly, troubleshoot mysql issues.

2. Log in to MySQL, check the corresponding data table, and verify if data for the recent time exist. If data for the recent time exists, troubleshoot Tomcat and locate CAS engineering.

3. Verify if the casserver service is running correctly. If it is not running, collect logs for analysis. (Logs in the /var/log/ and /var/lib/casserver/logs/ directories)

4. If casserver is running, verify if related error logs exist in /var/lib/casserver/logs/casserver.log. If error logs exist, analyze the cause based on the error information provided. If no error logs exist, verify if cas_mon has not reported any errors or if it has reported errors but the reported errors have not been processed by cassserver. You can use jstack to examine for any blocking threads. You can use jmap to export the memory mirror and analyze if data is not immediately written to the database after the data are received.

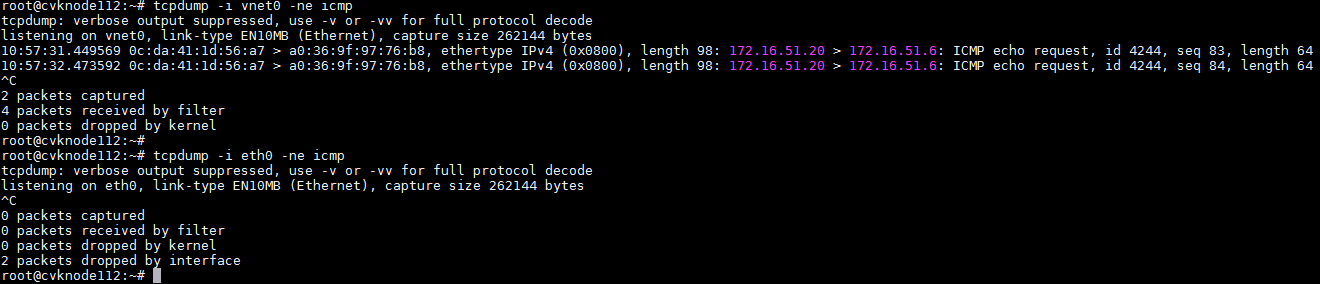

5. To verify if a cas_mon error exists, use netstat to examine if socket connections exist between cas_mon and cassserver. If no connection exists, perform cas_mon issue analysis. If such connections exist, use tcpdump to capture packets. Verify if relevant data packets exist. If such packets exist, analyze casserver. If no relevant data packets exist, it can be determined that the issue is caused by cas_mon errors. Analyze cas_mon.

6. Analyze cas_mon and verify if the service is running correctly. If the service is not running, review the /var/log/cas_ha/cas_mon.log file and Linux system logs to analyze why the service stopped. If the service is running, review the /var/log/cas_ha/cas_mon.log file for clues to the problem.

Restrictions and guidelines

The performance monitoring function only provides data display, and does not provide any risky operation interface. No operational risk exists.

Configuring SRM

About SRM

Disaster recovery management helps system administrators configure disaster recovery settings for protected and recovery sites. When the protected site or its production servers stop operation because of disaster or deliberate destruction, the recovery site takes over to ensure service continuity. A system administrator can set up disaster recovery protected and recovery sites, protection groups and resource mappings, and create recovery policies. Disaster recovery management supports storage replication and disk backup for disaster recovery.

Storage replication disaster recovery depends on server support for storage replication or storage replication adapter (SRAs). To use disaster recovery, first configure a protected site and a recovery site. If the storage servers support SRAs, configure the storage arrays. Then, map resources for the protection group and create a recovery plan. You can execute a recovery plan to test and restore functions. Recovery plan test is manually started and finished. It is used to verify site configuration, protection group, and recovery plan configuration. Recovery plan test does not affect VM business data. Recovery operations include scheduled recovery, failure recovery, and reverse recovery. Scheduled recovery and reverse recovery can be used in combination. Failure recovery and reverse recovery can be used in combination. Scheduled recovery restores running VM business at the recovery site. Failure recovery restores faulty VM business at the recovery site. Reverse recovery restores the VMs that are already running at the recovery site at the protected site. During the reverse recovery process, new data generated during the recovery site operation will also be restored on the VMs created at the protected site.

Troubleshooting commands

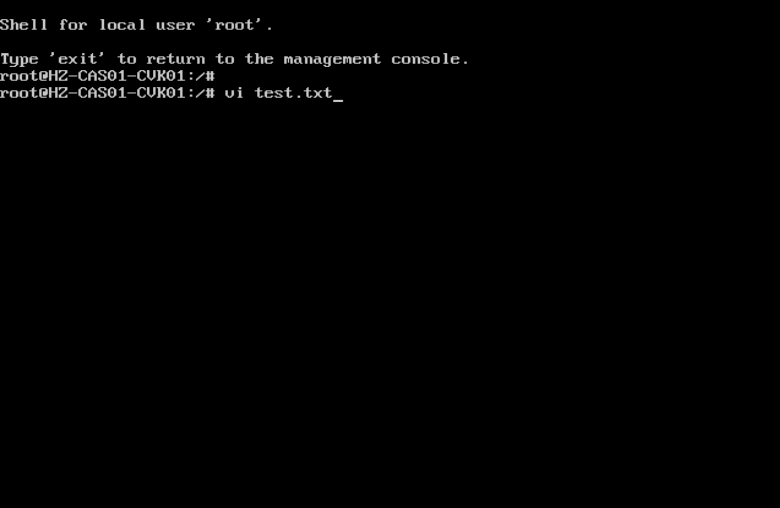

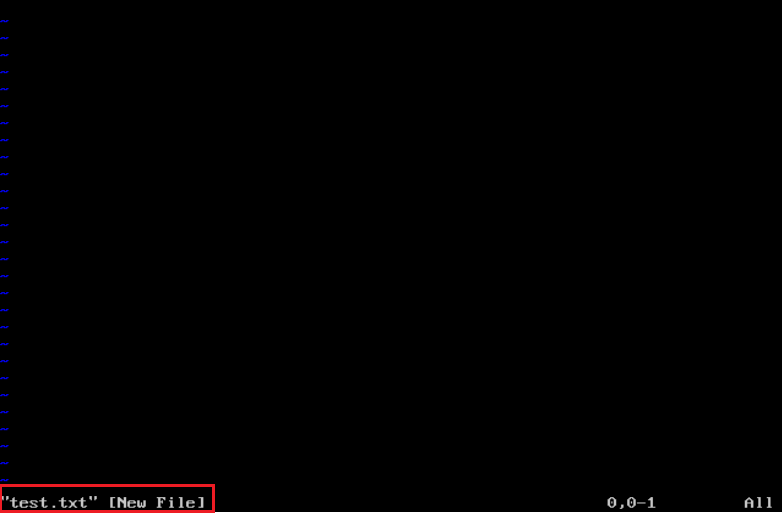

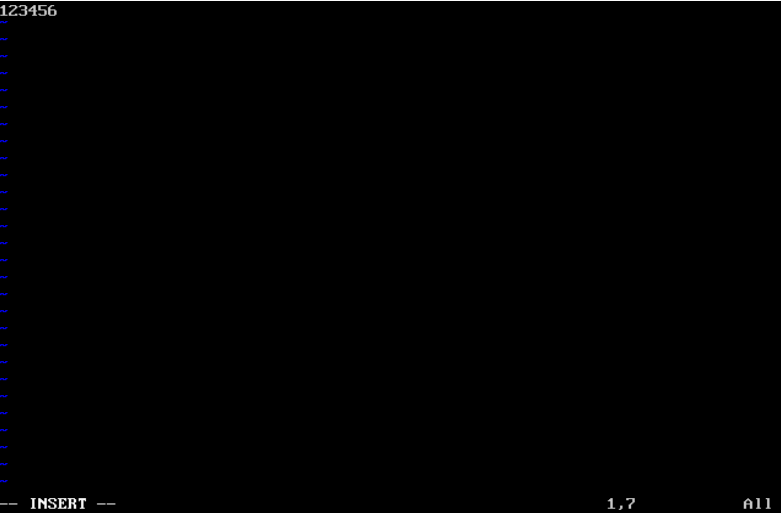

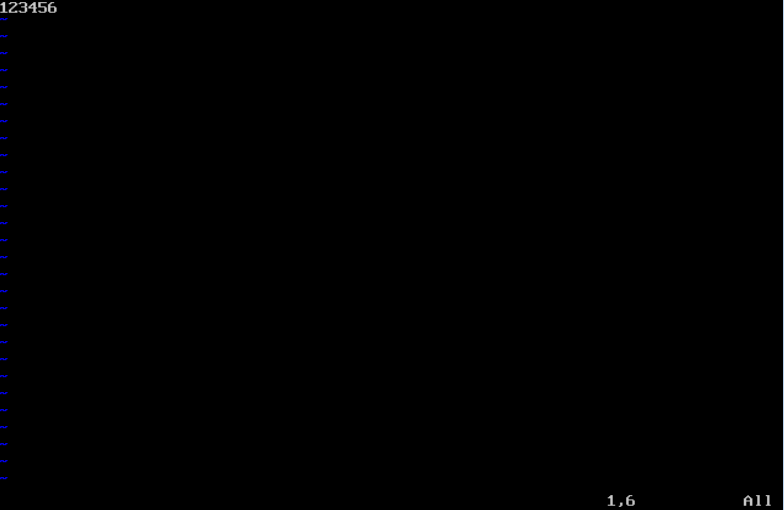

To view the CAS log, use the vi /var/log/tomcat8/cas.log command.

Troubleshooting

A VM cannot be allocated to a storage replication group

Verify that the following requirements are met:

1. The VM does not use resources that are bound to the host resources (hostdev), such as USB, CPU (CPUTune), NUMA, or optical drive or floppy drive using host volumes, or the resources are in an intelligent resource scheduling pool.

2. The storage used by the VM is among the shared storage resources of the protection group. For a multi-level storage resource, make sure each level is among those resources.

3. The virtual switch and port profile used by the VM is among the virtual switches and port profiles of the protection group. If the VM has multiple NICs, verify that every NIC meets this requirement.

4. The VM is not a DRX resource.

5. The recovery site does not have a VM with the same name as the one being recovered. To view VM names, execute the virsh list --all command or check the TBL_DOMAIN.DOMAIN_NAME field in the database.

6. The volume file used by the protected VM exists.

7. The VM's port profile exists in the /etc/libvirt/qemu/domainProfile/{domainName}.xml file.

8. The VM is not in another protection group.

Errors occurs during recovery plan rest, scheduled recovery, failure recovery, or reverse recovery for a storage replication protection group using 3PAR SRAs

Troubleshoot the issue based on the specific cause. For example:

· If an error occurs when recover plan test is started or finished, try executing the failed task again. The 3PAR SRA script can be executed repeatedly regardless of the current status. If the operation button is grayed out, set the STATUS field of the corresponding record in the TBL_SRM_RECOVERY database to 1 for the protected or recovery site to indicate failure. Although the button is operable, you must delete the mounted storage before clicking the button. Remove storage pools from hosts instead of deleting shared file systems. Then, restart Tomcat before operating the button again.

· If an error occurs during scheduled recovery or failure recovery, check the role of the remote copy group on the storage server backend. If the button is still operable, retry the recovery task. If the role has switched, check why the recovery site cannot mount the storage or start the VM. Common causes include insufficient CPU or memory resources on a host, or no host detects the volume in the remote copy group. After resolving the issue, retry the task. If the button is no longer operable, go to the protected and recovery site's backend to set the STATUS field in the corresponding record in the TBL_SRM_RECOVERY database to 1 to indicate failure. If the recovery site has mounted relevant storage resources, delete the resources. Remove storage pools from hosts instead of deleting shared file systems. Then, restart Tomcat. Next, check the role of the remote copy group. If the role has not changed, try to execute the recovery task again. If the role has changed, have the recovery site to meet the requirements before executing the task again.

· For an error occurring during reverse recovery, if the button is inoperable, modify the STATUS field in the database, delete storage pools at the recovery site, and restart Tomcat. Then, execute reverse recovery again.

Synchronization of device replication relationships fails after SRAs are configured

This issue occurs if CVK is not added to the primary or backup site. Commands issued by SRAs are passes by the CVK hosts to the storage servers. Verify that both primary and backup sites contain CVK hosts supporting SRAs. For 3PAR storage, check the /opt/3par/hpe3parsra/srminterface/command.pyc script. For MacroSAN storage, check the /opt/Macrosan/sra/command.pl script. To verify connectivity between 3PAR storage devices and storage servers, perform ping operations. Verify correctness of usernames and passwords, and enable HTTPS on storage servers for 3PAR storage. To view the information, execute the showwsapi command on the storage servers.

A replication relationship is added for a pair of LUNs newly added on the storage servers of the primary and back sites and the replication relationship cannot be found during storage mapping configuration of a protection group

Verify that the replication relationship has been synchronized from the Web interface of the sites. You can check if the newly added LUN information has been synchronized in the storage array management list of site management. Ensure that all recovery plans are placed in initial state. Otherwise, the relationships that have already been added to the protection group after storage replication synchronization might be incorrect or missing.

An error occurred when scheduled recovery, reverse recovery, and scheduled recovery are executed in turn for non-SRA storage

Non-SRA storage programs do not synchronize LUNs. Before executing a recovery task, synchronize LUNs manually. If you do not synchronize LUNs before reverse recovery, the subsequent scheduled recovery will fail.

Storage LUN device promotion fails before scheduled recovery is performed for non-SRA storage.

· Ensure that snapshot is enabled for related LUNs of the primary and backup sites on the storage servers.

· Ensure that the number of snapshot time points of the related LUNs in the primary and backup sites has not reached the upper limit on the storage servers.

· Ensure that automatic time point saving is enabled on the storage servers for the related LUNs in the primary and backup sites.

· Ensure that the LUNs have been manually synchronized.

· Make sure that at least one CVK host in the backup site can access the storage devices configured for the protection group. Check whether the identifier of the storage adapter of the backup site CVK host exists in the Initiator associated with the target client of the storage server.

Recovery of some VMs fails after scheduled recovery is finished

· If non-SRA storage is used, LUNs might not be synchronized before scheduled recovery is executed.

· During the recovery of multiple VMs, some VM might not start successfully due to CVK being busy. In this case, retry the recovery task to resolve the issue.

· If a migration operation has been executed for the VMs in the protected group before failure recovery, the failure to synchronize the VM LUNs to the backup site might cause the VM recovery to fail.

· During recovery, the system will check if the resources on the host are sufficient. If not, adjust the resources to ensure that the recovery site has enough resources before executing the task.

Storage resource suspension fails during scheduled recovery.

If the storage is occupied by an active process, suspension will fail. For example:

· The bug report (201607260323) describes an active VM outside of the protection group that references files from the storage of the protection group through the optical drive.

· An operator has not logged out of the storage directory through SSH.

The desired remote copy group cannot be found when storage scan with 3PAR SRAs is performed.

To resolve this issue:

1. Connect to 3PAR servers and check if the remote copy group's status is correct.

2. Ensure that the protected volumes in the remote copy group are exported to the hosts at the protected site, and the backup volumes are exported to the hosts at the recovery site.

3. Check if the expected remote copy group information is in the /srm/sra/conf/data/rcgroupinfo_XXXX.xml file on each CVK host. Execute device discovery on CVK hosts without the information. If the target WWN is discovered by using this method, delete the remote copy group from the rcgroupinfo_XXXX.xml file on CVK hosts where the WWN is not found.

In version E0705, reverse recovery with 3PAR SRAs fails due to a null pointer exception.

Firstly, verify that the null pointer failure is triggered during the second syncOnce execution. Check the cas.log file to see if the error stack where the null pointer is reported contains the keywords syncOnce and SiteRecoveryManagerHandler.reversePlan. If they exist, log in to the two 3PAR servers and use the showrcopy command to check if the role of the remote copy group used by the protection group has been switched, which means the latest role of the remote copy group added to the protection group as primary is now secondary. If this condition is met, perform the following tasks to restore the environment:

1. Stop the restored VMs at the recovery site, and delete the VMs without clearing the storage volumes.

2. Stop the storage pools mounted by the protection group at the recovery site and delete it. Only delete the storage pools on the hosts. Do not delete the shared file systems of the host pools.

3. Log in to the 3PAR servers and perform a switchover operation for the remote copy group in the protection group. After the operation is successful, check the role of the remote copy group again. The remote copy group should be restored to the state it was when it was added to the protection group. For example, if its role was primary when it was added to the protection group, its role now is also primary.

4. Start storage and VMs at the protected site to ensure normal business operations.

|

IMPORTANT: Although the business is now running correctly, the recovery plan is still in failed state. This is a normal phenomenon. |

Risks, restrictions, and guidelines

During the disaster recovery process, there is a high risk of operational errors, mainly concentrated in the configuration part, with fewer risks in the post-configuration operations. Follow these restrictions and guidelines:

· If a non-SRA storage server is used, and the storage arrays at both the protected and recovery sites have replication and snapshot function licenses, data replication can be achieved.

· If a SRA-supported storage server is used, make sure that you have obtained the relevant licenses for the storage server. For example, for 3PAR, you need to obtain all the remote copy-related licenses that support SRAs.

· The data of the protected VMs is stored on shared storage resources that have replication relationships configured.

· You must obtain a license for CAS CVM to register the SRM feature.

· After the disaster recovery environment is configured, if a MacroSAN storage server is used, data synchronization must be completed before any recovery plan can be carried out.

· For storage arrays that support SRA function, SRM disaster recovery process can automate the entire VM restoration process. For storage arrays that don not support SRA function, SRM process needs manual preparation of the storage environment before VM restoration process can take place.

· If you use 3PAR servers as class 2 storage servers, follow these restrictions and guidelines:

¡ When you create a remote copy group, make sure that the WWNs of the LUNs with mapping relationships within the group are consistent.

¡ When you add a LUN on 3PAR devices to CVK as a shared file system or block device, ensure that the LUN has not been added to a remote copy group, or if it is, the role of the LUN in the remote copy group is primary.

¡ Currently, CAS disaster recovery only supports restoration in the event of a primary CVM fault or link fault between the primary CVM and primary storage server. It does not support restoration in the event of a primary storage server fault, but this will be supported in the future.

¡ CAS disaster recovery CAS only supports remote copy chains of RCFC type.

¡ Before synchronizing the storage replication relationships on CAS, verify that the roles of all remote copy groups used by CAS are not primary-rev or secondary-rev.

¡ To add a new storage replication relationship for use by CVM after synchronizing the storage replication relationships, you need to synchronize the storage replication relationships again after configuring them on the storage server. Before synchronization, ensure that all recovery plans are in initial state to avoid inconsistency before and after synchronization for the existing storage replication relationships.

¡ Do not execute switchover, restore, start, or stop operations on remote copy groups that have joined protected groups on storage servers. Otherwise, recovery or recovery plan test might fail.

Disk backup disaster recovery

About disk backup disaster recovery

Disk backup disaster recovery protects VMs or physical production servers installed with the disaster recovery client. The real-time disk replication feature of the disaster recovery client copies data of protected objects by operating system to the recovery site to restore the protected objects as VMs. With the snapshot chain and real-time synchronization mechanisms, data can be backed up and synchronized in seconds to satisfy the requirements of high-performance RPO data recovery.

Troubleshooting commands

· To check the status of a CDP server, execute the cdapserverservice status command.

· To start a CDP server, execute the cdapserverservice start command.

· To stop a CDP server, execute the cdapserverservice stop command.

· To check the status of a CDP storage node, execute the cdapstorage status command.

· To start a CDP storage node, execute the cdapstorage start command.

· To stop a CDP storage node, execute the cdapstorage stop command.

Troubleshooting

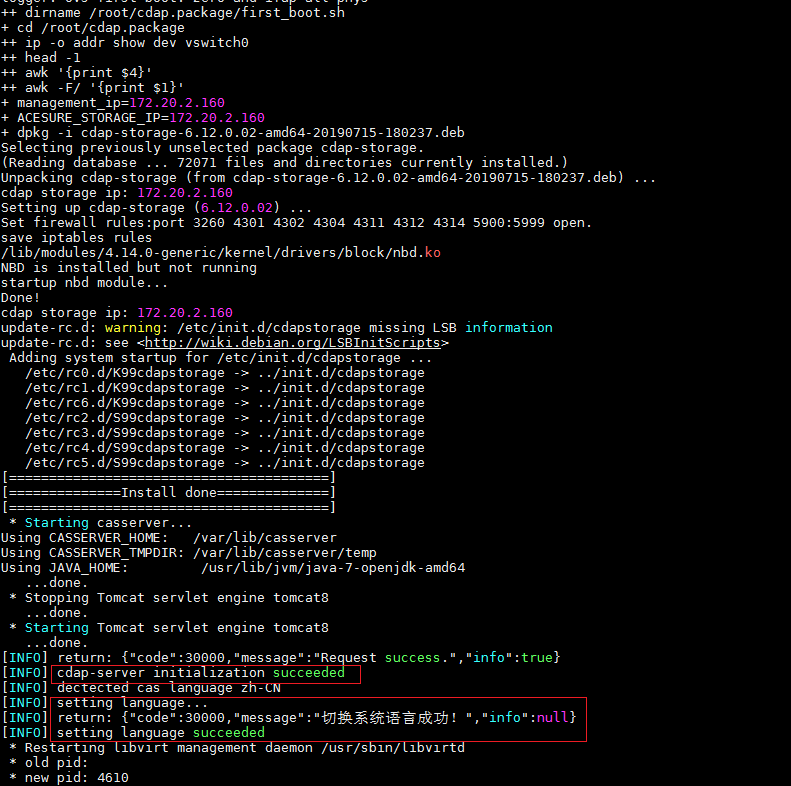

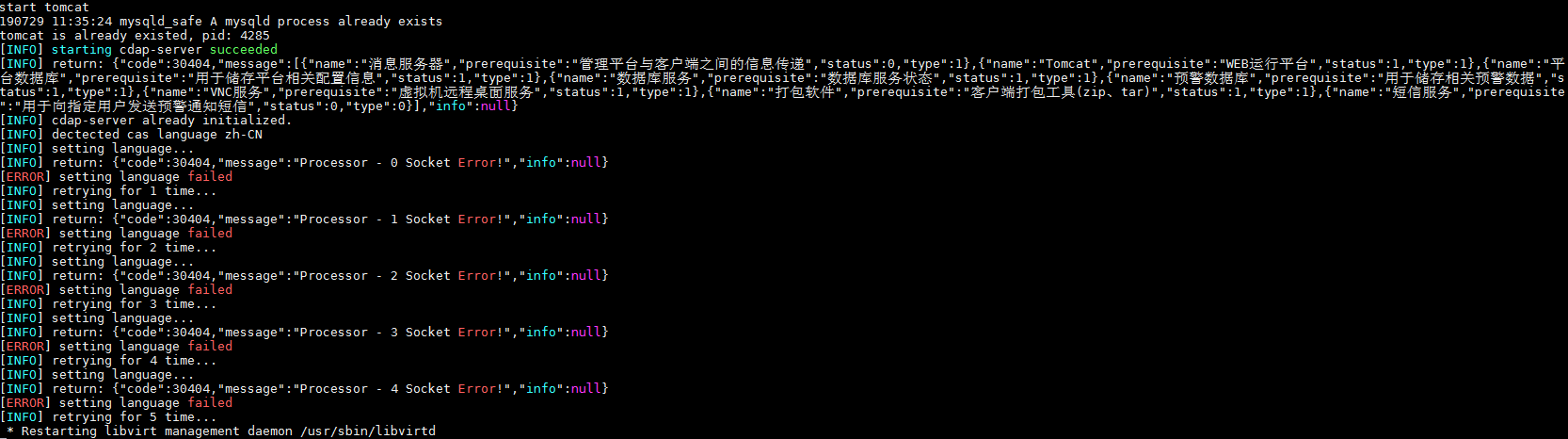

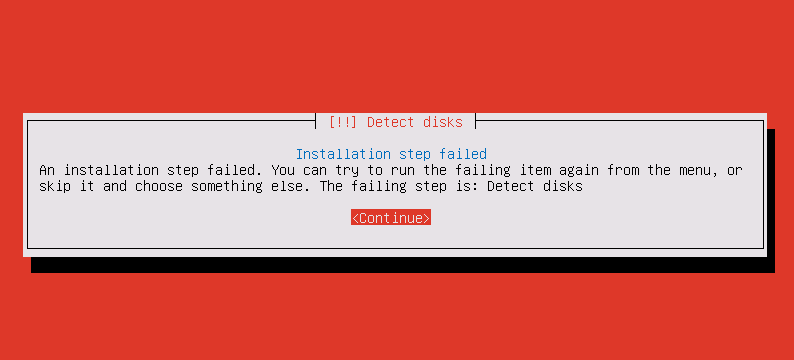

A host cannot be configured as a disaster recovery storage node

Symptom: When a host is configured as a disaster recovery storage node, the system prompts that the system is not initialized and you must initialize it first.

Cause: The AceSure server package has not completed initialization.

To troubleshoot this issue:

1. Verify that the host does not have an IPv6 address. Disaster recovery does not support IPv6.

2. Check whether the initialization is completed by examining the /var/log/firstboot.log file.

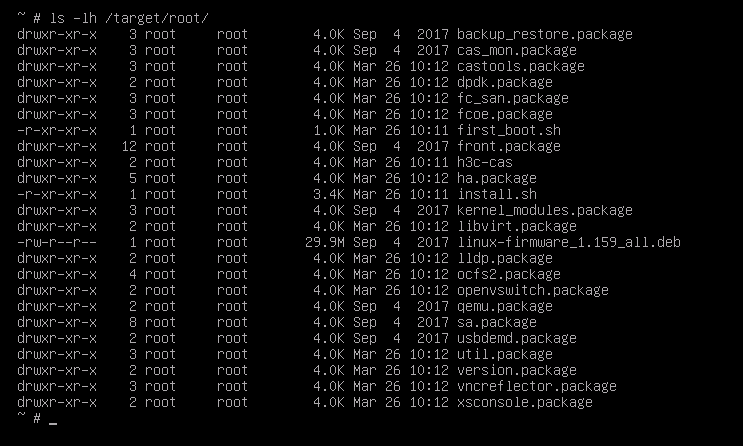

Figure 1 Successful initialization

Because the host is an IPv6 host, initialization and language format configuration fail.

Figure 2 Failed initialization

To resolve this issue:

· Method 1: Run front.upgrade/acesure/cdap/first_boot.sh in the upgrade package.

· Method 2: Log in to the AceSure console and manually complete initialization.

In the Vi /usr/local/cdap/6.0/server/tomcat/webapps/ROOT/WEB-INF/classes/appVersion.properties file, verify that forbidden_web=false exists.

Log in to CVM IP address:9980 with the default username admin and password admin. Start the configuration, use the default primary and secondary IP addresses (the CVM address), and then click Next until completion.

The disaster recovery management function is invisible after an upgrade is performed from a version earlier than E0701L01

Cause: The original SRM disaster recovery function is an enterprise edition feature. After the upgrade, the disaster recovery function can only be used in the enterprise enhanced edition.

Solution: Register with the enterprise enhancement license. This operation is performed by marketing personnel.

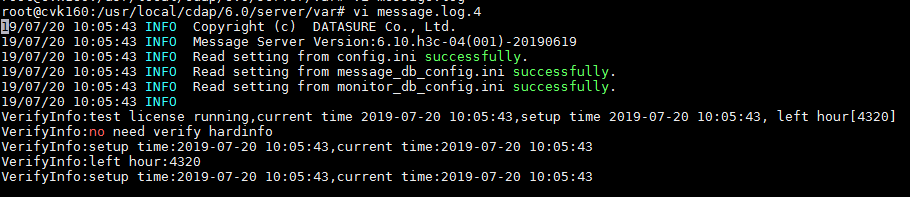

After the disk backup disaster recovery license is registered, the license information displayed is abnormal

Cause: The cas_mon service starts later than the cdapserver service. Check license information in the vi /usr/local/cdap/6.0/server/var/message.log file. This issue does not affect operation.

Solution: Restart the cdapserverservice service after installing a new environment. Execute the cdapserverservice stop and cdapserverservice start commands in turn. If protection groups have been configured, resolve this issue when no backup tasks are in progress.

A storage node goes offline if shared storage is repeatedly configured as disaster recovery storage medium

Cause: When a storage node is offline, it means that the AceSure storage node service is offline, not that the storage node itself is actually offline. To set up shared storage as storage media, restart the cdapstorage service for the configuration to take effect. Storage nodes might be restarting when you repeatedly configure shared storage as disaster recovery storage medium.

Solution: Avoid frequent configuration or try again later.

A production server cannot be found when nodes are adding to a disk backup protection group

Troubleshooting:

1. Verify that the production server is assigned an IP address, and the server can reach the management network and backup network of the recovery site.

2. Verify that Agent is running by using the following commands.

¡ Windows: cdap_client_agent_ex.exe

Verify cdap_client_slave_mount_ex.exe is running.

¡ Linux: server client_agent status

3. Protect group mappings: Verify consistency between the number of virtual switches and production servers. Verify that the VM's storage pools, virtual switches, and port profiles are not in the mapped resources.

4. Verify that the MESSAGE_SERVER_LAN_IP_ADDRESS of Agent is the server IP address of the recovery site.

¡ Windows: \Program File(x86)\Dsagent\Agent.ini

¡ Linux: /usr/local/saltfish/bin/Agent.ini

5. VMs with PCI, USB, SR-IOV, GPU/VGPU, TPM, NUMA binding, mounted optical drive, and floppy drive will be filtered and not allowed to be added.

6. VMs deployed via DRX fast deployment, DRX fast clone, and floating desktop pool, as well as VMs in fixed desktop pool protection mode, are not allowed to be added.

7. VMs with host physical CPU bindings are not allowed to be added.

8. The server is not in any protection group.

9. A VM with the same name exist at the recovery site.

The backup status of a production server is abnormal

· A production server is not successfully added to a protection group. However, the backup status of the production server is in a paused state.

Solution: On the production server addition page, click the restore button for the VMs with abnormal backup status.

· The backup state of VMs in the disk backup protection group is paused or abnormal.

Solution: Check whether there has been a backup network change. If a backup network change has been made, the cdapserverservice service of the recovery-side CVM and the cdapstorage service of the corresponding storage node need to be restarted.

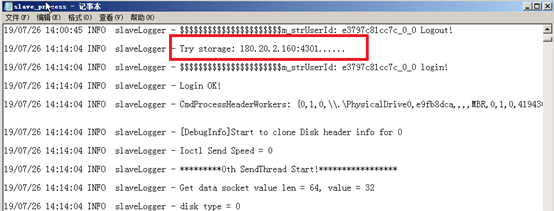

Check for network abnormalities and unreachability. You can ping between the production server and the host, and you can also check the slave_process.log and general.log in the Agent logs of the production server to see the current address used for communication with the AceSure console. If there is a network abnormality, the log will display connect error num=10053.

If the network is normal, select the abnormal production server and start the backup task.

Other factors that might lead to the paused backup status include:

¡ The disaster recovery storage media has no available capacity. As a result, the backup state will automatically become pause.

¡ If the production server's Agent is not running, check the cdap_client_agent service in Windows or the client_agent service in Linux.

· The backup status of VMs in the disk backup protection group is displayed as not configured.

Solution: Select the abnormal production server and issue backup configuration.

· The backup status of the VMs in the disk backup protection group is displayed as empty.

Cause: The production server or Agent is offline.

Bulk deletion of production servers fails for a disk backup protection group

· When there are a large number of VMs in the disk backup protection group, some of them might fail to be deleted in a bulk deletion operation.

Cause: AceSure takes a 2-minute cycle to process deletion tasks, and timeouts might occur due to network issues, disk performance, and task concurrency. You can delete the production servers again.

· When you delete a disk backup protection group for stateful failover or cluster business, you cannot delete it offline, and the backup state must be consistent during the deletion process.

A Linux recovery server cannot load the operating system, and the DataSure boot option must be selected

For example, if a recovery server recovered from a heterogeneous platform (VMware) cannot be started normally on the CAS platform and enters emergency mode, you can restart the VM and select the DataSure boot option to load the system correctly. This boot option is used to handle situations where the server cannot be started correctly.

Risks, restrictions, and guidelines

· Backup network best practices

As a best practice, set up a dedicated backup network as a disaster recovery backup network for a host. The primary and secondary addresses of the client must be their management network address and backup network address respectively. Configure two NICs for a production server, one to interconnect with the AceSure console network for communication between the console and Agent, and another to interconnect with the backup network for data transmission.

After a backup network is deployed to a virtual switch, if the virtual switch is modified by adding a new network or changing the IP address, the backup network configuration needs to be redeployed.

· Backup network changes

To make backup network changes, configure the backup network during non-busy hours, the console's cdapserverservice and cdapstorage services, and redeploy backup tasks to all protection groups. If changes are made during a backup operation, it will cause the backup operation to pause or fail. As a best practice, plan the networks during the initial setup and avoid changing the backup network settings for a live network.

· Restrictions and guidelines for client download

Only disk backup disaster recovery requires a client. Install the client on protected production servers (VMs, physical servers, or bare metal servers). Because the client requires the recovery server IP address, download the client after configuring the recovery network proxy IP address at either the recovery or protected side. As a best practice, download the client at the recovery site.

Use one of the following methods to download and install the client:

¡ Download the client from the Web interface and install it directly on a VM.

¡ For Linux systems, copy the URL link, paste it into the command line of a VM to download and install the client. The URL link is a Linux command line and is not supported by Windows.

· Install Agent on a production server

For client compatibility, see the CAS compatibility matrixes. Make sure that the client version matches the kernel version of the target production server.

After installing Agent on the Windows system, you must restart the system services for the installation to take effect. To view the Agent service:

Navigate to Task Manager > Services > CDAP Service.

Figure 4 CDAP service

Figure 5 CDAP process

For Linux production servers, manually select a client version whose compatible kernel version matches or is similar to the kernel version of the operating system.

After installation, you do not need to restart the Linux system. You can use the following command to check whether the service is running correctly.

¡ [root@localhost bin]# service client_agent status

¡ [root@localhost bin]#ps –ef |grep cdap

· Restrictions and guidelines for creating a new VM or VM template by cloning a VM with Agent-installed

In the initial environment, you might need to deploy Agent in bulk. When you clone a production server with Agent-installed as a VM template, delete the AgentSysteminfo configuration file under the Agent installation path on the production server. Then, clone the running production server to create a VM or VM template. The Agents on the cloned or template-deployed VMs will be in running status.

· Restrictions and guidelines for production servers and recovery VMs

¡ The first nine restrictions apply to VMs protected by storage replication disaster recovery. All the restrictions apply to VMs protected by back disaster recovery.

- Cloud rainbow migration.

- Convert to a template.

- Add VM hardware devices.

- Delete VM hardware devices.

- Volume migration within a storage pool.

- Migrate by storage.

- Migrate by host and storage.

- Migrate by host.

- Forbid deletion.

- Create restoration points.

- Create snapshots.

- Export as an OVF template.

- Managed by HA.

- Edit VM storage.

- Add VM or host rules.

- Forbid to add to a VM group or host group.

- Back up.

- Clone.

- Clone as a template.

- Backup policies.

¡ In storage replication protection groups, production servers have restrictions that include migrating by storage and migrating by hosts. In storage replication protection groups, production servers are allowed to be migrated by storage and migrated by host and storage.

¡ Agent on a recovery VM is in a non-running state. After the recovery VM is switched to a normal VM, its UUID and MAC address will change, and the AceSure field in the XML file will be deleted.

¡ Disaster recovery mechanism focuses on data accuracy. Consistency with the original production server in disk format, provisioning, and cluster size is not guaranteed.

· Disk expansion and addition for VMs in a protection group

¡ Storage replication—LUN replication has no impact on disk expansion and addition.

¡ Disk backup—To avoid data loss, if a production server in a disk backup protection group needs to add or expand a disk, the server must first be deleted from the protection group. After disk expansion or addition, the disk must be expanded or assigned a disk letter on the guest operating system, and then the production server must be added back to the protection group.

· Relationships between protection groups, recovery plans, and production servers

¡ Storage replication—One-to-one correspondence between protection groups and recovery plans.

¡ Disk backup—One protection group can correspond to multiple recovery plans, but one production server can only correspond to one recovery plan.

· Conditions for recovery plan test and scheduled recovery

During a recovery plan test, the second last snapshot is used to simulate the disaster scenario. If there is only one snapshot available on the production server, the test cannot be carried out. For server clustering and stateful failover setups, the snapshot count of a shared disk must be equal to or greater than 2 in order to perform the test. The production servers must not be modified during the test to ensure accurate and reliable results.

For a scheduled recovery, the last snapshot is used to restore the production server environment. However, if the production server is in a synchronization or backup state, recovery is not allowed. In the event of a failed recovery, you cannot perform another test to prevent further damage to the environment. Contact the development team for investigation and resolution in this case.

To query the snapshot number of a normal production server, click its display name in the protected production server list of a protection group to go to the snapshot information page. To view the number of shared disk snapshots for stateful failover and cluster environments, check the storage path.

· PE reverse recovery

After a recovery VM recovered by a disk backup protection group starts, to prevent data loss, use the PE tool to restore the backup data back to the production environment before cleaning backup data. Cleaning backup data will delete the recovery VM and its temporary files, but will not delete AceSure's backup data.

There are the following modes for PE restoration:

¡ Normal mode restores the snapshot point and all the data written at takeover before the restoration.

¡ Seamless mode restores a snapshot point and all data before the snapshot point and then restores the newly generated data in the VMs.

¡ Temporary data restoration only restores the newly generated data in the recovery VM.

· Maximum number of disks for VMs in a disk backup protection group

The production servers in a disk backup protection group can have up to 30 disks.

· Features not supported in the current software version

The current version does not support IPv6, stateful failover disaster recovery, disaster recovery in security mode, bulk deployment of Agent, and disaster recovery alarm. These features will be supported in later versions.

· For disaster recovery alarms, since the current version does not support disaster recovery alarms and CAS cannot read the backup data size in AceSure, enable the cluster shared storage usage threshold in CAS alarm management and set the backup storage on the host at startup as the disaster recovery storage medium. This enables timely expansion or backup data cleaning when storage capacity alarms occur, avoiding backup failure due to full storage.

HA

Overview

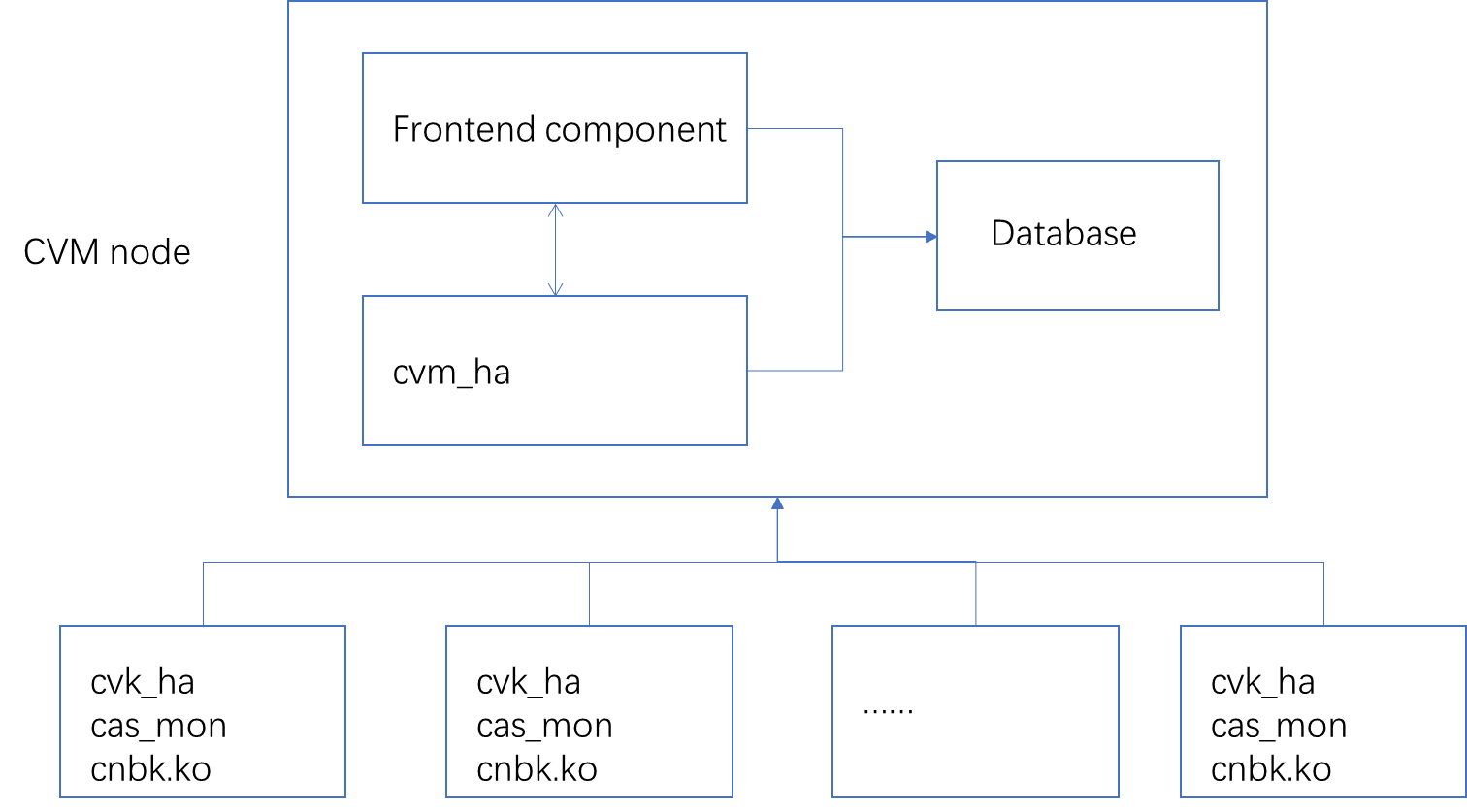

Figure 7 shows the HA modules and data flow direction.

Figure 6 HA modules and data flow direction

You can migrate or freeze VMs through HA.

· Migrate—If a VM uses the shared storage or a block device, the VM will be migrated to another CVK when the shared storage fails.

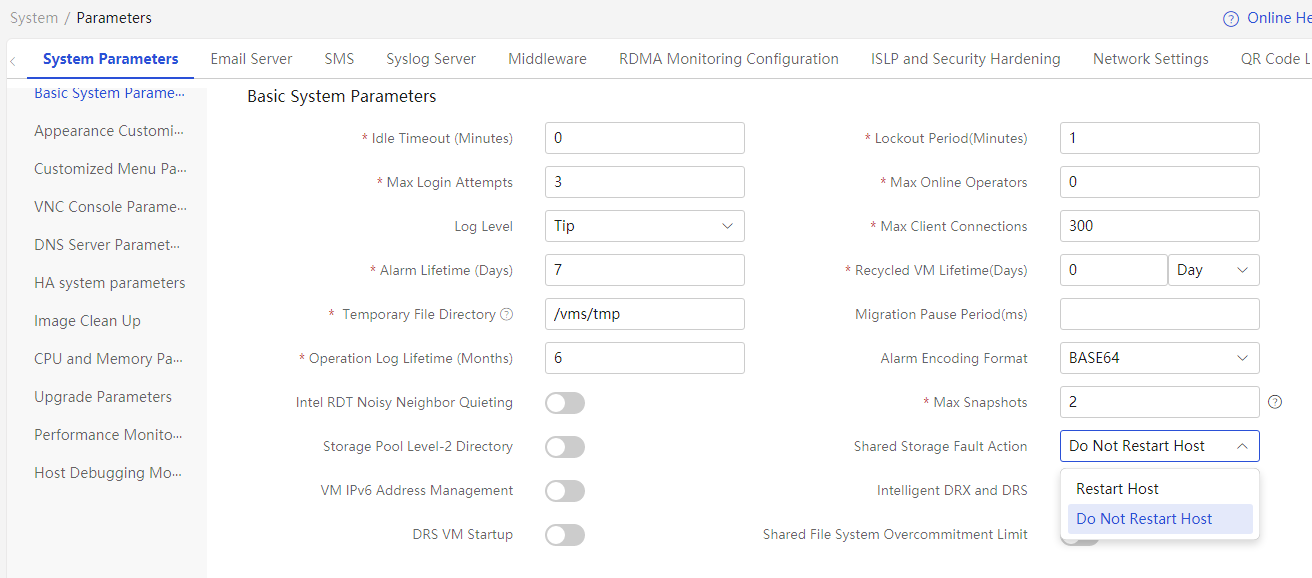

· Freeze—The VM will be frozen and enter suspended state when the shared storage fails. When the Shared Storage Fault Action parameter is Do Not Restart Host, this action can be configured. You can configure the Shared Storage Fault Action parameter on the System > Parameters > System Parameters page.

Command tool: cha –h. For more information, see the HA command help file.

Kernel module of HA

Implementation

The HA kernel module chbk.ko detects the host heartbeat between the CVK hosts and the storage heartbeat between the CVK hosts and the storage pool, and reports the detection results to the cvm_ha process. The cvm_ha process determines whether the management network of CVM and CVK is normal and whether the shared storage pool of CVK is normal.

Fault detection for the OCFS2 storage pool

· Active reporting by the storage module—When the storage module detects a storage pool fault. the fence will restart the host if the Shared Storage Fault Action parameter is Restart Host. If the Shared Storage Fault Action parameter is Do Not Restart Host, the storage module notifies the cvk_ha process of the storage pool fault through the cha command, and the cvk_ha process sends the message to chbk.ko, which reports the message to the cvm_ha process.

· OCFS2 storage pool heartbeat detection—The system reads timestamps from the heartbeat file /sys/kernel/debug/o2hb/storage_uuid/elapsed_time_in_ms. If the timestamp is greater than the heartbeat multiplied by 1000, the detection fails. If the number of consecutive failed detections is a multiple of 12, the cvk_ha process notifies the cvm_ha process of the storage pool fault.

Fault detection for the NFS storage pool

NFS storage pool heartbeat detection: chbk.ko writes timestamps to the heartbeat file /mount_path/.hostname_timestamp at the storage heartbeat interval. If the write fails, it is considered a detection failure. If the detection fails consecutively for three times or the number of failed detections is a multiple of 12, the cvk_ha process notifies the cvm_ha process of the storage pool fault and triggers the migration of VMs using the related storage pool.

Host heartbeat

chbk.ko sends a heartbeat packet to the cvm_ha process at the configured host heartbeat interval. If the cvm_ha process does not receive heartbeat packets for three consecutive intervals, the CVK management network will be considered failed.

Storage heartbeat and host heartbeat

Check the availability of VM disks: If a storage pool's heartbeat check fails, the VM disk in that pool is unusable. This can affect VM operations and trigger VM migration or freezing.

Detect host faults: If both storage heartbeat detection and host heartbeat detection fail, the host is considered faulty, and VM migration or freezing is triggered.

Therefore, host heartbeat and storage pool heartbeat are two important features of HA.

File path

The module path is /lib/modules/4.14.131-generic/kernel/lib/chbk.ko.

Common commands

To detect the kernel module loading state, use the lsmod | grep chbk command.

Problem location

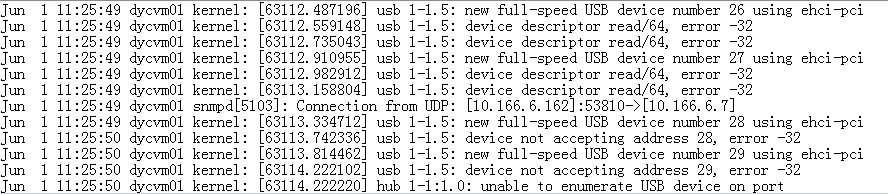

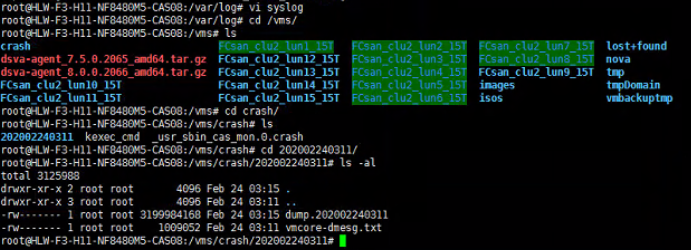

Log file: /var/log/syslog

If a storage pool fails or recovers (keyword: storage pool name), or a heartbeat interruption occurs in the network (keyword: keepalive), you can find related records in the log file.

User-mode services

Service processes

The HA user-mode service processes (cvm_ha, cvk_ha, and cas_mon) work together to ensure high availability of VMs and their applications.

· cvm_ha—located in the CVM node, connects to the database to obtain information about clusters, hosts, storage pools, and VMs. It also synchronizes information with the cvk_ha process. The cvm_ha process and the chbk.ko module exchange messages to monitor the state of the CVK management network and the state of the shared storage pool. They notify the CVM to execute VM migration in case of anomalies.

· cvk_ha—runs on the CVK node, synchronizes data with the cvm_ha process, and is responsible for recording, checking, and reporting information about modules such as VMs, storage pools, block devices, and networks on the CVK.

· cas_mon—is responsible for collecting and reporting performance data, detecting and reporting alarms, and monitoring the lifecycle of VMs.

Implementation

Fault detection for the RBD storage pool

The cvk_ha process uses the ceph command to check the status of the RBD storage pool. If there are three consecutive anomalies detected, the storage pool is considered faulty. If HA is in freeze mode, the cvk_ha process freezes the VM. If it is in migrate mode, the cvk_ha process reports the storage pool state to the cvm_ha process, which notifies the front end to migrate the VM.

Block storage detection

The cvk_ha process opens a block device and sends a test command to check the state of the block device. If there are three consecutive failures, the block storage will be deemed faulty. If HA is in migrate mode, the cvk_ha process notifies the cvm_ha process of block device faults, and the cvm_ha process notifies the front end to migrate the VM. If HA is in freeze mode, the VM is frozen.

Virtual machine fault detection and restart

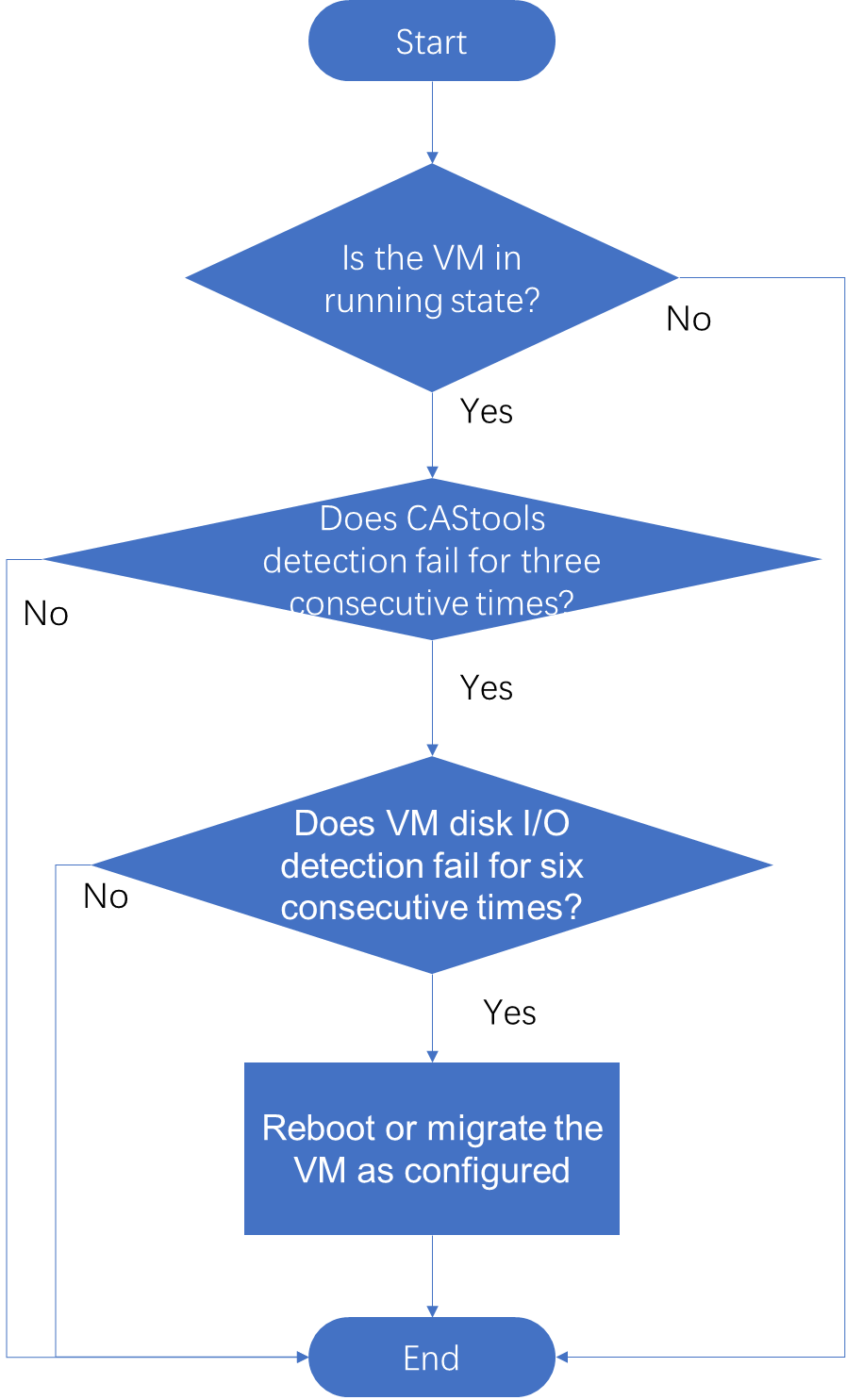

The front end adds a VM and synchronizes its state to the cvk_ha process, which is referred to as the expected state. If the cvk_ha process detected a VM in offline state instead of the expected online state, the VM status check fails. If a VM fails three consecutive checks, it is considered faulty. At this time, if the shared storage used by the VM fails, the cvk_ha process notifies the cvm_ha process to initiate VM migration. If the shared storage used by the VM is normal, the cvk_ha process tries starting the VM. If the VM is started, the cvk_ha process notifies the front end of successful fault recovery. If the VM fails to start, the cvk_ha process notifies the cvm_ha process to migrate the VM and informs the front end of failed fault recovery. After every 10 consecutive failures in checking the VM, the preceding process is performed once. The specific process is shown in the following figure:

Figure 7 VM fault detection

VM freezing

If HA is in freeze mode and the VM supports freezing, the cvk_ha process notifies the qume process to enable no_fence_start mode. High-speed SCSI hard disks support freezing, and IDE and other disks do not support freezing. When there is an I/O anomaly in the storage pool, the qume process switches the VM from running state to suspended state. If there is no I/O operation, the VM will not be set to the suspended state. The cvk_ha process sets the freeze flag in the VM XML file through libvirt.

<cas:freezestatus xmlns:cas=”umount-freezing”>1</cas:freezestatus>

If the storage pool recovers from failure, the qume process switches the VM to the running state and CVK_HA removes the freeze status.

<cas:freezestatus xmlns:cas=”umount-freezing”>0</cas:freezestatus>

When a VM that does not support freezing mode encounters a storage pool fault, it will be migrated to ensure availability.

Application HA

You can add an application monitoring policy for VMs from the Cluster > App. HA > Configure Monitoring Policies page.

The cvk_ha process uses CAStools to detect the HA state of VMs. If the CAStools state is abnormal for three consecutive times and the application HA state cannot be obtained, the cvk_ha process reports the application HA state as unknown. If CAStools runs normally and the VM application fails to pass three consecutive detections, the cvk_ha process reports the VM HA anomaly and restarts the VM application every three failed consecutive detections. After a VM application restarts for three consecutive times, the system will restart the VM and report an alarm if the HA strategy is to restart the VM.

Figure 8 VM application HA principle

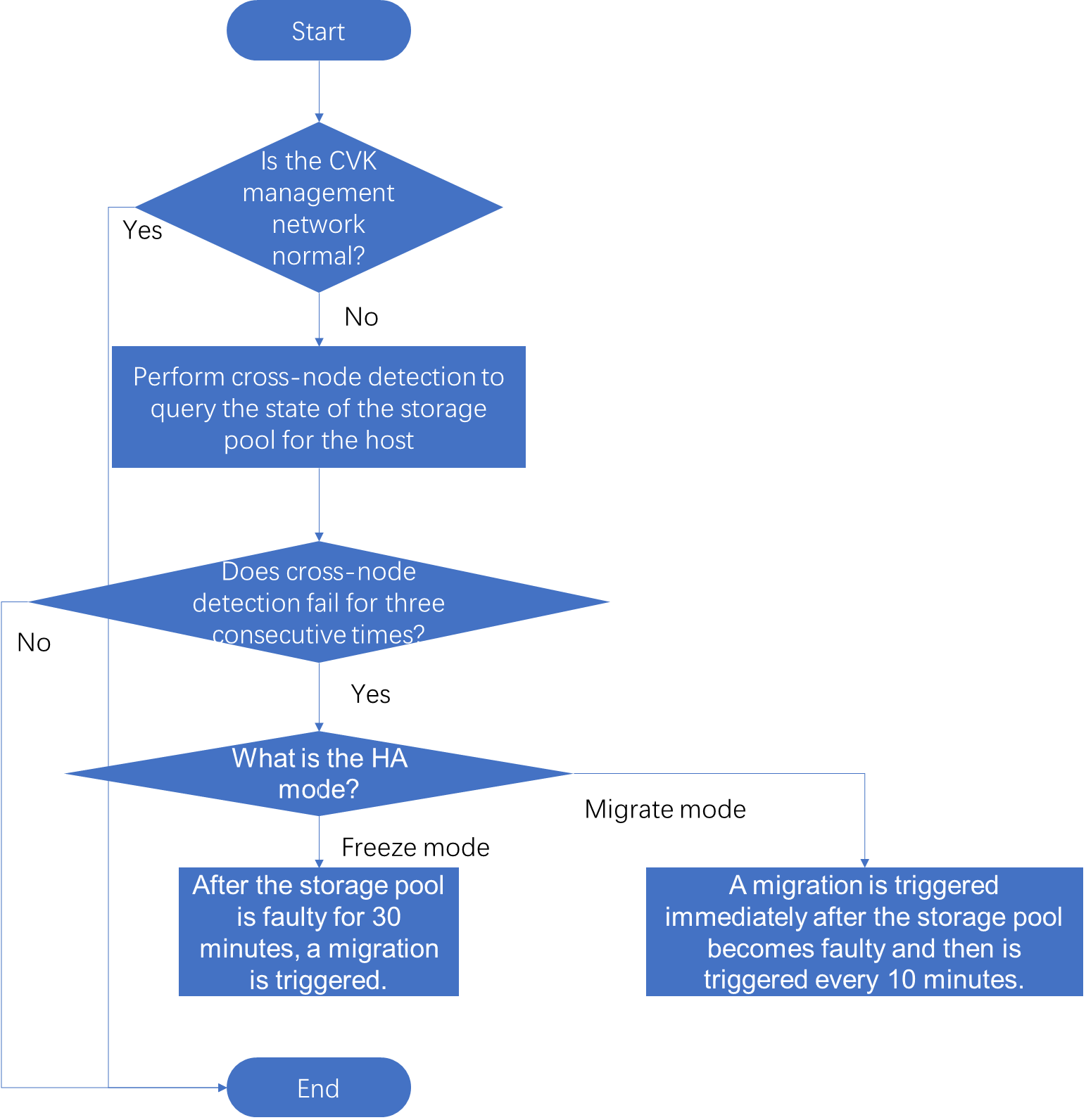

Host fault detection and VM migration

You can configure host heartbeat interval (the default is 10 seconds) from the System > Parameters > System Parameters page.

The chbk.ko module sends heartbeat messages to the cvm_ha process at the host heartbeat interval. If the cvm_ha process does not receive heartbeat messages for three consecutive intervals, it reports an alarm. This indicates a management network disruption between CVK and CVM. At this point, the cvm_ha process executes cross-node detection: Select a healthy CVK (with normal network connectivity and using the same storage pool as the current host (the storage pool status is normal)), and query the current host storage pool status. If the storage pool detection fails for three consecutive times, the current storage pool is considered faulty.

Freeze mode: If the storage pool is faulty and remains faulty for 30 minutes, the host is deemed faulty and VMs are migrated.

Migrate mode: If the storage pool is faulty, the host is deemed faulty and the cvm_ha process triggers VM migration once. Subsequently, the cvm_ha process triggers VM migration once every 10 minutes.

The process is as follows:

Figure 9 Host fault detection

Blue screen fault detection

You can enable blue screen fault detection on VMs through CVM. The osha tag in the VM's XML file is 1 or 2. A value of 1 indicates restart, and a value of 2 indicates migration. The cas_mon process checks the state of the CAStools within the VM. If CAStools detection fails for three consecutive times, detect whether there is disk I/O on the VM. If no disk I/O is detected for six consecutive times, the VM has a blue screen fault. Perform VM restart or migration based on the configuration.

Note: Blue screen fault detection is performed only on VMs in running state, to prevent misjudgment if VMs are manually suspended.

Figure 10 Flowchart for detecting blue screen faults in VMs

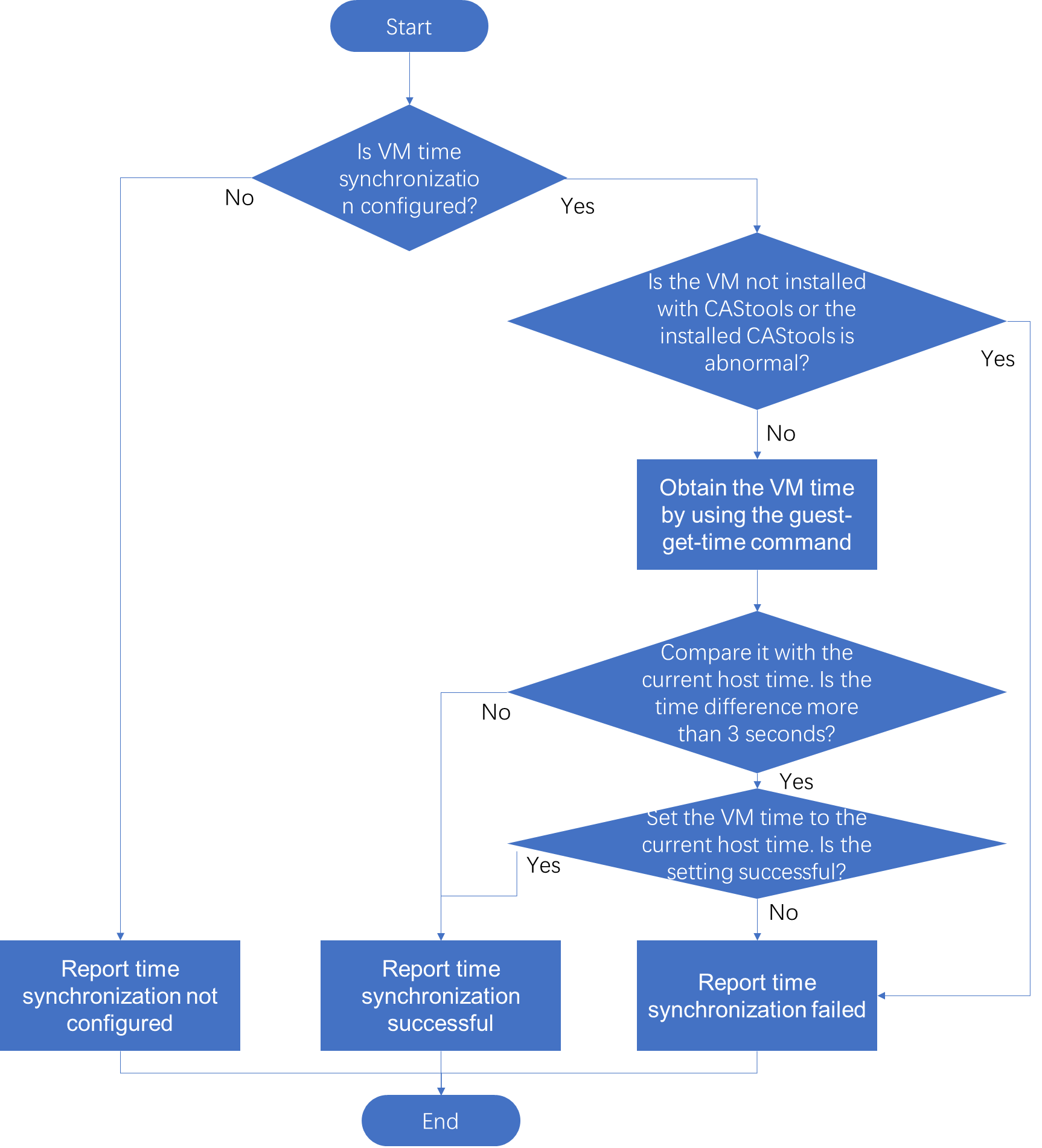

VM time synchronization

You can enable VM time synchronization through CVM by setting the timesync value to 1 in the VM XML file. Other values mean that time synchronization is disabled. If a VM is not installed with CAStools or the installed CAStools is abnormal, the cas_mon process reports the time synchronization state as failed through performance data.

If the CAStools state is normal, use the CAStools command to obtain the VM time and compare it with the current host time. If the time difference is more than 3 seconds, set the VM time to the current host time.

Figure 11 VM time synchronization

Report performance data

The cas_mon process collects the performance data of hosts, VMs, and storage pools. It passes the data to the front end through a TCP connection regularly.

Alarm services

Alarm configuration: CVM deploys alarm configuration to the cas_mon process, which records the alarm configuration in the memory and synchronizes it to the file /etc/cvk/casmon.conf.

Alarm detection and reporting: Alarms include internal alarms and external alarms. The cas_mon process detects and reports internal alarm. External alarms refer to alarms detected by other modules, such as storage. An external alarm is passed to the cas_mon process through the cha command. The cas_mon process reports or confirms the external alarm. The cas_mon process checks the current configuration for alarms, whether external or internal, and only reports them if they meet the relevant configuration requirements. See the CVM alarm management section for the configuration of alarms.

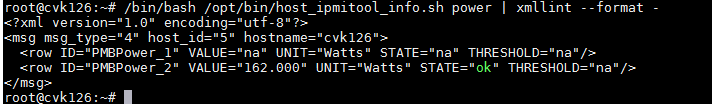

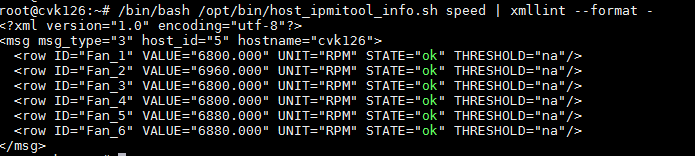

Hardware testing

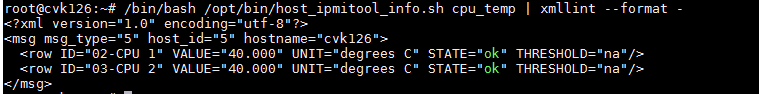

HA provides a hardware detection script: - /opt/bin/host_ipmitool_info.sh. Based on the ipmi management tool ipmitool, it retrieves information about the host power, fan, CPU, memory, temperature, and iLO, and checks their states. For example:

View the power supply:

View the fan:

View the CPU temperature:

View iLO port information:

Commonly used commands and configuration

cas_mon-related

Configuration and file:

The configuration file is located at /etc/cvk/casmon.conf.

The log file path is /var/log/cas_ha/cas_mon.log.

The performance data reporting interval can be configured from the System > Parameters > System Parameters page. If the current version does not support configuring this parameter, the default reporting interval is 30 seconds.

Common commands:

To set the log level, use the cha set-loglevel mode -m command. The mode can be debug, info, error, warning, or fatal, and the default mode is info.

To obtain the host performance data , use the cha get-perf-data host –m command.

To obtain the VM performance data, use the cha get-perf-data vm -m command.

To operate the process, use the service cas_mon operate command. The operate parameter can be status, start, stop, or restart.

cvk_ha-related

Configuration and file:

The service process is the cvk_ha process and it can be found in the path /usr/sbin.

The log file is located at /var/log/cas_ha/cvk_ha.log.

Common commands:

To operate the process, use the service cvk_ha operate command. The operate parameter can be status, start, stop, or restart.

To query information about all VMs on CVK, use the cha cvkvm-list –k command.

To query information about a single VM on CVK, use the cha cvkvm-list vm –k command.

To query application HA information on a VM, use the cha appha-list vm –k command.

To set the log level for the cvk_ha process, use the cha set-loglevel mode –k command. The mode can be debug, info, error, warning, or fatal, and the default mode is info.

To query the freeze state of a VM, use the virsh metadata vmname umount-freezing command.

cvm_ha-related

Configuration and file:

The service process is located at /usr/sbin/cvm_ha.

The log file is located at /var/log/cas_ha/cvm_ha.log.

Common commands:

To operate the process, use the service cvm_ha operate command. The operate parameter can be status, start, stop, or restart.

To view the list of clusters managed by HA, use the cha cluster-list command.

To view the status of a cluster managed by HA, use the cha cluster-status cluster-id command.

Problem location

Performance data issue

Symptom: The VM performance data cannot be obtained, and the VM icon is displayed in blue at the front end.

Reason: There are three main reasons for the issue: frontend, network, and backend problems.

Fast location: In cas_mon debug mode, view reported data in /tmp/mon_pdc.xml.

1. To enable cas_mon debug mode, use the cha set-loglevel debug –m command.

2. After one performance data reporting internal, identify whether the file /tmp/mon_pdc.xml exists.

3. If the file /tmp/mon_pdc.xml exists, performance data is reported as normal on the cas_mon of the CVK where the VM is located. Then, the CVM casserver service and management network are checked.

4. If the file /tmp/mon_pdc.xml does not exist, check whether the cas_mon log file include Failed to send performance data to cvm. If not, check whether the cas_mon process is running, collect Err-level log information in the cas_mon log file, and then contact H3C Support for help. If yes, check whether the CVM IP configured in the current CVK is correct by executing the cat /etc/cvk/cvm_info.conf | grep address command. If the CVM IP is correct, troubleshoot the management network. If the CVM IP is incorrect, there might be multiple CVMs managing the CVK, and troubleshoot the network setup.

Remarks:

· After completing the operation, restore the log level to info by using cha set-loglevel info –m command.

· To view the specific performance data reported, view the /tmp/mon_pdc.xml file by using the cat /tmp/mon_pdc.xml | xmllint –format - command.

· Complete cas_mon operations on the CVK host where VM is located.

· To obtain the host performance data, use the cha get-perf-data host –m command.

· To obtain the VM performance data, use the cha get-perf-data vm –m command.

Common backend reasons:

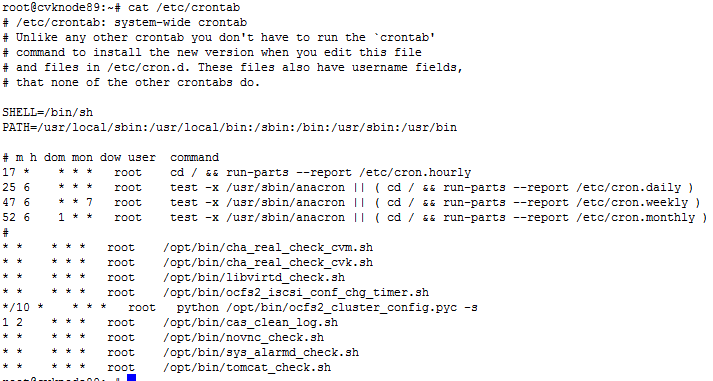

· Some operations caused the /etc/crontab service to malfunction, and cas_mon wasn't initiated.

· The CVK configuration file /etc/cvk/cvm_info.conf records an incorrect CVM IP address.